When it comes to web scraping, ‘ChatGPT’ is a common word that appears everywhere nowadays. So can ChatGPT help with web scraping? It’s a tricky question. The fact is, ChatGPT does not perform web scraping directly. But it can help with web scraping alongside other tools and libraries.

ChatGPT is primarily designed for generating human-like responses and performing Natural Language Processing tasks, but it also helps with web scraping in various ways. It can generate a scraper when prompts are given. It can also make use of its feature named ‘code interpreter’ (now known as Advanced Data Analytics) and a third-party plugin named ‘Scraper’ for web scraping.

In this article, you will learn how to prompt ChatGPT for generating a Python scraper, using which you can easily scrape any website.

How to Automate Web Scraping Using ChatGPT

ChatGPT cannot fully automate web scraping. But it helps in generating instructions, providing guidance, and handling the mentioned tasks without any hassle. Let’s understand in detail the workflow of ChatGPT in creating a scraper.

-

Input Processing

Using ChatGPT to scrape websites is a great choice as it can process the user inputs and understand the requirements for the web scraping process. For example, you can specify the URL to scrape.

-

Generation of Scraping Instructions

ChatGPT can generate instructions and commands required for web scraping based on user inputs. The generated instruction/code snippets tell how to extract specific data from the website.

Also Read: Navigating and Extracting Data -

Integration with Web Scraping Libraries

The generated instructions can be combined with different types of scraping libraries, such as BeautifulSoup, to implement an actual scraping process. These can also be used to extract specific data points from the HTML content, perform pagination or navigation, and save the data.

Also Read: Web scraping with Python -

Error Handling and Edge Cases

When you use ChatGPT for web scraping, it assists in error handling and addressing edge cases that may arise during scraping. It can provide guidance on handling various scenarios, such as handling dynamic website content, avoiding anti-scraping measures, or dealing with different data formats.

How to Create a Web Scraper Using ChatGPT

You can generate a Python-based web scraper using ChatGPT to scrape websites. For this, provide these instructions to the ChatGPT as the input. Here XPath is used to locate elements from the HTML. Using these elements you will navigate to product pages and extract data from them.

Build a Python-based scraper

1. Start URL - "https://scrapeme.live/shop/"

2. Navigate to each product page xpath='//li/a[contains(@class, "product__link")]' and collect the below data points:

price = '//p[@class="price"]/span/span/following-sibling::text()'

product title = '//h1[@class="product_title entry-title"]/text()'

description = '//div[contains(@class,"product-details__short-description")]/p/text()'

3. After extracting the details from the product page, do the pagination till 5 pages of the listing page

4. Utilize the Python requests module and the lxml parser to send HTTPS requests and extract the required data from the web pages.

5. Implement error handling by checking the response from the server or website. If the response status code is not 200, raise an exception indicating the failure and suggest a retry for that specific request.

6. All requests should be made through a proxy IP 192.168.1.10:8000 and with the following headers

headers = {

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'accept-language': 'en-GB,en;q=0.9',

'cache-control': 'no-cache',

'pragma': 'no-cache',

'sec-ch-ua': '"Google Chrome";v="113", "Chromium";v="113", "Not-A.Brand";v="24"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Linux"',

'sec-fetch-dest': 'document',

'sec-fetch-mode': 'navigate',

'sec-fetch-site': 'none',

'sec-fetch-user': '?1',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36',

}

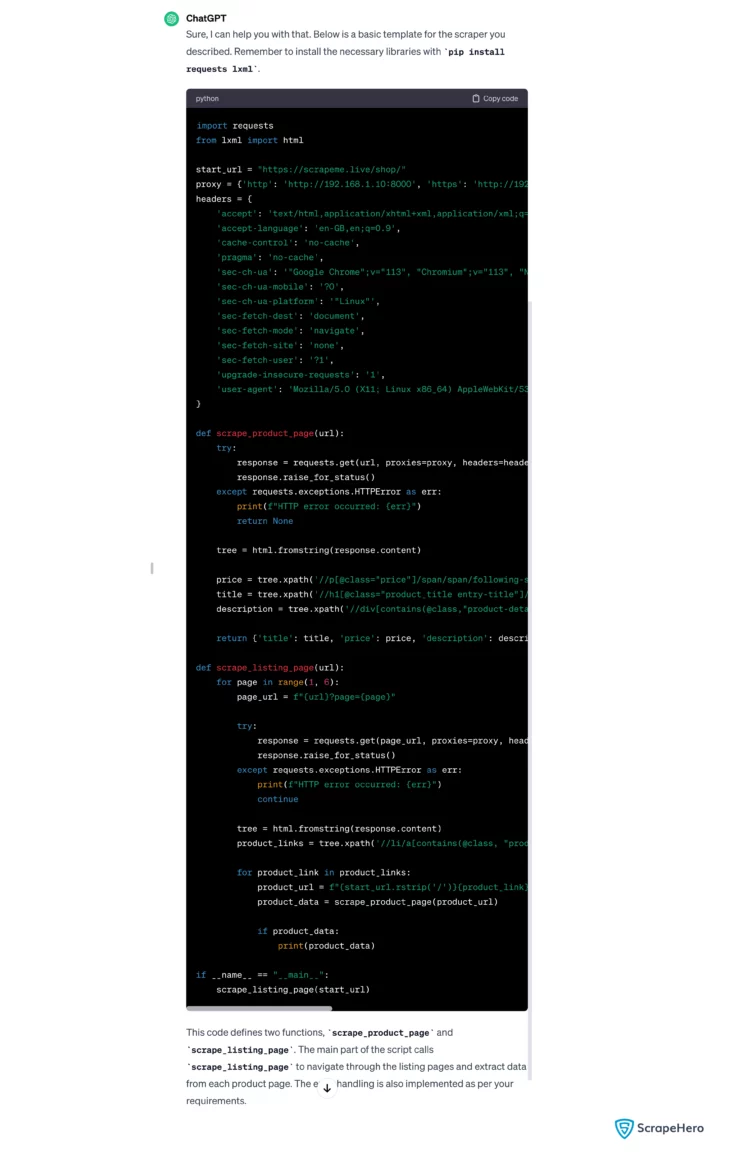

Output Generated by ChatGPT

ChatGPT generates the following output as a result of the instructions given by the user.

import requests

from lxml import html

start_url = "https://scrapeme.live/shop/"

proxy = {'http': 'http://192.168.1.10:8000', 'https': 'http://192.168.1.10:8000'}

headers = {

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,/;q=0.8,application/signed-exchange;v=b3;q=0.7',

'accept-language': 'en-GB,en;q=0.9',

'cache-control': 'no-cache',

'pragma': 'no-cache',

'sec-ch-ua': '"Google Chrome";v="113", "Chromium";v="113", "Not-A.Brand";v="24"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Linux"',

'sec-fetch-dest': 'document',

'sec-fetch-mode': 'navigate',

'sec-fetch-site': 'none',

'sec-fetch-user': '?1',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36',

}

def scrape_product_page(url):

try:

response = requests.get(url, proxies=proxy, headers=headers)

response.raise_for_status()

except requests.exceptions.HTTPError as err:

print(f"HTTP error occurred: {err}")

return None

tree = html.fromstring(response.content)

price = tree.xpath('//p[@class="price"]/span/span/following-sibling::text()')[0].strip()

title = tree.xpath('//h1[@class="product_title entry-title"]/text()')[0].strip()

description = tree.xpath('//div[contains(@class,"product-details__short-description")]/p/text()')[0].strip()

return {'title': title, 'price': price, 'description': description}

def scrape_listing_page(url):

for page in range(1, 6):

page_url = f"{url}?page={page}"

try:

response = requests.get(page_url, proxies=proxy, headers=headers)

response.raise_for_status()

except requests.exceptions.HTTPError as err:

print(f"HTTP error occurred: {err}")

continue

tree = html.fromstring(response.content)

product_links = tree.xpath('//li/a[contains(@class, "product__link")]/@href')

for product_link in product_links:

product_url = f"{start_url.rstrip('/')}{product_link}"

product_data = scrape_product_page(product_url)

if product_data:

print(product_data)

if __name__ == "__main__":

scrape_listing_page(start_url)

Limitations of Using ChatGPT to Scrape Websites

ChatGPT is more than capable of helping you create a web scraper. However, it’s essential to be aware of the limitations involved in ChatGPT scraping. They are:

-

Anti-Scraping Measures can Pose Challenges

Even though you can use ChatGPT for web scraping, it may not be able to bypass the anti-scraping technologies used by the websites. This requires expertise in web scraping and related tools, which ChatGPT lacks.

-

Maintenance and Scalability

As web scraping projects evolve, maintenance and scalability become more challenging. ChatGPT can’t provide instructions to address these challenges, including handling large amounts of data.

-

Difficulty in Handling Complex Scraping Scenario

Web scraping can include handling complex data, dynamic content, maintaining sessions, etc. A ChatGPT scraper may not be able to handle such situations.

Use Cases

While there are certain limitations to using ChatGPT to scrape websites, there are many use cases where it can prove to be a valuable asset for web scraping. Some instances include:

-

Automation Based on User Input

ChatGPT can automate repetitive tasks by generating code snippets or instructions based on user input, thus reducing manual effort.

-

Rapid Prototyping

ChatGPT can rapidly build a prototype for web scraping with minimal functionality based on initial instructions from the user. This prototype serves as a starting point for developing scrapers and checking the feasibility of said scrapers.

-

Error Handling and Troubleshooting

During web scraping using ChatGPT, it can help troubleshoot common problems or errors encountered.

Wrapping Up

Despite its limitations, ChatGPT serves as an invaluable resource for web scraping for beginners as well as experienced users. For creating simple web scrapers, ChatGPT comes in handy. However, for building and maintaining web scrapers, which can be challenging, you need a more streamlined, speedy, and hassle-free solution.

Also when dealing with dynamic web pages, using ChatGPT is not a valid solution. Instead, you can use ScrapeHero Cloud, which offers pre-built crawlers and APIs for all your web scraping needs. It is affordable, fast, and reliable, offering a no-code approach to users without extensive technical knowledge.

For large-scale data extraction, you can consult ScrapeHero, as we can provide you with advanced, bespoke, and custom solutions for your needs. ScrapeHero web scraping services provide complete processing of the data pipeline, from data extraction to data quality checks, to boost your business.

Frequently Asked Questions

-

Can ChatGPT perform web scraping?

Fully automated web scraping is not possible with ChatGPT scraping. But with the use of the Scraper plugin, ChatGPT can perform web scraping.

-

How to use AI in web scraping?

BeautifulSoup, Selenium, TensorFlow, and PyTorch are commonly used to implement AI in web scraping.

We can help with your data or automation needs

Turn the Internet into meaningful, structured and usable data