This article outlines a few methods to scrape Google Reviews data. This could effectively export Google reviews data to Excel or other formats for easier access and use.

There are three methods to scrape Google Reviews:

1. Scrape Google Reviews with coding: Building a web scraper in Python or JavaScript

2. Scrape Google Reviews without coding:

- Using the ScrapeHero Cloud’s Google Reviews Scraper, a no-code scraping tool

- Using the ScrapeHero Cloud’s Google Reviews Scraper API

3. ScrapeHero Cloud offers you ready-made web crawlers and real-time APIs, which are the easiest way to extract data from websites and download it into spreadsheets with a few clicks.

Don’t want to code? ScrapeHero Cloud is exactly what you need.

With ScrapeHero Cloud, you can download data in just two clicks!

Building a Google reviews scraper in Python/JavaScript to extract

In this section, we will guide you on how to scrape Google Reviews using either Python or JavaScript. We will utilize the browser automation framework called Playwright to emulate browser behavior in our code.

One key advantage of this approach is its ability to bypass standard blocks to prevent scraping. However, familiarity with the Playwright API is necessary for practical use.

You could also use Python Requests, LXML, or Beautiful Soup to build a Google Maps scraper without using a browser or a browser automation library. But bypassing the anti scraping mechanisms put in place can be challenging and is beyond the scope of this article.

Here are the steps to scrape Google Maps data using Playwright:

Step 1: Choose either Python or JavaScript as your programming language.

Step 2: Install Playwright for your preferred language:

JavaScript

Python

JavaScript

const { chromium } = require('playwright');

const fs = require('fs');

async function run() {

const browser = await chromium.launch({

headless: false

});

/* Custom variables */

const searchTerm = 'Burj Khalifa';

// Creating new context and page.

const context = await browser.newContext();

const page = await context.newPage();

// navigating to google.com

await page.goto('https://www.google.com/');

// Searching the search term

await page.getByRole('combobox', { name: 'Search' }).click();

await page.getByRole('combobox', { name: 'Search' }).type(searchTerm);

await page.getByRole('combobox', { name: 'Search' }).press('Enter');

// clicking the review button

await page.locator('xpath=(//a[@data-async-trigger="reviewDialog"])[1]').click();

let data = await extractData(page);

saveData(data);

// Closing the browser instance

await context.close();

await browser.close();

}

/**

* This function will extract the necessary data.

* @param {page} page the page object that the data to be scraped.

* @returns {[object]} The scraped data as object.

*/

async function extractData(page) {

let dataToSave = [];

// Necessary selectors.

const xpathAllReviews = '//div[@jscontroller="fIQYlf"]';

const xpathMoreButton = "//a[@class='review-more-link']";

const xpathTitle = "//div[@class='TSUbDb']/a";

const xpathRating = "//g-review-stars[@class='lTi8oc']/span";

const xpathReviews = '//span[@jscontroller="MZnM8e"]';

const allReviews = page.locator(xpathAllReviews);

const allReviewsCount = await allReviews.count();

for (var index= 0; index < allReviewsCount ; index++) {

const element = await allReviews.nth(index);

// Clicking more button if the review is shortened.

const moreBtn = element.locator(xpathMoreButton)

if(await moreBtn.count()>0) {

try {

await moreBtn.click();

await page.waitForTimeout(2500);

}

catch {}

}

// Scraping necessary data.

const title = await element.locator(xpathTitle).innerText();

const rating = await element.locator(xpathRating).getAttribute("aria-label")

const review = await element.locator(xpathReviews).innerText();

let rawDataToSave = {

"author_name": title,

"rating": rating,

"review": review

}

// Collecting to a list.

dataToSave.push(rawDataToSave)

}

return dataToSave;

}

/**

* This function used to save the data as json file.

* @param {[object]} data the data to be written as json file.

*/

function saveData(data) {

let dataStr = JSON.stringify(data, null, 2)

fs.writeFile("google_reviews.json", dataStr, 'utf8', function (err) {

if (err) {

console.log("An error occurred while writing JSON Object to File.");

return console.log(err);

}

console.log("JSON file has been saved.");

});

}

run();

Python

import asyncio

import json

from playwright.async_api import Playwright, async_playwright

async def extract_data(page) -> list:

"""

Extracts the results information from the page

Args:

page: Playwright page object

Returns:

A list containing details of results as a dictionary. The dictionary

has title, review count, rating, address of various results

"""

review_box_xpath = '//div[@jscontroller="fIQYlf"] '

review_xpath = '//span[@data-expandable-section]'

secondary_review_xpath = '//span[@class="review-full-text"]'

author_xpath = '//div[@class="TSUbDb"]'

rating_xpath = '//g-review-stars/span'

await page.wait_for_selector(review_box_xpath)

review_box = page.locator(review_box_xpath)

data = []

for review_box_index in range(await review_box.count()):

result_elem = review_box.nth(review_box_index)

review = await result_elem.locator(review_xpath).inner_text()

review = review if review else await result_elem.locator(

secondary_review_xpath).inner_text()

author_name = await result_elem.locator(author_xpath).inner_text()

rating = await result_elem.locator(

rating_xpath).get_attribute('aria-label')

rating = rating.strip(', ') if rating else None

data.append({

'author_name': author_name,

'review': review,

'rating': rating

})

return data

async def run(playwright: Playwright) -> None:

"""

Main function which launches browser instance and performs browser

interactions

Args:

playwright: Playwright instance

"""

browser = await playwright.chromium.launch(

headless=False,

proxy={'server': 'proxy url'}

)

context = await browser.new_context()

# Open new page

page = await context.new_page()

# Go to https://www.google.com/

await page.goto("https://www.google.com/")

# Type search query

search_term = "burj khalifa"

await page.locator("[aria-label="Search"]").type(search_term)

# Press enter to search in google

await page.keyboard.press('Enter')

# wait for review button

await page.locator(

'//a[@data-async-trigger="reviewDialog"]').first.wait_for(

timeout=10000)

# Click reviews button

await page.locator('//a[@data-async-trigger="reviewDialog"]').first.click()

# Initialize the number of pagination required

pagination_limit = 3

# Iterate to load reviews for mentioned number of pages

for page_number in range(pagination_limit):

await page.locator('//div[@class="review-dialog-list"]').hover()

await page.mouse.wheel(0, 100000)

page_number += 1

await page.wait_for_timeout(2000)

# Extract all displayed reviews

data = await extract_data(page)

# Save all extracted data as a JSON file

with open('google_reviews.json', 'w') as f:

json.dump(data, f, indent=2)

# ---------------------

await context.close()

await browser.close()

async def main() -> None:

async with async_playwright() as playwright:

await run(playwright)

asyncio.run(main())

This code shows how to scrape Google Reviews of the Burj Khalifa from Google using the Playwright library in Python and JavaScript. The scripts have two main functions:

- run function: This function takes a Playwright instance as input and performs the scraping process by launching a Chromium browser instance and navigating to Google. It fills in a search query and waits for results before calling the extract_details function to store the data in a google_reviews.json file.

- extract_data function: This function takes a Playwright page object as input and returns a list of dictionaries containing details of each restaurant, including title, review count, rating, address, and phone number.

Finally, the main function uses the async_playwright context manager to execute the run function. A JSON file containing the listings from your executed Google Maps data scraping will be created.

Step 4: Run your code and collect the scraped data from Google Maps.

Disclaimer: The xpaths utilized in this tutorial may vary based on the location from which Google Maps is accessed. Google dynamically renders different xpaths for different regions. In this tutorial, the xpaths used were generated while accessing Google Maps from the United States.

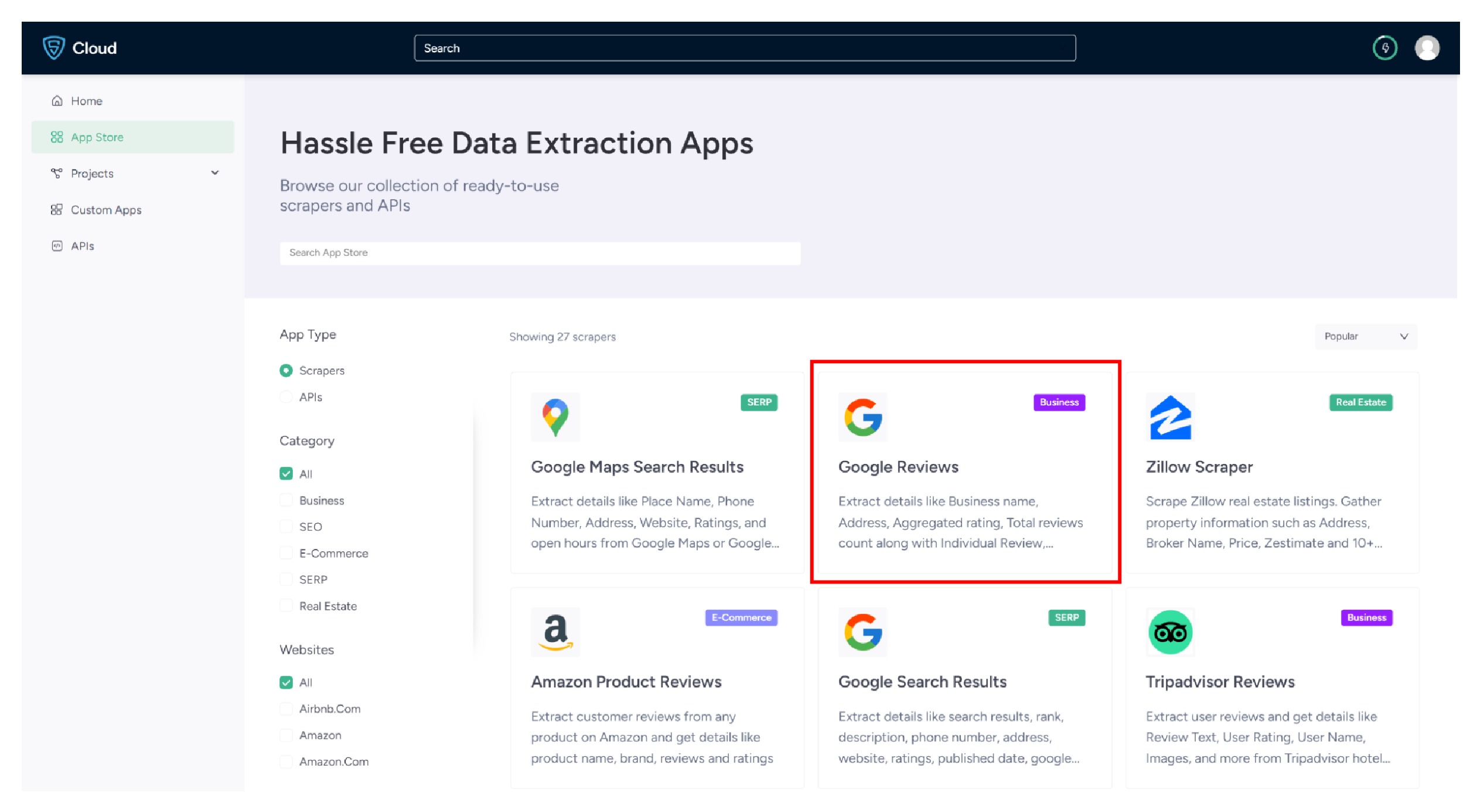

Using No-Code Google Reviews Scraper by ScrapeHero Cloud

The Google Reviews Scraper by ScrapeHero Cloud is a convenient method for scraping reviews from Google. It provides an easy, no-code method for scraping data, making it accessible for individuals with limited technical skills.

This section will guide you through the steps to set up and use the Google Maps scraper.

1. Sign up or log in to your ScrapeHero Cloud account.

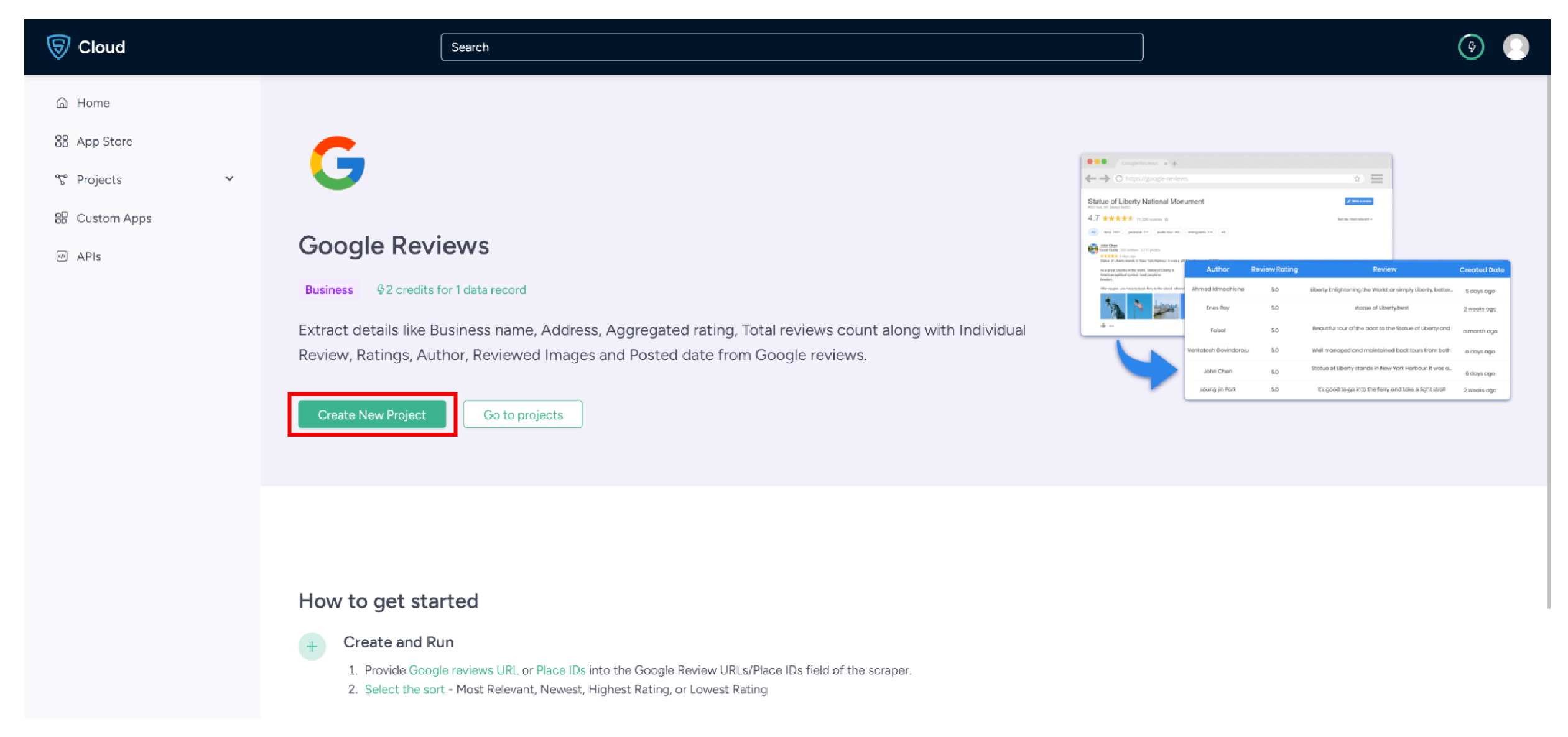

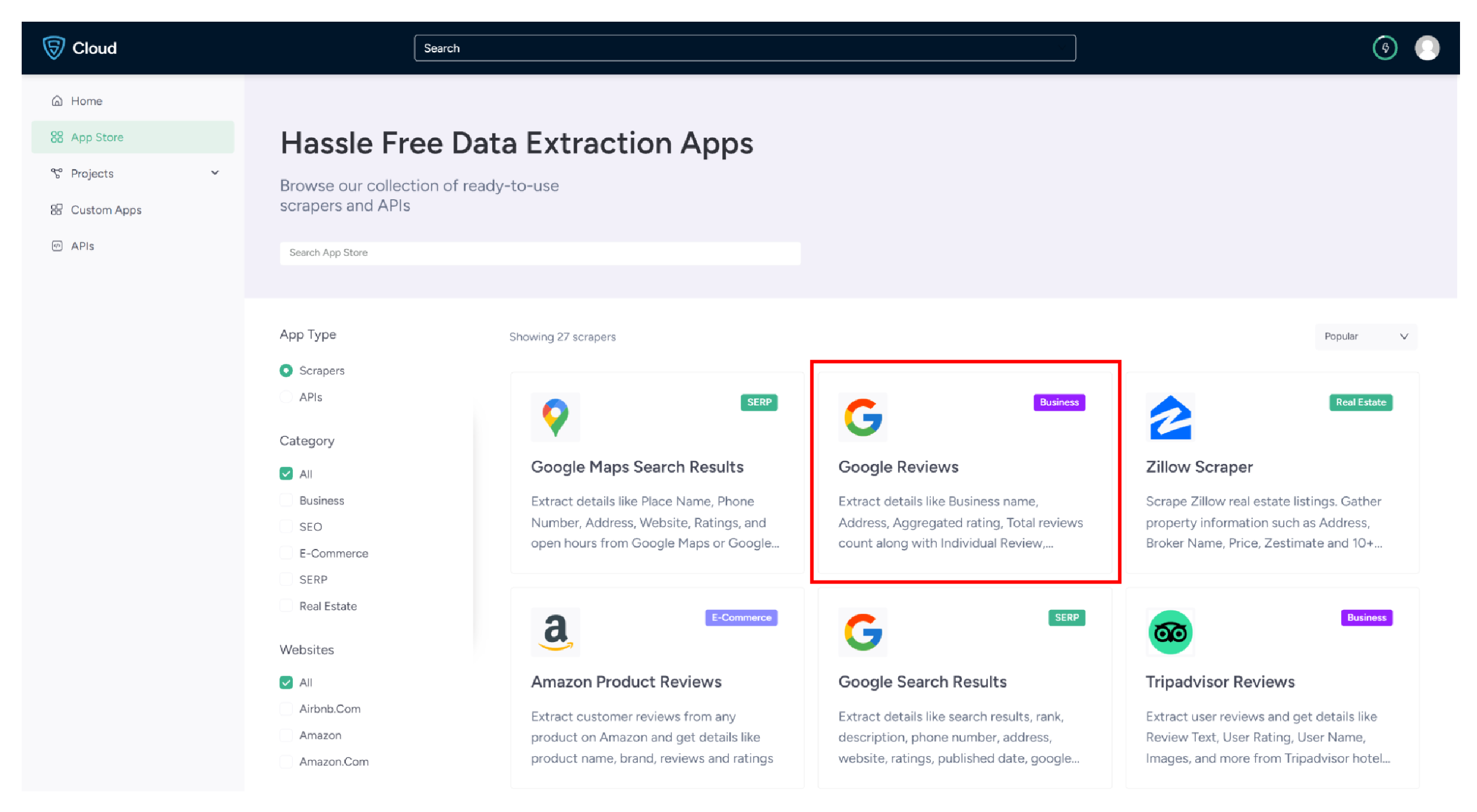

2. Go to the Google Reviews scraper by ScrapeHero Cloud in the marketplace.

3. Click the Create New Project button.

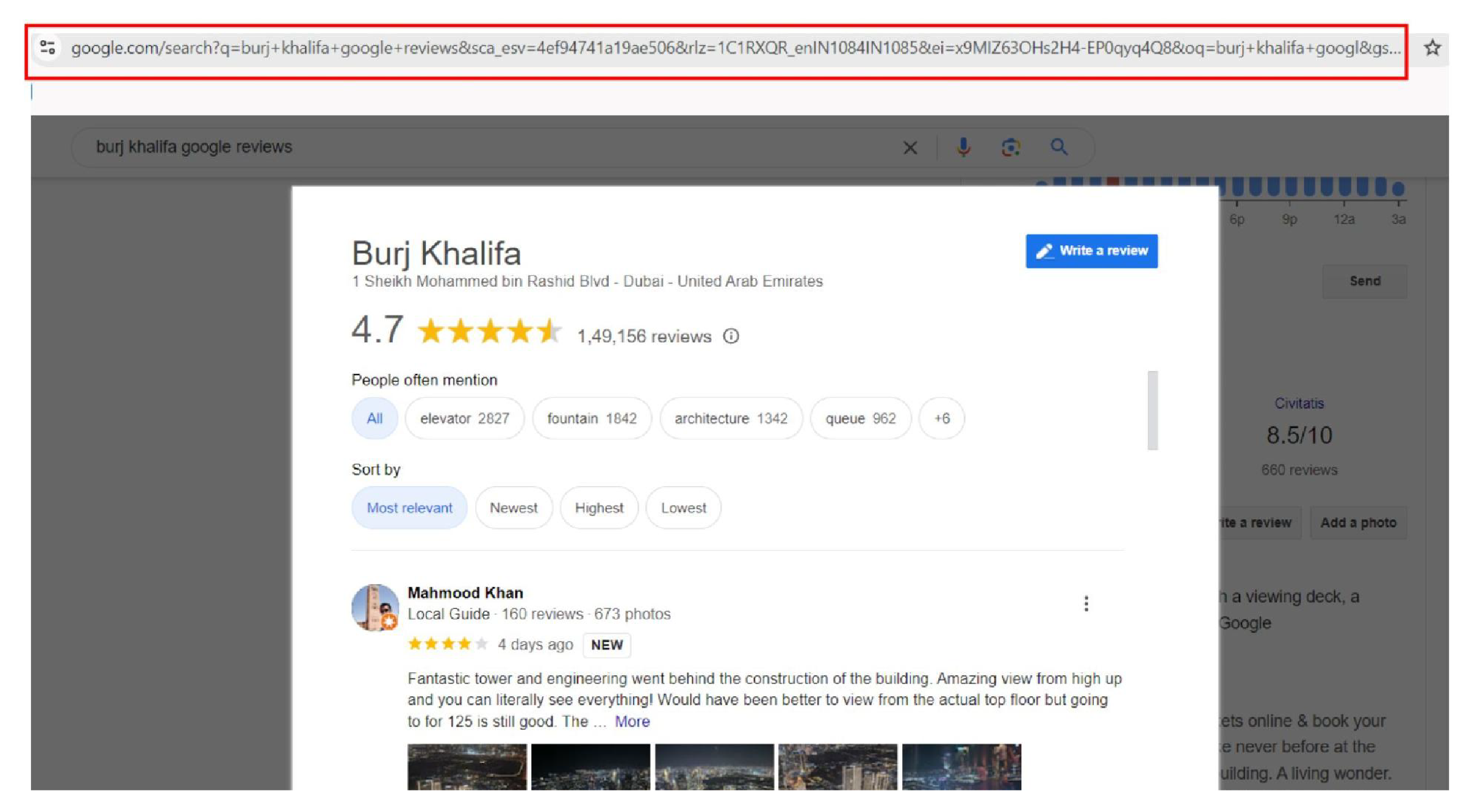

4. To get the reviews, you need to either provide a Google Review URL or place ID. You can get the Google Review URL from the search bar when you search for a query.

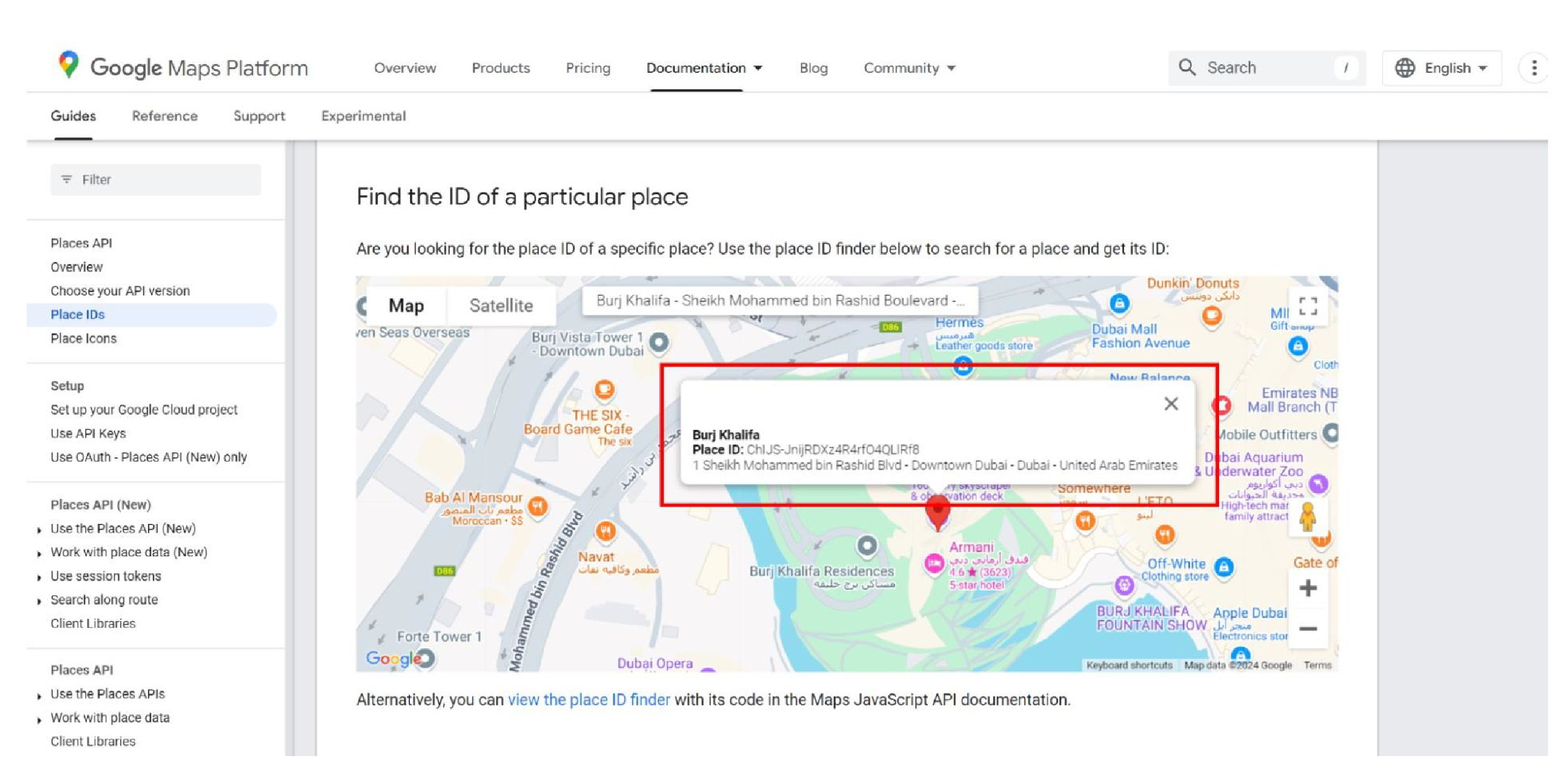

If you want to provide the place ID, you will get it from Google Maps Platform Place IDs.

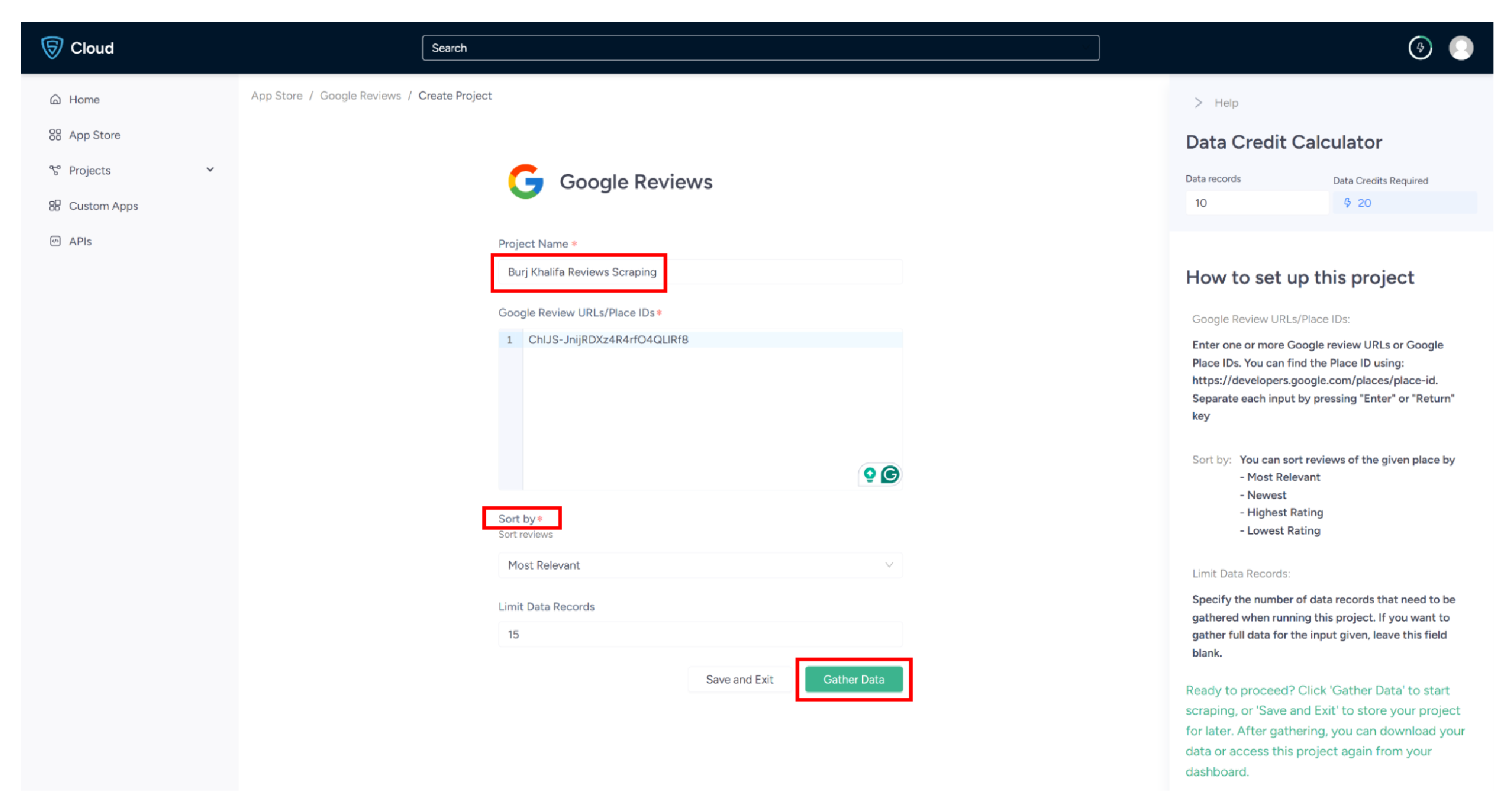

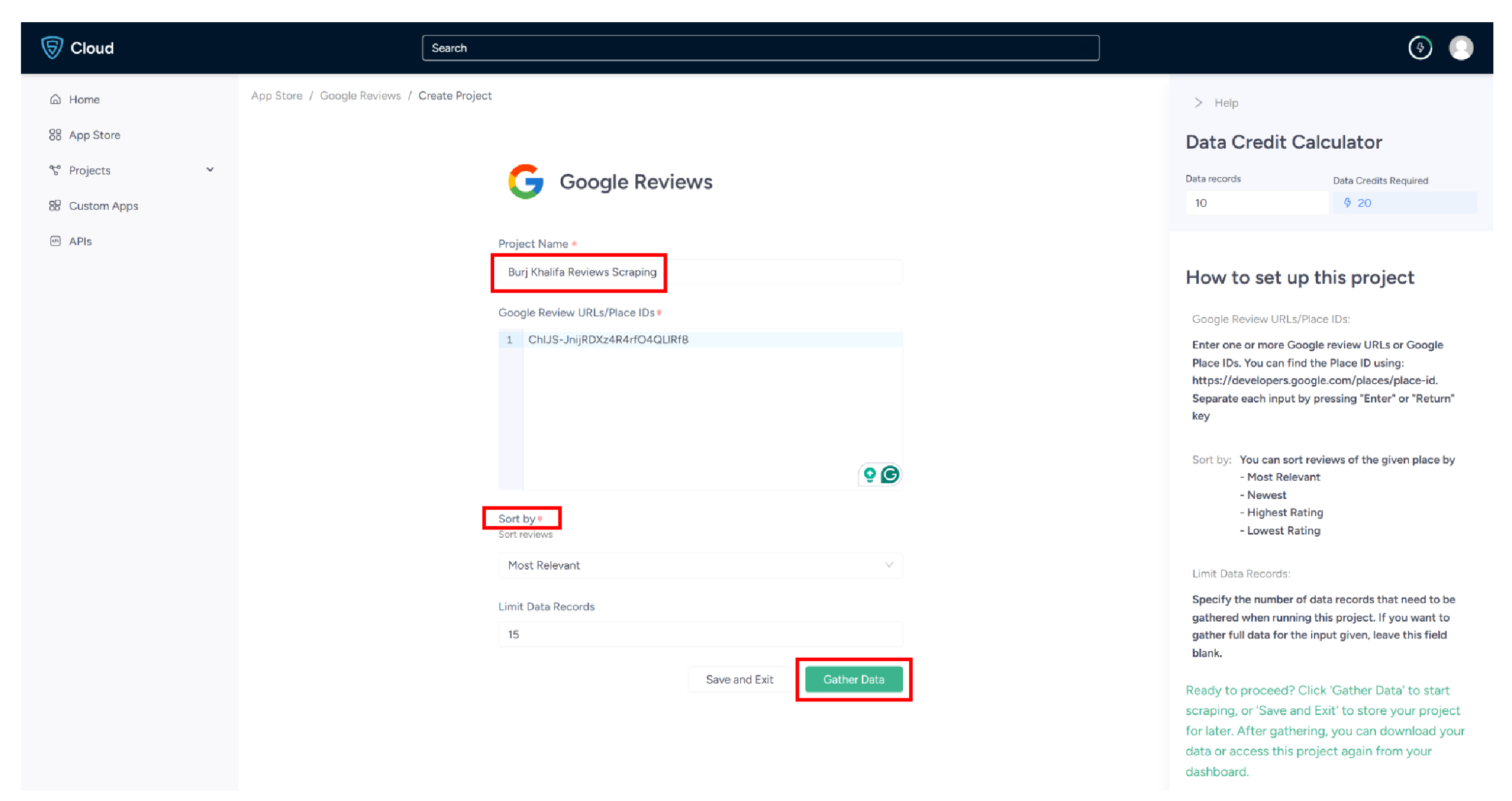

5. In the field provided, enter a project name, Google Review URL, or Google place ID and the maximum number of records you want to gather.

You can also sort the reviews by Most Relevant, Newest, Highest Rating, and Lowest Rating. Then, click the Gather Data button to start the scraper.

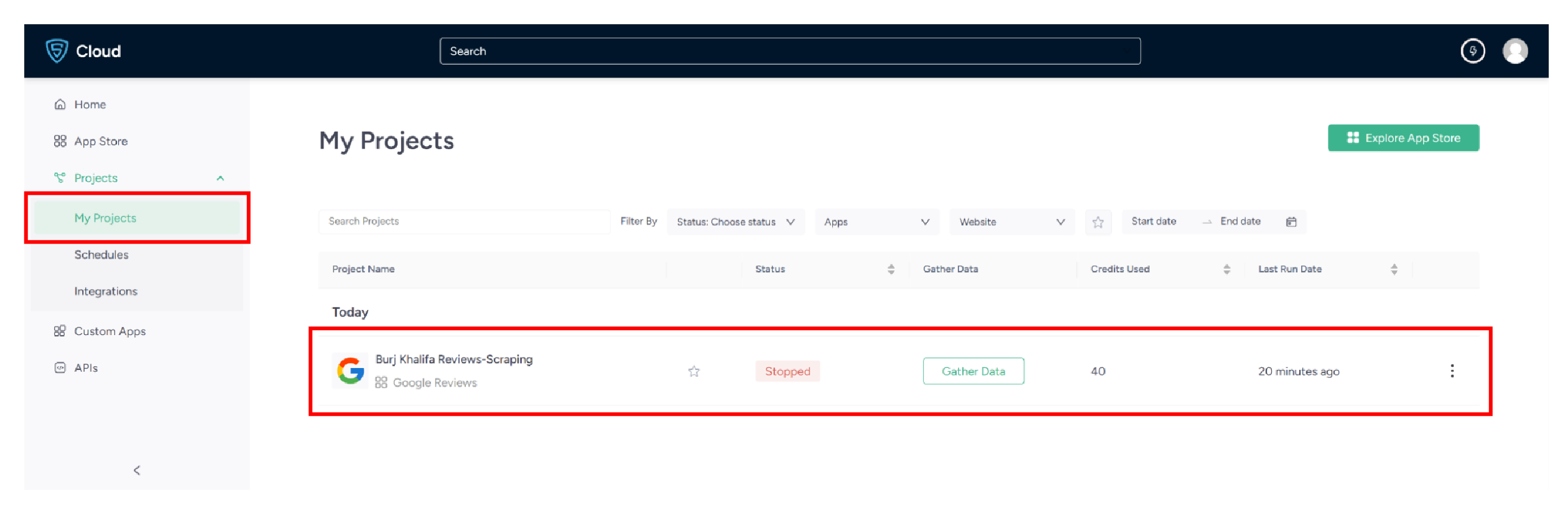

6. The scraper will start fetching data for your queries, and you can track its progress under the Projects tab.

7. Once it is finished, you can view the data by clicking on the project name. A new page will appear, and under the Overview tab, you can see and download the data.

8. You can also pull Google Reviews data into a spreadsheet from here. Just click on Download Data, select Excel, and open the downloaded file using Microsoft Excel.

Scrape Google Reviews and Ratings

Using Google Reviews Scraper API by ScrapeHero Cloud

The ScrapeHero Cloud Google Reviews API is an alternate tool for extracting reviews from Google. This user-friendly API enables those with minimal technical expertise to obtain user review data effortlessly from Google.

Here are steps to configure and utilize this API:

1. Sign up or log in to your ScrapeHero Cloud account.

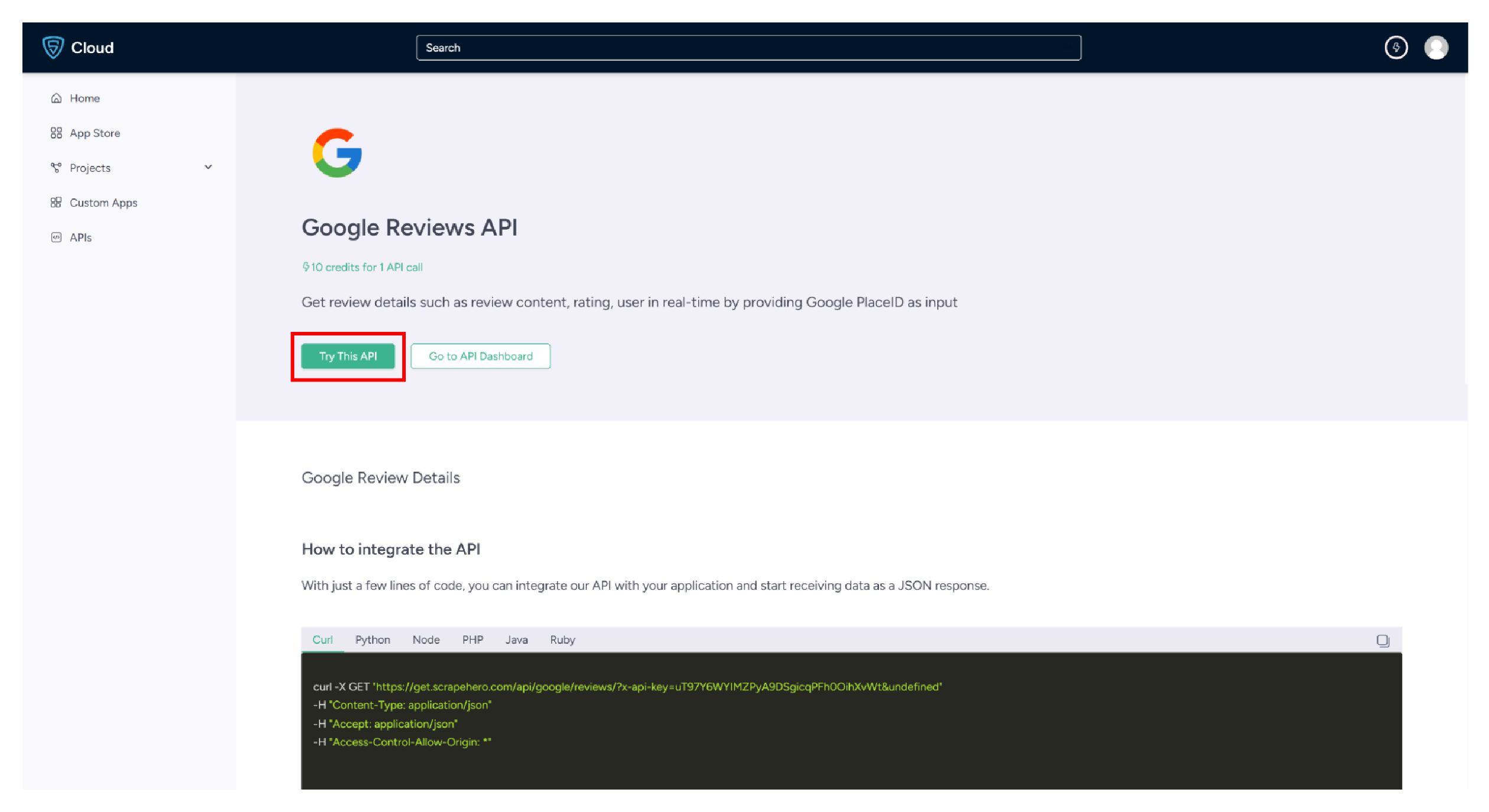

2. Go to the Google Reviews scraper API by ScrapeHero Cloud in the marketplace.

3. Click on the Try this API button.

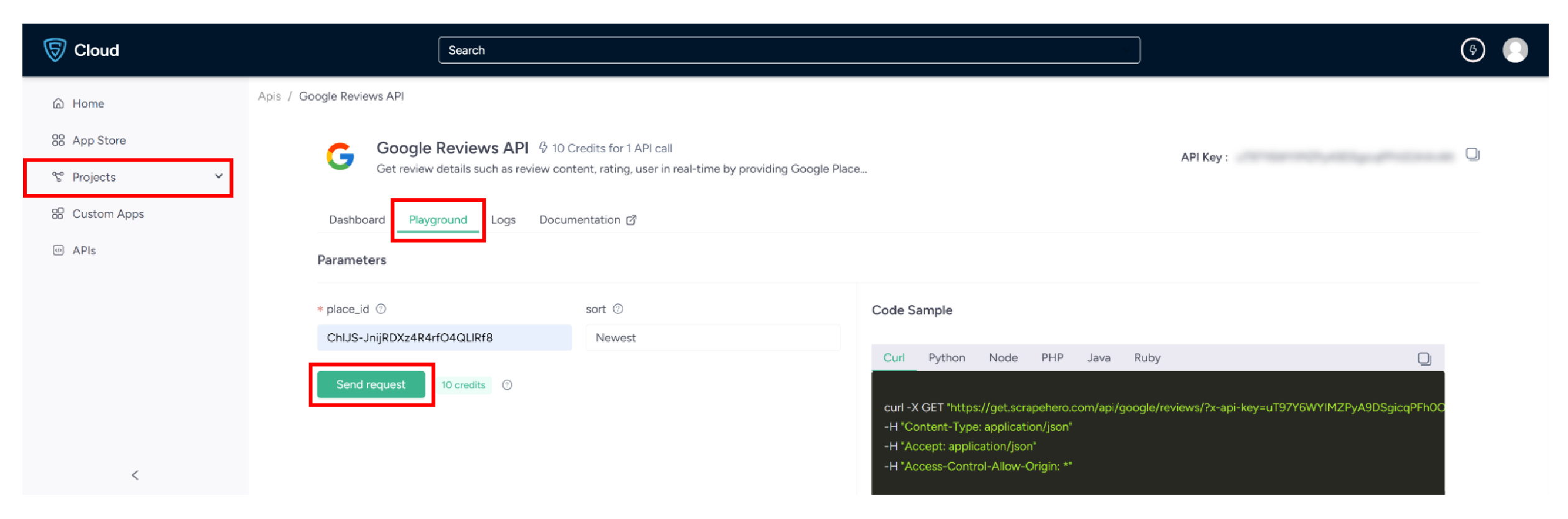

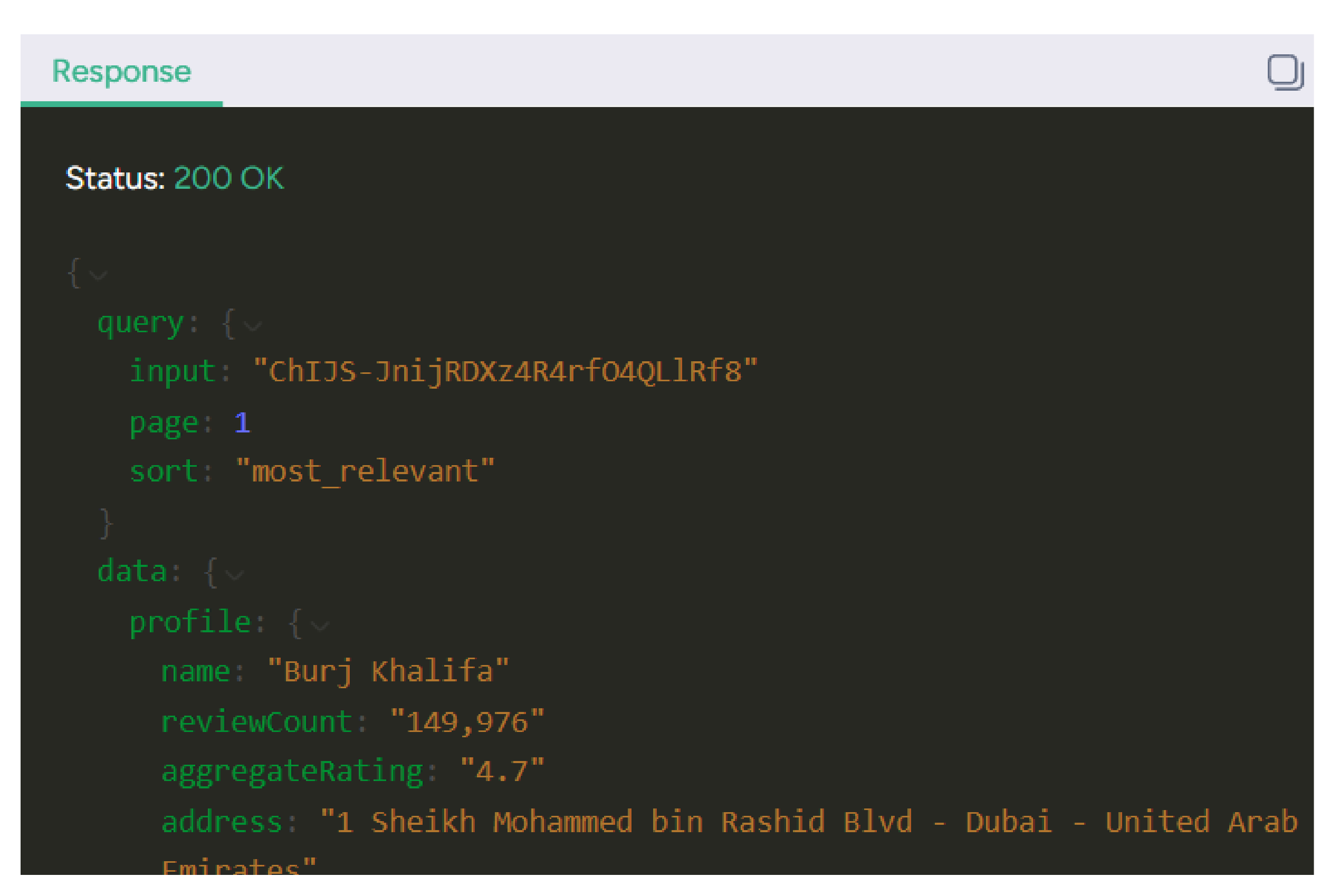

9. In the field provided enter the Google place ID. You can also sort the view if you want. Click Send request.

10. You will get the results in the response window on the bottom right side of the page.

Uses cases of Google Reviews Data

If you’re unsure why you should scrape reviews from Google, here are some use cases where this data would be helpful:

-

Business Reputation Management

Organizations monitor their public image and analyze customer opinions about their products and services through thorough review analysis.

-

Competitor Analysis

Businesses examine competitors’ Google Reviews data to understand their competitive landscape better.

-

Product Development

Review data helps businesses focus on important areas of their products or services.

-

Marketing

Using Google Reviews, organizations create better marketing strategies that target their desired audience effectively.

-

Customer Insights

This data provides valuable insights into how customers use and feel about products or services.

Read More: How to Scrape Google Without Coding

We can help with your data or automation needs

Turn the Internet into meaningful, structured and usable data

Frequently Asked Questions

Is it possible to scrape Google Reviews?

Yes. Google Reviews is extracting customer feedback from the Google Knowledge Panel associated with a specific business or locale. You can scrape Google Reviews using different methods.

What is the subscription fee for the Google Reviews Scraper by ScrapeHero?

ScrapeHero provides a comprehensive pricing plan for both Scrapers and APIs. To know more about the pricing, visit our pricing page.

Is it legal to scrape Google reviews?

The legality of web scraping depends on the legal jurisdiction, i.e., laws specific to the country and the locality. Gathering or scraping publicly available information is not illegal.

Generally, Web scraping is legal if you are scraping publicly available data.

Please refer to our Legal Page to learn more about the legality of web scraping.