Web scraping is useful in any industry where projects depend on gathering and analyzing data. In most cases, such projects need large volumes of constantly updated data.

This article lists the top 15 ideas for web scraping projects in Python, from building a tech job portal to a personalized global news aggregator. The ideas span different sectors and suit beginners as well as more experienced developers.

Idea 1: Building Your Own Tech Job Portal by Scraping Glassdoor Data

Develop your own tech job portal by scraping Glassdoor data such as company reviews and salary information. This data gives users valuable insights into current trends and opportunities in the tech job market.

Steps To Create the Tech Job Portal:

- Set up your Python environment by installing the necessary libraries

- Understand Glassdoor’s layout to identify the elements containing data

- Write a scraper to fetch pages, parse the HTML, and handle pagination

- Clean the scraped data using Pandas for any missing values

- Develop a job portal interface with search and filter functionalities

- Deploy the application using a suitable platform
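
The parsing and cleaning steps above can be sketched as follows. This is a minimal illustration, not Glassdoor's actual markup: the `job-card`, `title`, `company`, and `salary` class names and the sample HTML are assumptions, and the standard library's `html.parser` stands in for BeautifulSoup so the snippet runs without extra installs.

```python
from html.parser import HTMLParser

# Hypothetical markup: real job pages use different, frequently changing class names.
SAMPLE_HTML = """
<div class="job-card"><span class="title">Data Engineer</span><span class="company">Acme</span><span class="salary">$120,000</span></div>
<div class="job-card"><span class="title">QA Analyst</span><span class="company">Globex</span></div>
"""

FIELDS = {"title", "company", "salary"}


class JobCardParser(HTMLParser):
    """Collects one dict per <div class="job-card"> element."""

    def __init__(self):
        super().__init__()
        self.jobs = []
        self._field = None  # field whose text is currently being read

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class", "")
        if tag == "div" and css_class == "job-card":
            # Start a new record with every field marked as missing.
            self.jobs.append(dict.fromkeys(sorted(FIELDS)))
        elif tag == "span" and css_class in FIELDS and self.jobs:
            self._field = css_class

    def handle_data(self, data):
        if self._field and data.strip():
            self.jobs[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_jobs(html):
    parser = JobCardParser()
    parser.feed(html)
    return parser.jobs


def clean_salary(job):
    """Convert '$120,000' to 120000; keep None when the salary is missing."""
    raw = job["salary"]
    job["salary"] = int(raw.replace("$", "").replace(",", "")) if raw else None
    return job


jobs = [clean_salary(job) for job in parse_jobs(SAMPLE_HTML)]
```

Records with a missing salary keep `None`, so a later Pandas step can decide whether to drop or fill them.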

Must-Use Tools:

- Python: Primary programming language

- BeautifulSoup: For parsing HTML and XML documents

- Pandas: For data manipulation and analysis

- Flask/Django: For creating the web application

- Heroku/AWS: For deploying the application

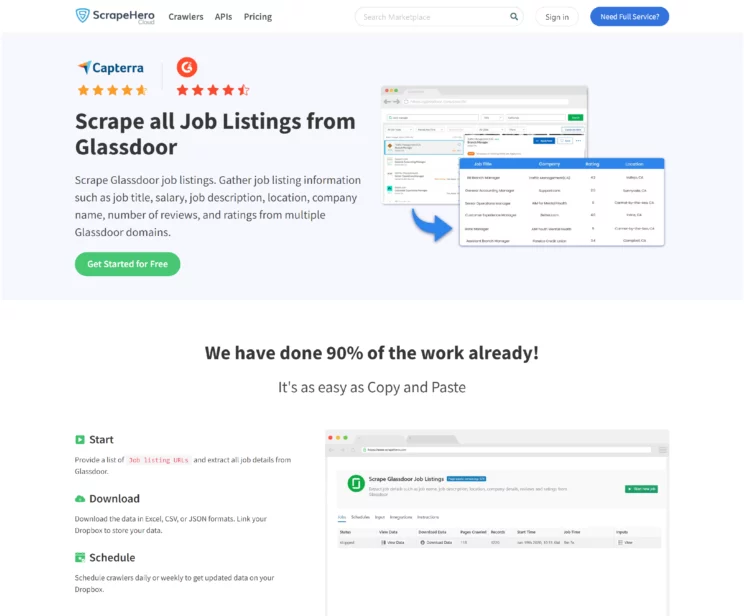

An Alternative to Scrape Glassdoor Data: ScrapeHero Glassdoor Listings Scraper

You can use ScrapeHero Glassdoor Listings Scraper, which is a prebuilt scraper from ScrapeHero Cloud exclusively designed to extract valuable data from Glassdoor, such as company reviews, salary reports, and job listings. This quick and efficient solution for data extraction is ideal if you seek an approach that minimizes the need for complex coding and setup.

Idea 2: Building a Dynamic Search Engine Rank Tracker for Businesses

Create a search engine rank tracking system that monitors where specific keywords rank in search engine results. Businesses and SEO professionals can use it to track the performance of their websites and adjust their strategies accordingly.

Steps to Create the Search Engine Rank Tracking System:

- Define target keywords and domains

- Fetch search engine results using a web scraping tool or API

- Parse, analyze, and store the results

- Develop a user-friendly dashboard and implement features like graphs

- Set up scheduled tracking at regular intervals

- Optimize the system, ensuring scalability and reliability

- Deploy and monitor the system to adjust as necessary to ensure accurate data
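
The "parse, analyze, and store" step boils down to finding where a domain first appears in an ordered list of result URLs. The sketch below assumes the URLs have already been fetched; `find_rank` and the sample URLs are hypothetical names for illustration.

```python
from urllib.parse import urlparse


def find_rank(result_urls, domain):
    """Return the 1-based position of the first result on `domain`, or None."""
    for position, url in enumerate(result_urls, start=1):
        host = urlparse(url).netloc.lower()
        if host.startswith("www."):
            host = host[4:]
        # Match the domain itself or any of its subdomains.
        if host == domain or host.endswith("." + domain):
            return position
    return None


# Hypothetical search results, in the order the search engine returned them.
results = [
    "https://www.example.org/guide",
    "https://blog.example.com/post",
    "https://example.net/",
]
rank = find_rank(results, "example.com")
```

Storing each `(keyword, date, rank)` triple in a database is what later makes the dashboard graphs possible.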

Must-Use Tools:

- Python: Primary programming language for backend development

- BeautifulSoup: For web scraping

- Selenium: For rendering JavaScript if needed

- Flask/Django: For building the web application

- Celery: For managing asynchronous task queues

- PostgreSQL/MySQL: For data storage

- Heroku/AWS: For hosting the application

Idea 3: Developing a Reliable Health Information Aggregator

Build a system to scrape health and medical websites and collect data regarding diseases, treatments, and drugs for researchers, healthcare professionals, and the general public. This system will provide centralized and reliable health information.

Steps to Develop the Health Information Aggregator:

- Identify reputable health and medical websites to scrape

- Set up a virtual environment to manage dependencies

- Develop scraping scripts to extract data such as disease descriptions and symptoms

- Clean and organize the data to ensure consistency and accuracy

- Develop a user-friendly web interface using a Python framework

- Schedule scripts to run at regular intervals

- Thoroughly test the system and validate the accuracy of the data

- Deploy the web application on a cloud platform to make it accessible to users globally
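
Cleaning and organizing data from several sources usually means merging records that describe the same condition. The following sketch assumes each scraped record is a dict with `condition`, `symptoms`, and `source` keys; those field names and the sample sources are illustrative assumptions.

```python
def normalize_name(name):
    """Lowercase and collapse whitespace so 'Type 2  Diabetes' matches 'type 2 diabetes'."""
    return " ".join(name.lower().split())


def merge_records(records):
    """Merge records for the same condition, keeping every symptom and source once."""
    merged = {}
    for record in records:
        key = normalize_name(record["condition"])
        entry = merged.setdefault(
            key, {"condition": key, "symptoms": set(), "sources": set()}
        )
        entry["symptoms"].update(s.strip().lower() for s in record["symptoms"])
        entry["sources"].add(record["source"])
    return merged


# Hypothetical records scraped from two different sites.
records = [
    {"condition": "Type 2  Diabetes", "symptoms": ["Fatigue", "Increased thirst"], "source": "site-a"},
    {"condition": "type 2 diabetes", "symptoms": ["fatigue ", "Blurred vision"], "source": "site-b"},
]
merged = merge_records(records)
```

Keeping the set of sources per entry makes it easier to show users where each piece of health information came from.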

Must-Use Tools:

- Python: Primary programming language

- BeautifulSoup: For web scraping

- Flask/Django: For building the web application

- SQLAlchemy: For database management

- PostgreSQL/SQLite: For storing data

- Heroku/AWS: For hosting the application

Idea 4: Creating a Real Estate Data Scraper for Zillow for Market Analysis

Build a scraper to extract real estate data from Zillow. The resulting system lets users analyze market trends, compare property values, spot investment opportunities, and make informed real estate decisions.

Steps to Create the Real Estate Data Scraper:

- Identify the specific data fields you want to scrape from Zillow

- Set up your Python environment

- Write the scraper to fetch web pages, parse the HTML, and extract the data

- Clean and store the extracted data appropriately

- Develop a data analysis interface and implement features like data visualization

- Automate and schedule data collection to keep the data up to date

- Test and debug the scraper thoroughly, and handle worst-case scenarios

- Deploy the application to a cloud platform
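
As one example of the cleaning and analysis steps, the sketch below converts scraped price strings to integers and computes a median price per ZIP code. It assumes prices arrive as full dollar amounts such as `$500,000`; the `zip` and `price` field names and the sample listings are hypothetical.

```python
import re
from collections import defaultdict
from statistics import median


def parse_price(text):
    """Turn '$1,250,000' into 1250000; return None when no digits are present.

    Assumes full dollar amounts; shorthand such as '$1.25M' is not handled.
    """
    digits = re.sub(r"[^\d]", "", text or "")
    return int(digits) if digits else None


def median_price_by_zip(listings):
    prices = defaultdict(list)
    for listing in listings:
        price = parse_price(listing.get("price"))
        if price is not None:  # skip listings such as 'Contact agent'
            prices[listing["zip"]].append(price)
    return {zip_code: median(values) for zip_code, values in prices.items()}


# Hypothetical listings for illustration.
listings = [
    {"zip": "98101", "price": "$500,000"},
    {"zip": "98101", "price": "$700,000"},
    {"zip": "98101", "price": "Contact agent"},
    {"zip": "10001", "price": "$1,250,000"},
]
medians = median_price_by_zip(listings)
```

The median is used rather than the mean because a few very expensive listings would otherwise skew the figure.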

Must-Use Tools:

- Python: For scripting and automation

- BeautifulSoup: For HTML parsing and web scraping

- Pandas: For data manipulation and cleaning

- Flask/Django: For creating the web application

- SQLite/CSV: For data storage

- Plotly/Matplotlib: For data visualization

- Celery with Beat: For task scheduling

- Heroku/AWS: For deploying the application

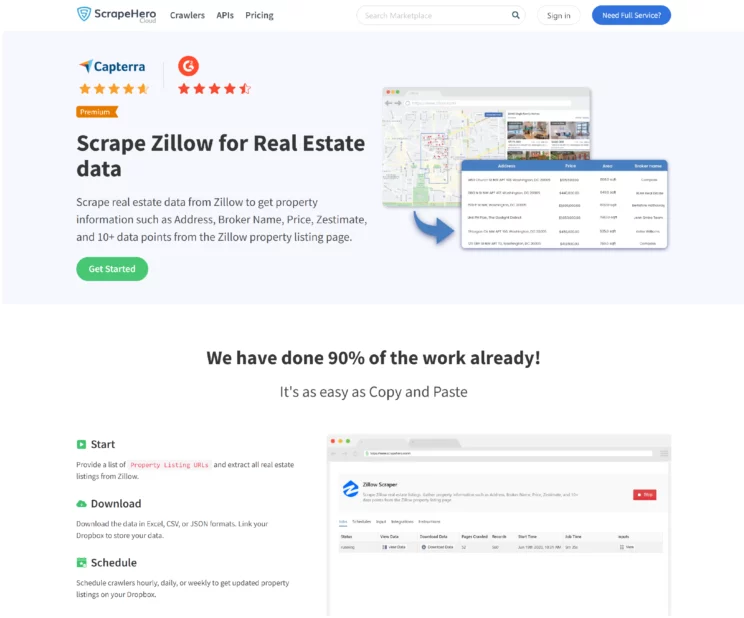

An Alternative to Scrape Zillow Data: ScrapeHero Zillow Scraper

You can consider using the prebuilt scraper from ScrapeHero Cloud, the ScrapeHero Zillow Scraper, which can extract all the essential data like addresses, broker names, and prices that you need for your project. You can use this premium scraper by subscribing to any of our paid plans.

Idea 5: Developing a Cutting-Edge Sports Performance Analytics System

Create a system for statistical analysis of sports data that can predict performance outcomes, evaluate player and team strengths, and offer insights into competition levels across various sports. This project can target sports enthusiasts, statisticians, and data scientists alike.

Steps to Create a Sports Performance Analytics System:

- Identify sources of sports data, such as APIs (e.g., ESPN)

- Clean the collected data and structure it into a database for easy access

- Use statistics to analyze the data and build predictive models

- Create visual representations of data and develop dashboards

- Automate the data collection and schedule to run updates at set intervals

- Test and validate the system thoroughly for reliability and effectiveness

- Deploy the system on a web server or cloud platform
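
The article does not prescribe a particular predictive model. One simple, widely used option for rating team strength is the Elo system, sketched here as an assumption rather than the recommended method; the starting ratings and the `k` factor of 20 are illustrative choices.

```python
def expected_score(rating_a, rating_b):
    """Probability-like expectation that team A beats team B under Elo."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


def update_ratings(rating_a, rating_b, score_a, k=20):
    """Return new ratings after a match; score_a is 1 (win), 0.5 (draw), or 0 (loss)."""
    exp_a = expected_score(rating_a, rating_b)
    change = k * (score_a - exp_a)
    # Whatever A gains, B loses, so the total rating pool stays constant.
    return rating_a + change, rating_b - change


# Two evenly rated teams; team A wins.
new_a, new_b = update_ratings(1500, 1500, score_a=1)
```

Feeding scraped match results through `update_ratings` in chronological order yields ratings that can then be plotted or compared in a dashboard.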

Must-Use Tools:

- Python: For all backend scripting

- BeautifulSoup: For web scraping

- Pandas/Numpy: For data manipulation and numerical calculations

- Scikit-learn/Statsmodels: For statistical modeling and machine learning

- Matplotlib/Seaborn/Plotly: For data visualization

- Plotly Dash/Streamlit: For building interactive web applications

- APScheduler/Celery: For task scheduling

- Flask/Django: For creating the application framework

- Heroku/AWS: For hosting the application

Idea 6: Creating a Grocery Price Comparison Tool to Maximize Savings

Develop an application that scrapes grocery data, especially prices, from various store websites, compares prices across these platforms, tracks discounts, and generates cost-effective shopping lists. It helps consumers make informed, budget-friendly shopping decisions.

Steps to Develop the Grocery Price Comparison Tool:

- Identify popular grocery websites

- Install Python and the necessary libraries

- Use scraping tools to extract data and handle complexities

- Clean and store the data for easy access and manipulation

- Develop algorithms to compare the same product prices across different stores

- Build a user-friendly interface and implement features like customizable alerts

- Deploy the application on the cloud and set up tasks for regular updates

- Conduct thorough testing to ensure the application is reliable and user-friendly
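
Comparing the same product across stores is only fair when pack sizes are normalized. The sketch below compares offers by price per 100 g; the `product`, `store`, `price`, and `grams` fields and the sample offers are assumptions made for illustration.

```python
def cheapest_per_product(offers):
    """Return the store with the lowest price per 100 g for each product."""
    best = {}
    for offer in offers:
        per_100g = offer["price"] / offer["grams"] * 100
        current = best.get(offer["product"])
        if current is None or per_100g < current[1]:
            best[offer["product"]] = (offer["store"], per_100g)
    # Round only at the end so comparisons use the exact values.
    return {
        product: {"store": store, "per_100g": round(cost, 2)}
        for product, (store, cost) in best.items()
    }


# Hypothetical scraped offers.
offers = [
    {"product": "rice", "store": "StoreA", "price": 2.00, "grams": 500},
    {"product": "rice", "store": "StoreB", "price": 3.50, "grams": 1000},
    {"product": "oats", "store": "StoreA", "price": 1.80, "grams": 450},
]
best_offers = cheapest_per_product(offers)
```

Here the larger bag from StoreB wins for rice even though its shelf price is higher.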

Must-Use Tools:

- Python: For scripting and backend logic

- BeautifulSoup: For web scraping

- Pandas: For data manipulation

- Flask/Django: For building the web application

- SQLite/PostgreSQL: For database management

- Celery: For managing periodic tasks and updates

- Bootstrap: For frontend design

- Heroku/AWS: For hosting the application

Idea 7: Building a Comprehensive Database for Video Games

Develop a video game database by scraping data from major online gaming platforms such as Steam and the Epic Games Store. Gamers can find detailed information about video games, including prices, reviews, ratings, release dates, and more, using this database.

Steps to Build a Comprehensive Video Game Database:

- Determine the specific data points to scrape, such as game titles

- Install Python and the relevant libraries for the project

- Develop scraping scripts to extract the required information from stores

- Clean and organize the data in a structured format

- Build a frontend interface and implement features like sorting

- Schedule your scraping scripts to run at regular intervals

- Deploy the application on a cloud platform to make it accessible to users worldwide

- Thoroughly test the application and launch the database publicly
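
Because the scraper runs repeatedly, the storage step should update existing rows rather than duplicate them. A minimal sketch using the standard library's `sqlite3` module follows; the table layout, the store names, and the game title are hypothetical.

```python
import sqlite3


def open_db(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS games (
               store TEXT NOT NULL,
               title TEXT NOT NULL,
               price_cents INTEGER,
               release_date TEXT,
               PRIMARY KEY (store, title)
           )"""
    )
    return conn


def save_games(conn, rows):
    """Insert rows, replacing any existing row with the same (store, title)."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO games (store, title, price_cents, release_date) "
            "VALUES (?, ?, ?, ?)",
            rows,
        )


def cheapest_first(conn, title_part):
    cursor = conn.execute(
        "SELECT store, title, price_cents FROM games "
        "WHERE title LIKE ? ORDER BY price_cents",
        (f"%{title_part}%",),
    )
    return cursor.fetchall()


conn = open_db()
save_games(conn, [
    ("StoreA", "Example Quest", 1999, "2024-01-15"),
    ("StoreB", "Example Quest", 1499, "2024-01-15"),
])
# A later scrape finds a sale at StoreA; the existing row is replaced.
save_games(conn, [("StoreA", "Example Quest", 999, "2024-01-15")])
```

Prices are stored as integer cents to avoid floating-point rounding issues when sorting or summing.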

Must-Use Tools:

- Python: Primary programming language

- BeautifulSoup/Scrapy: For scraping HTML and handling requests

- Flask/Django: For creating the web application backend

- SQLAlchemy/SQLite: For database management

- Bootstrap/Vue.js: For frontend development

- Celery: For managing periodic updates and background tasks

- Heroku/AWS: For hosting the application

Idea 8: Building a Lead Generation System to Connect with Potential Customers

Create a lead generation system by extracting emails and phone numbers from online forums for marketing purposes, ensuring compliance with all relevant data protection laws and regulations.

Steps to Build the Lead Generation System:

- Set up a virtual environment to manage project dependencies

- Choose libraries that can handle the complexities of web pages

- Use regex or HTML libraries to extract emails and phone numbers

- Validate the extracted data and remove duplicates

- Store the data securely

- Build a simple interface for users to interact with the data

- Test, deploy, and monitor the system thoroughly
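
The extraction, validation, and de-duplication steps can be sketched with regular expressions. The patterns below are deliberately simple assumptions: the phone pattern only covers US-style 10-digit numbers, and the sample text is made up. As the article notes, run this only on data you are permitted to process.

```python
import re

# Simplified patterns for illustration; real-world formats vary widely.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\(?\b\d{3}\)?[-. ]\d{3}[-. ]\d{4}\b")


def extract_contacts(text):
    """Return de-duplicated, normalized emails and phone numbers found in text."""
    # Lowercasing emails and stripping non-digits from phones makes
    # differently formatted duplicates collapse into one entry.
    emails = {match.lower() for match in EMAIL_RE.findall(text)}
    phones = {re.sub(r"\D", "", match) for match in PHONE_RE.findall(text)}
    return {"emails": sorted(emails), "phones": sorted(phones)}


sample = (
    "Contact Jane at Jane.Doe@example.com or (555) 010-1234. "
    "Sales: jane.doe@example.com, 555.010.1234"
)
contacts = extract_contacts(sample)
```

Both spellings of the email address and both phone formats collapse into a single lead.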

Must-Use Tools:

- Python: For all scripting and automation

- BeautifulSoup: For web scraping

- Regular Expressions (Regex): For pattern matching in text extraction

- Pandas: For data manipulation and cleaning

- SQLite/PostgreSQL: For secure data storage

- Flask/Django: For creating the application interface

- SSL/TLS: For data encryption and secure connections

Idea 9: Developing a Hotel Pricing Intelligence System by Scraping TripAdvisor

Create a hotel pricing analytics tool by scraping TripAdvisor, which offers insights for travelers, hotel managers, and industry analysts. This system helps to track hotel pricing trends, compare rates across different regions, and analyze pricing strategies during various seasons.

Steps to Build the Hotel Pricing Intelligence System:

- Set up your Python environment

- Scrape hotel data, such as hotel names, prices, and locations, from TripAdvisor

- Clean the extracted data and store it in a structured format

- Analyze the data for pricing patterns and geographical differences

- Develop a user-friendly interface and implement features like customized reports

- Schedule your scraping scripts to run at regular intervals

- Test the system extensively and deploy the application to a cloud platform
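
To analyze pricing across seasons and regions, scraped rate observations can be grouped before averaging. The sketch below assumes Northern Hemisphere meteorological seasons and hypothetical `region`, `date`, and `rate` fields.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

# Assumption: Northern Hemisphere meteorological seasons.
SEASON_BY_MONTH = {
    12: "winter", 1: "winter", 2: "winter",
    3: "spring", 4: "spring", 5: "spring",
    6: "summer", 7: "summer", 8: "summer",
    9: "autumn", 10: "autumn", 11: "autumn",
}


def average_rate_by_season(observations):
    """Average nightly rate for each (region, season) pair."""
    buckets = defaultdict(list)
    for obs in observations:
        season = SEASON_BY_MONTH[obs["date"].month]
        buckets[(obs["region"], season)].append(obs["rate"])
    return {key: round(mean(rates), 2) for key, rates in buckets.items()}


# Hypothetical observations collected by the scheduled scraper.
observations = [
    {"region": "Coast", "date": date(2024, 7, 10), "rate": 200},
    {"region": "Coast", "date": date(2024, 8, 2), "rate": 240},
    {"region": "Coast", "date": date(2024, 1, 15), "rate": 120},
]
averages = average_rate_by_season(observations)
```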

Must-Use Tools:

- Python: For scripting and backend logic

- BeautifulSoup: For web scraping

- Pandas/Numpy: For data manipulation and analysis

- Matplotlib/Seaborn: For data visualization

- Flask/Django: For building the web application

- SQLAlchemy/PostgreSQL: For database management

- Heroku/AWS: For hosting the application

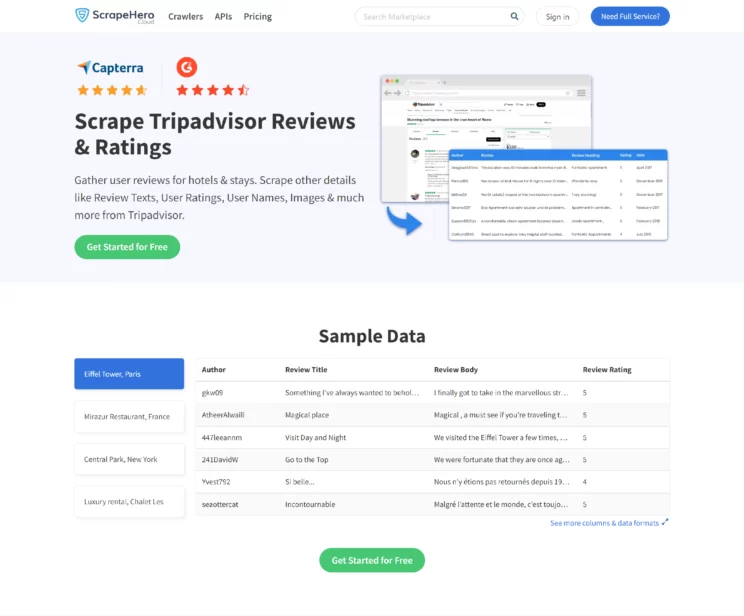

An Alternative to Scrape TripAdvisor Data: ScrapeHero TripAdvisor Scraper

To scrape details from TripAdvisor, specifically the reviews, you can use the ScrapeHero TripAdvisor Scraper from ScrapeHero Cloud. Using this scraper, you can also gather details like review texts, user ratings, and much more. These prebuilt scrapers are easy to use, free of charge for the initial 25 credits, and require no coding on your side.

Idea 10: Building a System for Political Sentiment Analysis on Twitter

Build a system that collects and analyzes Twitter posts carrying specific hashtags related to political parties. This system can analyze the sentiments of U.S. citizens towards each party and identify trends in public opinion.

Steps to Build the Political Sentiment Analysis System:

- Set up your Python environment for accessing Twitter

- Access Twitter data using APIs

- Extract relevant data from tweets and clean the text data

- Employ natural language processing (NLP) tools for sentiment analysis

- Aggregate and visualize sentiment data to identify trends

- Build a web interface where users can view sentiment analysis results

- Set up a scheduler to run your data collection and analysis periodically

- Deploy the system to a server and update the application as necessary
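
The sentiment and aggregation steps can be illustrated with a toy lexicon-based scorer. This is a stand-in for VADER or TextBlob, not a replacement: the six-word lexicon, the hashtags, and the sample posts are all assumptions, and the posts are presumed to have been fetched already.

```python
import re
from collections import defaultdict

# Toy lexicon for illustration only; a real project would use VADER or TextBlob.
LEXICON = {"great": 1, "good": 1, "love": 1, "bad": -1, "terrible": -1, "hate": -1}


def score_text(text):
    """Sum the lexicon values of every word in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(word, 0) for word in words)


def label(score):
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


def sentiment_by_hashtag(posts):
    """Count positive, negative, and neutral posts for each hashtag."""
    counts = defaultdict(lambda: {"positive": 0, "negative": 0, "neutral": 0})
    for post in posts:
        verdict = label(score_text(post))
        for tag in {t.lower() for t in re.findall(r"#(\w+)", post)}:
            counts[tag][verdict] += 1
    return dict(counts)


# Hypothetical posts.
posts = [
    "I love the new plan #PartyA",
    "Terrible debate, bad answers #PartyA",
    "Town hall tonight #PartyB",
]
summary = sentiment_by_hashtag(posts)
```

The per-hashtag counts are the kind of aggregate that a visualization library can turn into trend charts.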

Must-Use Tools:

- Python: For all backend scripting

- Tweepy: For interfacing with the Twitter API

- NLTK/spaCy: For natural language processing

- TextBlob/VADER: For sentiment analysis

- Pandas: For data manipulation

- Matplotlib/Seaborn/Plotly: For data visualization

- Flask/Django: For web application development

- Heroku/AWS: For deployment

Idea 11: Creating an Automated Product Price Comparison Tool To Find the Best Deals

Build an automated product price comparison system for consumers by scraping data from various e-commerce websites. This system helps users find the best deals online by comparing the prices of similar products across different platforms in real time.

Steps to Build the Automated Product Price Comparison Tool:

- Select some popular e-commerce websites to scrape

- Install Python and the necessary libraries for web scraping

- Clean the extracted data and organize it in a structured way

- Develop algorithms to match products and compare prices across websites

- Develop a user-friendly interface with filters for refined searches

- Schedule scraping tasks to update the product data regularly

- Test the application extensively, and deploy the system on a cloud server
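
Matching the same product across websites is the hard part, because titles are rarely identical. One possible approach, sketched here as an assumption, is to normalize titles and compare them with `difflib.SequenceMatcher`; the 0.8 threshold and the sample products are illustrative choices that would need tuning on real data.

```python
import re
from difflib import SequenceMatcher


def normalize(title):
    """Lowercase, drop punctuation, and sort words so word order does not matter."""
    return " ".join(sorted(re.findall(r"[a-z0-9]+", title.lower())))


def similarity(a, b):
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def best_price(target, offers, threshold=0.8):
    """Return the cheapest offer whose title is similar enough to the target."""
    matches = [o for o in offers if similarity(target, o["title"]) >= threshold]
    return min(matches, key=lambda o: o["price"]) if matches else None


# Hypothetical offers from three sites.
offers = [
    {"site": "shop-a", "title": "ACME M100 Wireless Mouse", "price": 24.99},
    {"site": "shop-b", "title": "Acme Wireless Mouse, M100", "price": 21.50},
    {"site": "shop-c", "title": "Acme USB Keyboard K200", "price": 9.99},
]
deal = best_price("Acme Wireless Mouse M100", offers)
```

The keyboard is the cheapest item overall but is ignored because its title falls below the similarity threshold.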

Must-Use Tools:

- Python: Primary programming language for scripting

- BeautifulSoup: For web scraping tasks

- Pandas: For data manipulation and cleaning

- Flask/Django: For building the web application

- SQLAlchemy/SQLite/PostgreSQL: For database management

- Celery: For managing periodic scraping tasks

- Heroku/AWS: For hosting the application

- Bootstrap: For frontend design

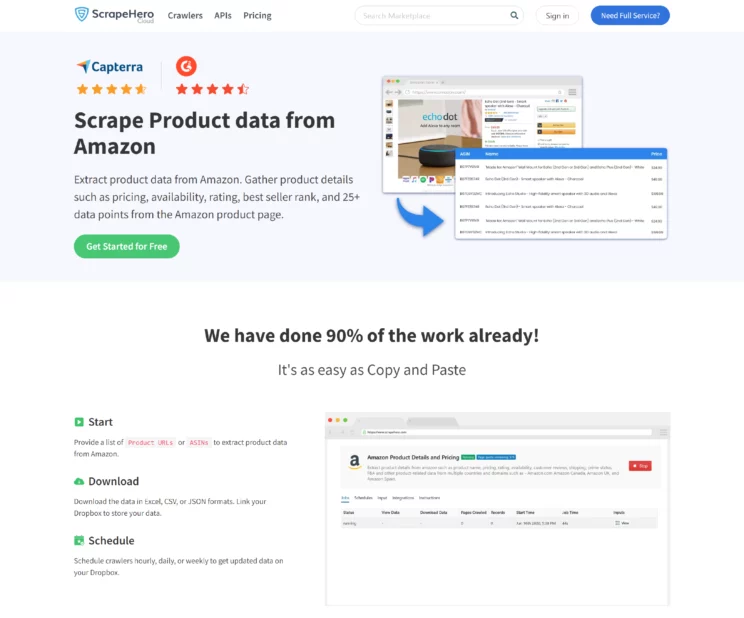

An Alternative to Scrape Amazon: ScrapeHero Amazon Product Details and Pricing Scraper

If you want to scrape Amazon for product data such as pricing, availability, rating, best seller rank, and other data points, then you can consider using ScrapeHero Amazon Product Details and Pricing Scraper. Our prebuilt scrapers are very cost-effective and reliable, offering a no-code approach to users without extensive technical knowledge.

Idea 12: Developing a Comprehensive Learning Resource Aggregator for Students

Create a platform for students and lifelong learners to aggregate educational materials from different online sources. This way, users can find content on a single organized platform categorized by subject, difficulty level, and learning style to suit their needs.

Steps to Build the Learning Resource Aggregator:

- Compile a list of educational websites and online course platforms

- Set up your Python environment

- Use web scraping libraries to extract relevant information, like course titles

- Clean and organize the data and remove any inconsistencies

- Implement algorithms to automatically categorize content

- Build a user-friendly interface and incorporate different features

- Set up automated scraping processes to regularly update the database

- Test the platform and deploy it on a cloud service
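
Automatic categorization can start with simple keyword rules before moving to anything more sophisticated. The keyword sets, subject names, and course titles below are hypothetical; a production system would likely need a richer taxonomy or a trained classifier.

```python
import re

# Hypothetical keyword sets for illustration.
SUBJECT_KEYWORDS = {
    "programming": {"python", "javascript", "algorithms", "coding"},
    "mathematics": {"algebra", "calculus", "statistics", "geometry"},
    "languages": {"spanish", "french", "grammar", "vocabulary"},
}
LEVEL_KEYWORDS = {
    "beginner": {"intro", "introduction", "beginner", "basics"},
    "advanced": {"advanced", "expert"},
}


def categorize(title):
    """Assign a subject and difficulty level based on keywords in the title."""
    words = set(re.findall(r"[a-z]+", title.lower()))
    # Pick the subject with the most keyword hits, if there are any hits at all.
    subject = max(SUBJECT_KEYWORDS, key=lambda s: len(words & SUBJECT_KEYWORDS[s]))
    if not words & SUBJECT_KEYWORDS[subject]:
        subject = "uncategorized"
    level = next(
        (lvl for lvl, keywords in LEVEL_KEYWORDS.items() if words & keywords),
        "unspecified",
    )
    return {"subject": subject, "level": level}


result = categorize("Introduction to Python Coding")
```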

Must-Use Tools:

- Python: For all backend scripting

- BeautifulSoup: For web scraping

- Pandas: For data manipulation

- Flask/Django: For building the web application

- SQLAlchemy/SQLite/PostgreSQL: For database management

- Celery: For managing periodic tasks

- Bootstrap: For frontend design

- Heroku/AWS: For hosting the application

Idea 13: Building a Cultural Events Insight Tool to Understand the Trends

Build an application to scrape and analyze data on cultural events, art exhibitions, and public lectures from various cultural websites. The application can provide insightful trends in cultural engagement, which can ultimately help event organizers tailor their services.

Steps to Build the Cultural Events Insight Tool:

- Identify the websites of cultural institutions, museums, and similar venues

- Install Python and the necessary libraries for web scraping

- Use web scraping tools to extract detailed information about events

- Clean and store the data for easy access and analysis

- Analyze the data for cultural trends and utilize visualization tools

- Develop a recommendation system based on the analysis

- Build a user-friendly web interface where users can interact with the data

- Schedule regular updates to the scraping scripts

- Thoroughly test and deploy the application
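
A first pass at trend analysis is counting events per month and category. This sketch assumes event dates have been scraped as ISO strings (`YYYY-MM-DD`) and that each event carries a `category` field; both are assumptions, as are the sample events.

```python
from collections import Counter
from datetime import datetime


def events_per_month(events, date_format="%Y-%m-%d"):
    """Count events for each (year-month, category) pair."""
    counts = Counter()
    for event in events:
        try:
            when = datetime.strptime(event["date"], date_format)
        except ValueError:
            continue  # skip dates that do not match the expected format
        counts[(when.strftime("%Y-%m"), event["category"])] += 1
    return counts


# Hypothetical scraped events; one has no confirmed date yet.
events = [
    {"date": "2024-03-12", "category": "exhibition"},
    {"date": "2024-03-28", "category": "exhibition"},
    {"date": "2024-04-05", "category": "lecture"},
    {"date": "TBA", "category": "lecture"},
]
trend = events_per_month(events)
```

The resulting counts can be passed to a plotting library to show how each category rises or falls over time.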

Must-Use Tools:

- Python: For scripting and backend operations

- BeautifulSoup: For web scraping

- Pandas: For data manipulation

- Matplotlib/Seaborn/Plotly: For data visualization

- Flask/Django: For web application development

- SQLAlchemy/SQLite/PostgreSQL: For database management

- Celery: For handling periodic tasks

- Heroku/AWS: For hosting the application

Idea 14: Building a Personalized Movie and TV Show Recommendation Engine

Create a personalized movie and TV show recommendation tool in Python by extracting data from websites like IMDb and Rotten Tomatoes. This engine should enhance users' viewing experience by suggesting content aligned with their preferences and past reviews.

Steps to Build the Movie and TV Show Recommendation Engine:

- Gather data like movie reviews and user ratings from IMDb and Rotten Tomatoes

- Set up your Python environment

- Extract relevant information such as movie titles, ratings, reviews, and metadata

- Clean the data and organize it into a structured format for analysis

- Implement machine learning for personalized recommendation generation

- Develop a system for users to create profiles and rate movies

- Create a user-friendly web interface, integrating different features

- Thoroughly test the system and deploy the recommendation engine on the cloud
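
The article leaves the choice of recommendation algorithm open. One common starting point is user-based collaborative filtering with cosine similarity, sketched below in plain Python as an assumption; libraries such as Surprise offer more robust versions. The users, titles, and ratings are made up.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine similarity between two {title: rating} dicts."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[title] * b[title] for title in shared)
    norm_a = sqrt(sum(value * value for value in a.values()))
    norm_b = sqrt(sum(value * value for value in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user, ratings, top_n=3):
    """Rank unseen titles by the similarity-weighted ratings of other users."""
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        if sim <= 0:
            continue
        for title, rating in other_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0) + sim * rating
                weights[title] = weights.get(title, 0) + sim
    predicted = {title: scores[title] / weights[title] for title in scores}
    return sorted(predicted, key=predicted.get, reverse=True)[:top_n]


# Hypothetical ratings on a 1 to 5 scale.
ratings = {
    "ana": {"Film A": 5, "Film B": 4},
    "ben": {"Film A": 5, "Film B": 4, "Film C": 5},
    "cat": {"Film A": 1, "Film D": 3},
}
suggestions = recommend("ana", ratings)
```

Ben's tastes closely match Ana's, so the film he rated highly is suggested first.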

Must-Use Tools:

- Python: For all backend development

- BeautifulSoup: For web scraping

- Pandas/Numpy: For data manipulation

- Scikit-Learn/Surprise: For machine learning and recommendation algorithms

- Flask/Django: For web application development

- SQLAlchemy/PostgreSQL: For database management

- Heroku/AWS: For deployment

Idea 15: Building a Personalized Global News Aggregator for News Updates

Develop a customized news aggregator that scrapes articles from multiple international sources. This system also categorizes content by topic or region and offers a personalized news feed to users based on their preferences.

Steps to Build a Personalized Global News Aggregator:

- Identify relevant news sources

- Install Python and the necessary libraries

- Use web scraping libraries to extract news articles and handle dynamic content

- Clean and organize the scraped data

- Implement a system for user profiles and news preference selection

- Develop an algorithm to filter and rank news articles

- Design a user-friendly web interface for both desktop and mobile users

- Set up scheduled tasks to scrape new articles periodically

- Conduct thorough testing and deploy the application on a cloud platform
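
The filtering and ranking step can combine recency with user preferences. The scoring rule below is one possible design, not a prescribed one: the 24-hour half-life, the 2x boost for preferred topics, and the sample articles are all assumptions.

```python
from datetime import datetime, timedelta, timezone


def score_article(article, preferred_topics, now, half_life_hours=24):
    """Score decays by half every `half_life_hours`; preferred topics count double."""
    age_hours = (now - article["published"]).total_seconds() / 3600
    recency = 0.5 ** (age_hours / half_life_hours)
    topic_boost = 2.0 if article["topic"] in preferred_topics else 1.0
    return recency * topic_boost


def personalized_feed(articles, preferred_topics, now, limit=10):
    ranked = sorted(
        articles,
        key=lambda a: score_article(a, preferred_topics, now),
        reverse=True,
    )
    return [a["title"] for a in ranked[:limit]]


now = datetime(2024, 1, 2, 12, tzinfo=timezone.utc)
# Hypothetical scraped articles.
articles = [
    {"title": "Markets open higher", "topic": "business", "published": now - timedelta(hours=2)},
    {"title": "Local team wins final", "topic": "sports", "published": now - timedelta(hours=12)},
    {"title": "Old science story", "topic": "science", "published": now - timedelta(hours=72)},
]
feed = personalized_feed(articles, {"sports"}, now)
```

A sports fan sees the 12-hour-old sports story ahead of the fresher business story, while a user with no preferences gets a purely chronological ranking.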

Must-Use Tools:

- Python: For scripting and backend operations

- BeautifulSoup: For web scraping

- Pandas: For data manipulation

- Flask/Django: For building the web application

- SQLAlchemy/SQLite/PostgreSQL: For database management

- Celery: For managing periodic scraping tasks

- Bootstrap: For frontend design

- Heroku/AWS: For deployment

An Alternative to Scrape News: ScrapeHero News API

ScrapeHero News API allows you to extract data from websites, automate business operations with RPA, and boost internal apps and workflows with online data integration. ScrapeHero APIs ensure data accuracy and reliability with minimal setup, avoiding complex coding.

Wrapping Up

Web scraping opens the door for many innovative projects. Whether you want to enhance your market research or conduct a competitive analysis, you can explore numerous web scraping possibilities with the right tools. The 15 web scraping projects using Python listed in this article will assist you in starting your scraping journey effortlessly.

However, navigating anti-scraping measures is crucial when it comes to web scraping. With a decade of expertise in overcoming these challenges, ScrapeHero can provide custom solutions across various industries.

ScrapeHero web scraping services have been catering to global clients over the years, ensuring the highest ethical and legal standards.

Frequently Asked Questions

A real-life example of web scraping is travel booking sites, which use this method to gather real-time data on flight prices, hotel rates, and availability from various airline and hotel websites.

To start a web scraping project, understand your requirements. Then, identify the target websites and choose the necessary tools to scrape. Set up your environment and begin coding your scraper.

The best tool for web scraping depends on your project’s needs. Python libraries like BeautifulSoup and Requests are widely used for their flexibility.

Also, consider using ScrapeHero Cloud, as this is a better alternative for your scraping needs. You will get access to easy-to-use scrapers and real-time APIs, which are affordable, fast, and reliable. They offer a no-code approach to users without extensive technical knowledge.
