Web crawling is crucial for search engines to gather data and navigate the web effectively.

However, the crawling process may often encounter specific challenges covering technical setups to strategic implementation.

This article will discuss some of the significant web crawling challenges. You can also read in detail about the solutions to overcome these challenges.

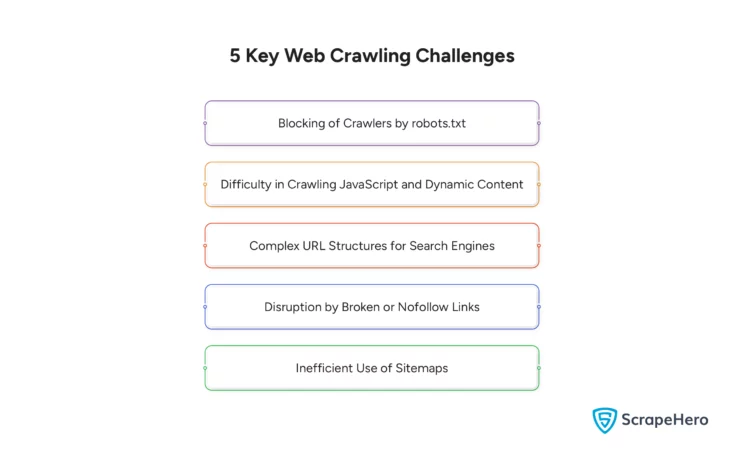

What Are the Common Web Crawling Challenges?

Before moving to the challenges of web crawling, you need to have a deeper understanding of how crawling differs from scraping.

For a better understanding read our complete guide on web crawling with Python.

Due to the diverse and dynamic nature of the websites, web crawlers face numerous challenges that complicate their data collection efforts.

Here are some of the significant web crawler issues:

- Blocking of Crawlers by robots.txt

- Difficulty in Crawling JavaScript and Dynamic Content

- Complex URL Structures for Search Engines

- Disruption by Broken or Nofollow Links

- Inefficient Use of Sitemaps

1. Blocking of Crawlers by robots.txt

Websites can block crawlers using the robots.txt file to prevent overloading their servers and protect sensitive data.

This blocking can hinder crawlers’ data collection by preventing them from visiting important pages and indexing.

2. Difficulty in Crawling JavaScript and Dynamic Content

Many websites use JavaScript to load content dynamically. This creates difficulty for crawlers, resulting in incomplete website indexing.

The web crawler may miss the content that only loads in response to user interactions or as part of a script’s execution.

3. Complex URL Structures for Search Engines

Crawlers often need clarification on websites with complex URL structures. These may include websites with extensive query parameters or session IDs.

Such URLs lead to issues like duplicate content and crawler traps, where the crawler gets stuck in an infinite loop, affecting search engines’ ability to crawl a site effectively.

4. Disruption by Broken or Nofollow Links

Broken links result in 404 errors, and the links marked with “nofollow” tell crawlers not to follow the linked page.

Such links waste crawler resources and negatively impact the user experience, disrupting the structure and flow of crawling.

5. Inefficient Use of Sitemaps

Sitemaps help search engines discover and index new and updated pages. Outdated or poorly structured sitemaps can lead to poor indexation.

These delays in page indexing or the omission of pages from search engine indexes affect a website’s visibility and search performance.

Solutions to Web Crawling Challenges

Addressing the issues mentioned is critical to enhancing a website’s crawlability. Here are some potential strategies for overcoming these challenges.

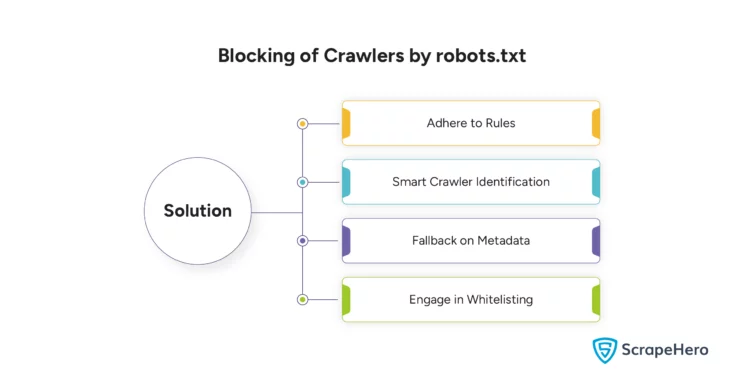

1. Solution for the Challenge: Blocking of Crawlers by robots.txt

There is a need to navigate robots.txt restrictions imposed by websites.

Consider the following solutions to overcome robots.txt restrictions:

- Adhere to Rules

- Smart Crawler Identification

- Fallback on Metadata

- Engage in Whitelisting

-

Adhere to Rules

Always respect the restrictions set by robots.txt to avoid the blocking of crawlers.

-

Smart Crawler Identification

The crawler user-agent should be correctly identified. If websites can categorize the crawler accurately, they may relax the rules.

-

Fallback on Metadata

Extract the information from the titles and meta descriptions found in the HTML head of links when there is a block.

-

Engage in Whitelisting

Get the crawler whitelisted, working with website administrators to get the mutual benefits of proper indexing.

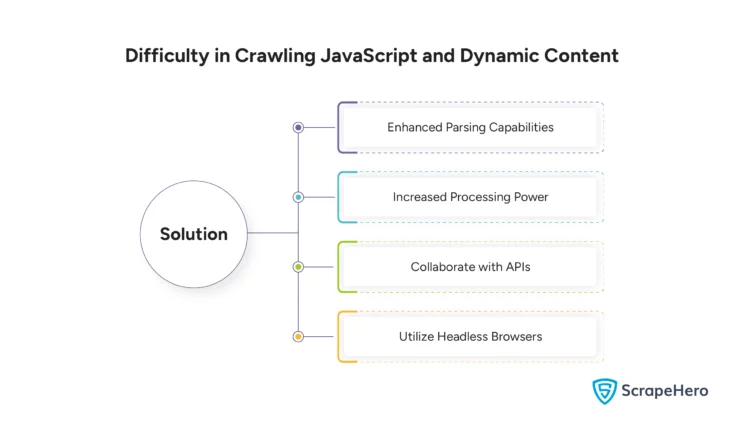

2. Solution for the Challenge: Difficulty in Crawling JavaScript and Dynamic Content

The difficulties encountered by the web crawler when crawling JavaScript and dynamic content need to be resolved.

Consider the following solutions when there is a difficulty in crawling JavaScript and dynamic content:

- Enhanced Parsing Capabilities

- Increased Processing Power

- Collaborate with APIs

- Utilize Headless Browsers

-

Enhanced Parsing Capabilities

Develop capabilities for the crawler so that it can execute and render JavaScript like modern browsers.

-

Increased Processing Power

Investing in more computational power to render complex web applications can reduce crawling issues.

-

Collaborate with APIs

Utilize APIs to fetch data directly instead of relying on HTML content whenever possible.

You can use ScrapeHero APIs to extract data from web pages and automate your business processes using RPA. -

Utilize Headless Browsers

Use headless browsers in the crawling process to extract dynamically generated content.

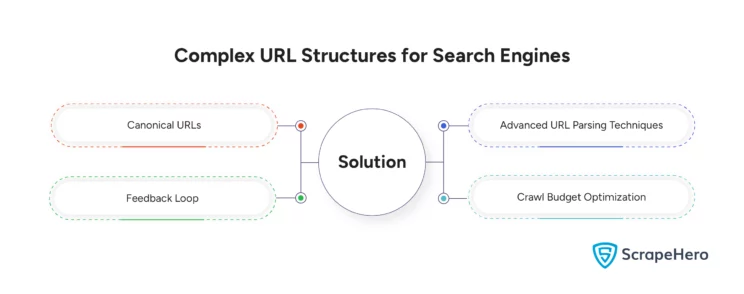

3. Solution for the Challenge: Complex URL Structures for Search Engines

Simplify the URL structures to ease the functioning of search engines.

Here are some solutions to deal with the challenge of complex URL structures for search engines:

-

Canonical URLs

Identify and prioritize canonical URLs to avoid duplication and reduce the crawling of redundant links.

-

Advanced URL Parsing Techniques

Implement sophisticated algorithms to adjust the crawling strategy based on URL patterns.

-

Feedback Loop

Analyze the success rate of URL crawling using machine learning and refine the approaches based on historical data.

-

Crawl Budget Optimization

Prioritize frequently updated URLs with higher value to manage the crawl budget more effectively.

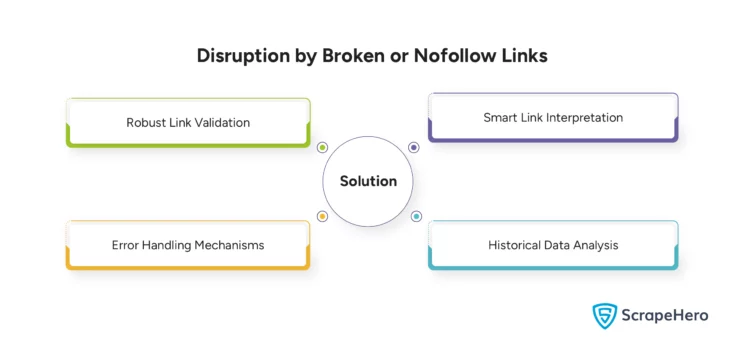

4. Solution for the Challenge: Disruption by Broken or Nofollow Links

Resolving the issues related to broken and nofollow links is essential.

You can solve the issue that occurs due to the broken or nofollow links by:

- Robust Link Validation

- Smart Link Interpretation

- Error Handling Mechanisms

- Historical Data Analysis

-

Robust Link Validation

Conserve resources by regularly checking, identifying, and skipping broken links.

-

Smart Link Interpretation

Know when to respect nofollow attributes based on the context and potential value of the linked content.

-

Error Handling Mechanisms

Manage and log issues related to broken links by implementing advanced error handling.

-

Historical Data Analysis

Adjust crawl strategies. Use historical data and focus on more reliable sources.

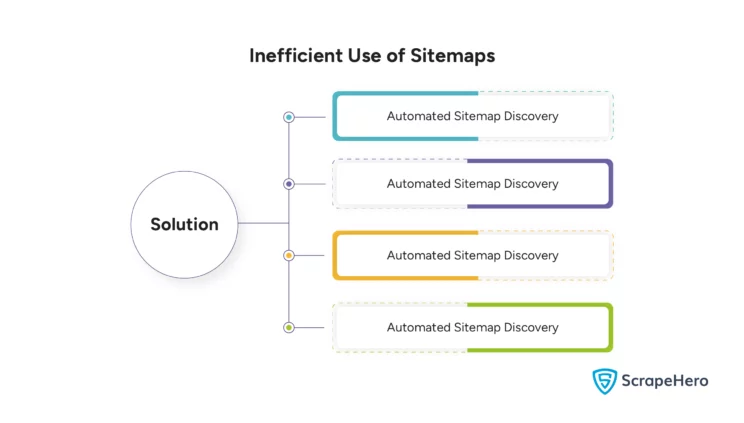

5. Solution for the Challenge: Inefficient Use of Sitemaps

Inefficient use of sitemaps may create a challenge for web crawlers.

To overcome the inefficient use of sitemaps, you need to maximize sitemap effectiveness. Consider the following:

- Automated Sitemap Discovery

- Dynamic Sitemap Monitoring

- Content Prioritization

- Integration of Sitemap Data

-

Automated Sitemap Discovery

The crawlers should develop capabilities to discover automatically and parse sitemaps from websites, even if they are not linked directly from the robots.txt.

-

Dynamic Sitemap Monitoring

Allow immediate reaction to newly listed or removed URLs by continuously monitoring sitemap files for changes.

-

Content Prioritization

For the newly added content, use sitemaps to prioritize the crawling and index it first.

-

Integration of Sitemap Data

To create a comprehensive understanding of site structure, integrate sitemap data with other crawl data sources.

Alternative Solutions To Deal With Common Web Crawler Challenges

The strategies discussed earlier can help a web crawler navigate and adapt to the web’s dynamic and complex nature.

However, a better alternative ensures more effective and efficient data collection: ScrapeHero web crawlers and ScrapeHero web crawling services.

1. Use ScrapeHero Web Crawlers

From handling JavaScript-heavy websites to overcoming IP blocks and rate limits, web crawlers encounter numerous challenges when crawling websites.

These challenges often affect the accuracy of data extraction.

ScrapeHero Crawlers, with their unique features, simplify the process and ensure data extraction accuracy, allowing you to download the data in various formats.

With no dedicated teams or hosting infrastructure, you can fetch the data according to your requirements, ultimately saving time and money.

Here’s how you use ScrapeHero Crawlers:

- Go to ScrapeHero Cloud and create an account.

- Search for any ScrapeHero crawler of your choice

- Add the crawler to your crawler list

- Add the search URLs or keywords to the input

- Click gather data

For instance, you can use our Google Maps crawler to extract local business information.

You can get a spreadsheet with a complete list of business names, phone numbers, address, website, rating, and more.

You can read the article on scraping Google Maps to learn the process in detail.

2. Consult ScrapeHero for Web Crawling Services

If your requirements are much larger, we recommend that you outsource ScrapeHero web crawling services for enterprises so that you can focus on your business.

Regarding large-scale data extraction, enterprises based on something other than data might need the infrastructure to set up servers or build an in-house team.

ScrapeHero web crawling services are specifically designed to meet the needs of enterprises that need custom crawlers to ensure ethical compliance with website policies and legality.

Businesses can rely on us to meet their large-scale web crawling needs effectively and efficiently.

Wrapping Up

Implementing specific solutions is necessary to overcome the challenges in web crawling and improve a site’s SEO performance.

As a custom solutions and services provider, ScrapeHero can resolve the issues of web crawling and fulfill our customers’ data needs.

Our industry experts can help other companies deal quickly and efficiently with customer requirements and provide meaningful, structured, and usable data to them.

Frequently Asked Questions

The challenges of large-scale web crawling include handling massive data volumes, dealing with dynamically loaded content, and managing IP blocking and rate limiting by servers.

The limitations of web crawlers include being restricted by robots.txt, complex URLs, and dynamic content, which can cause overloads.

Crawling a website is difficult due to the dynamic nature of content or broken or nofollow links.

To improve the issues of web crawling, enhance JavaScript rendering capabilities, simplify URL parsing, regularly update and use sitemaps, and optimize the handling of server responses and errors.

You can check crawl issues using tools like Google Search Console. It reports crawl errors, blocked resources, and overall site health.

Effective web crawler implementation involves:

- Respecting robots.txt rules

- Optimizing for JavaScript content

- Simplifying URL parsing

- Handling broken links smartly

Using a web crawler is not inherently illegal. However, if the crawler violates the website’s terms of service or involves unauthorized access to data, it may be subject to jurisdiction.

Read our article on this topic to gain a more in-depth understanding of the legality of web crawling.

Some open-source web crawling tools and frameworks include Apache Nutch, Heritrix, etc.

Read our article, Best Web Crawling Tools and Frameworks, to get the complete list.

We can help with your data or automation needs

Turn the Internet into meaningful, structured and usable data