How important is data manipulation in web scraping? Have you ever given it a thought?

In fact, effective data manipulation is a necessity for data professionals. It’s the cornerstone of processing, cleaning, and analyzing data.

As you know, Python has a rich ecosystem of libraries, including the ones that can manipulate datasets to improve the data workflow by serving unique purposes.

This article discusses the top 10 essential data manipulation libraries used in Python.

List of Python Libraries for Data Manipulation

These 10 libraries are considered the best Python data manipulation libraries used by data professionals to manipulate and analyze data efficiently.

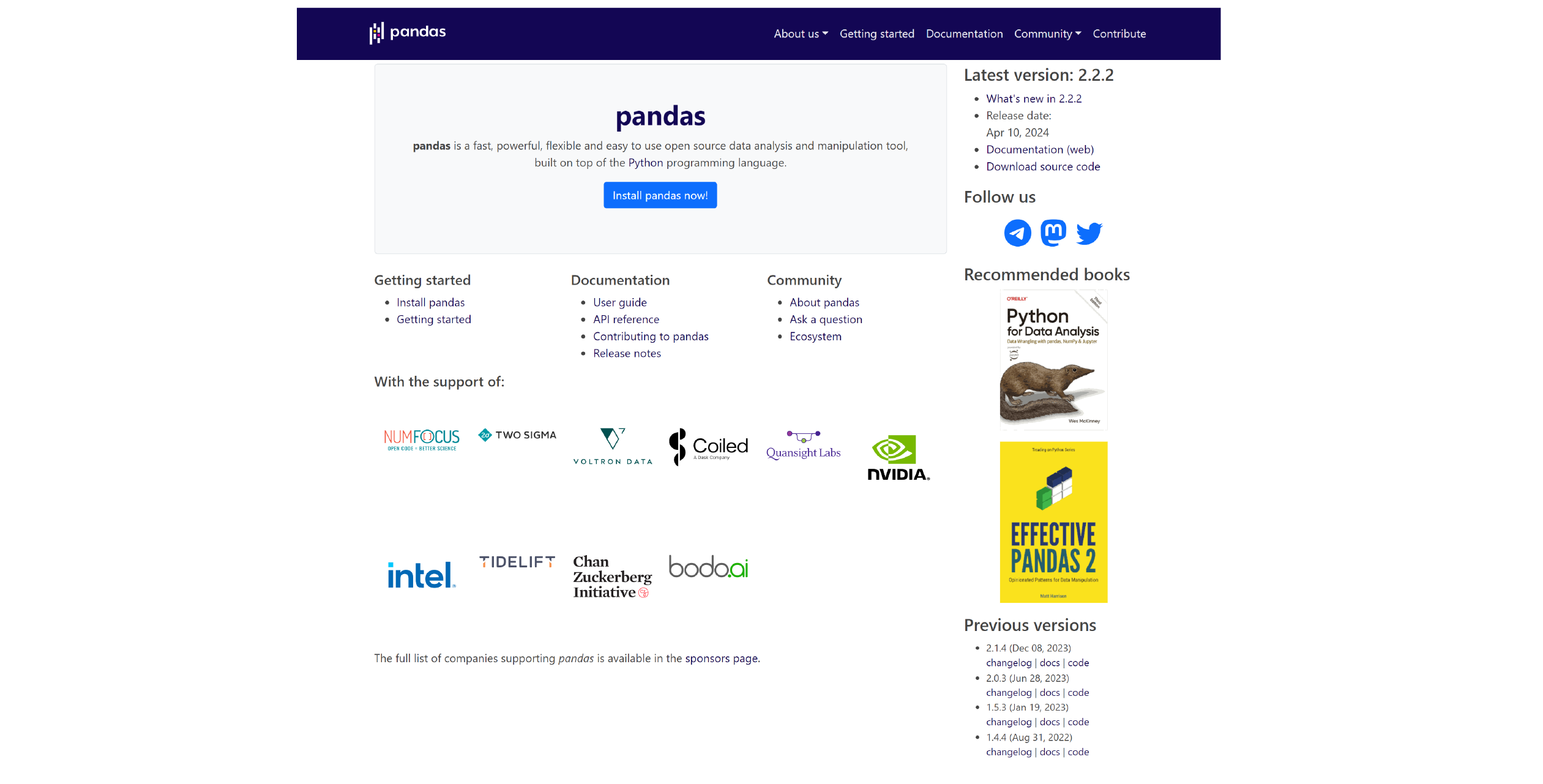

1. Pandas

Pandas is a flexible, open-source Python data manipulation and analysis library. It provides data structures like DataFrames and other functions essential for manipulating structured data.

Features

- It offers DataFrame and Series data structures

- It can easily handle missing data

- It has tools for input/output data from various formats (CSV, Excel, SQL, etc.)

- It handles time series data

Use Cases

- Data cleaning and preparation

- Statistical analysis

- Data visualization

- Time series analysis

Do you know that Python has a variety of data visualization libraries that can handle scraped data to create aesthetic and complex visualizations?

Read about the 10 best Python data visualization libraries in our article.

Pros

- It has an intuitive and easy-to-use syntax

- It has a comprehensive documentation

- It has extensive community support

- It can integrate well with other data analysis libraries

Cons

- It has limitations in handling massive datasets

- It consumes high memory

You can search for direct, ready-to-use POI location data, which is accurate, updated, and affordable, from the ScrapeHero data store without concern about handling massive datasets.

Example Usage Code

import pandas as pd

# Load data

df = pd.read_csv('data.csv')

# Display first 5 rows

print(df.head())

# Calculate summary statistics

print(df.describe())

# Filter data

filtered_df = df[df['column'] > 10]

# Group by and aggregate

grouped_df = df.groupby('category').sum()

print(grouped_df)

Pandas is an excellent choice for data manipulation. But do you know that it can also be used for web scraping?

Yes. You can scrape tabular data with Pandas. To find out how, read our article on scraping websites using Pandas.

2. NumPy

NumPy is an essential library for scientific computing with Python. It supports arrays, matrices, and many mathematical functions to operate on data structures.

Features

- It has multidimensional array objects (ndarray)

- It provides mathematical functions for linear algebra, statistics, etc.

- It supports random number generation

- It provides tools for integrating with C/C++ and Fortran code

Use Cases

- Numerical computations

- Linear algebra operations

- Statistical analysis

- Signal processing

Pros

- It has high performance due to vectorization

- It acts as a core library for many other scientific computing packages

- It has extensive documentation and community support

Cons

- It lacks higher-level data manipulation capabilities when compared to Pandas

Example Usage Code

import numpy as np

# Create array

arr = np.array([1, 2, 3, 4, 5])

# Perform operations

print(arr + 5)

print(np.mean(arr))

# Matrix operations

matrix = np.array([[1, 2], [3, 4]])

print(np.linalg.inv(matrix))

3. Dask

Dask is a parallel computing library used for analytics. It can provide scalable data manipulation by integrating Pandas and NumPy scaling Python code from laptops to large clusters.

Features

- It has parallel and distributed computation

- It can scale up to large datasets and clusters

- It has an interface similar to Pandas

- It supports real-time task scheduling

Use Cases

- Processing large datasets that don’t fit into memory

- Real-time data analysis

- Distributed computing

Pros

- It can scale computations across multiple cores or clusters

- It integrates well with existing Pandas and NumPy code

- It provides lazy evaluation, optimizing performance

Cons

- It may give a steeper learning curve for beginners

- For small datasets, it can can take up a lot of time and resources for task scheduling

Example Usage Code

import dask.dataframe as dd

# Load data

df = dd.read_csv('large_data.csv')

# Compute summary statistics

print(df.describe().compute())

# Filter data

filtered_df = df[df['column'] > 10].compute()

# Group by and aggregate

grouped_df = df.groupby('category').sum().compute()

print(grouped_df)

4. Polars

Polars is one of the best data manipulation libraries, implemented in Rust and Python. It is a high-performance DataFrame library that handles large datasets with exceptional speed and performance.

Features

- It has high performance due to Rust implementation

- It supports lazy evaluation

- It supports multi-threading

Use Cases

- Data preprocessing and cleaning

- Statistical analysis

- ETL processes

Are you struggling with your ETL processes? Here are some of the best ETL tools and products that help you simplify the process and manage your data pipeline effectively.

Pros

- It has a high-speed performance

- It uses less memory

- It has a flexible API

Cons

- It has a smaller community when compared to Pandas

- It has a less mature ecosystem

Example Usage Code

import polars as pl

# Load data

df = pl.read_csv('data.csv')

# Display first 5 rows

print(df.head())

# Calculate summary statistics

print(df.describe())

# Filter data

filtered_df = df.filter(pl.col('column') > 10)

# Group by and aggregate

grouped_df = df.groupby('category').sum()

print(grouped_df)

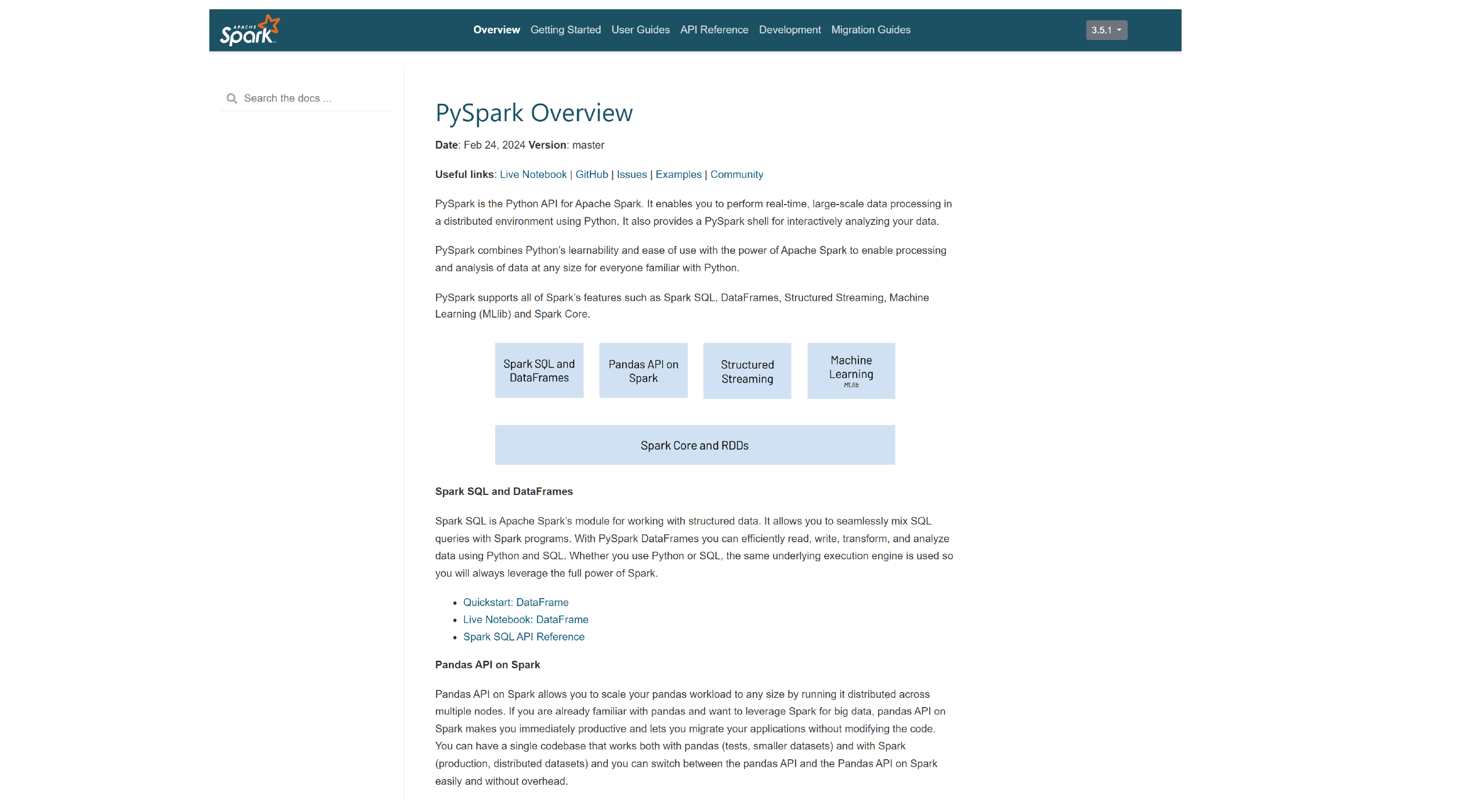

5. PySpark

PySpark is the Python API for Apache Spark. It is a distributed computing system known for its speed and ease of use in large-scale data processing and analytics. It provides a PySpark shell to analyze data.

Features

- It has distributed data processing

- It integrates with Hadoop

- It has in-memory computing

- It has advanced analytics capabilities (e.g., machine learning)

Use Cases

- Big data analytics

- Real-time stream processing

- Machine learning pipelines

Pros

- It can handle large datasets efficiently

- It integrates well with big data tools and platforms

- It is scalable and fault-tolerant

Cons

- It requires a Spark cluster for optimal performance

- It has a higher overhead for small datasets

Example Usage Code

from pyspark.sql import SparkSession

# Create Spark session

spark = SparkSession.builder.appName('example').getOrCreate()

# Load data

df = spark.read.csv('data.csv', header=True, inferSchema=True)

# Display first 5 rows

df.show(5)

# Calculate summary statistics

df.describe().show()

# Filter data

filtered_df = df.filter(df['column'] > 10)

filtered_df.show()

# Group by and aggregate

grouped_df = df.groupBy('category').sum()

grouped_df.show()

6. Vaex

Vaex, a high-performance DataFrame library similar to Polars, is designed for lazy and out-of-core data processing. It is competent and can handle large datasets that don’t fit into memory.

Features

- It supports out-of-core DataFrame processing

- It offers lazy evaluation

- It provides fast visualization and statistics

- It utilizes memory-mapping techniques

Use Cases

- Handling huge datasets

- Data exploration and visualization

- Statistical analysis

Pros

- It can handle datasets more prominent than memory efficiently

- It provides a faster performance

- It has low memory usage

Cons

- It has a limited ecosystem when compared to Pandas

- It has less community support

Example Usage Code

import vaex

# Load data

df = vaex.open('large_data.hdf5')

# Display first 5 rows

print(df.head())

# Calculate summary statistics

print(df.describe())

# Filter data

filtered_df = df[df['column'] > 10]

# Group by and aggregate

grouped_df = df.groupby(by='category', agg='sum')

print(grouped_df)

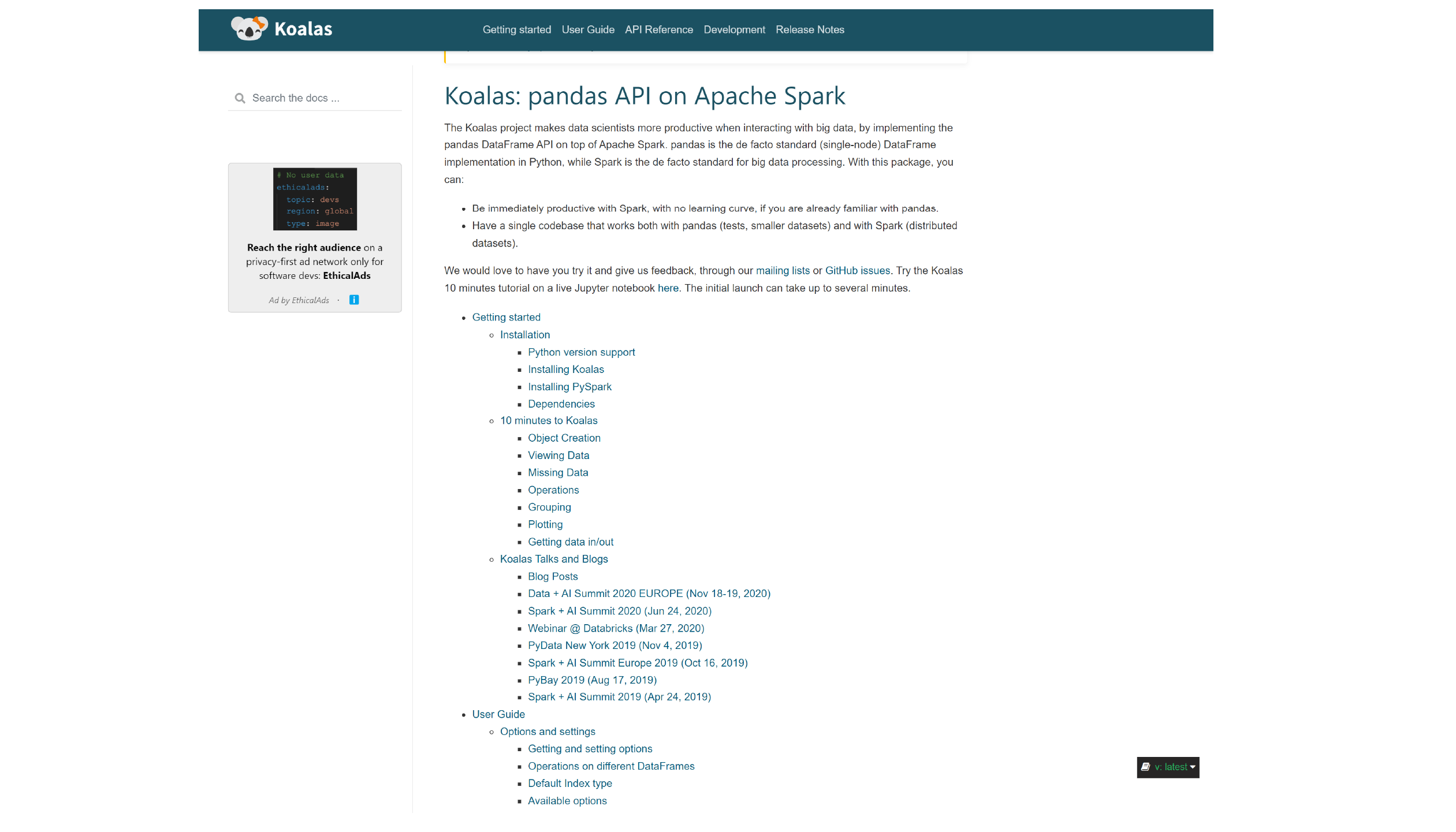

7. Koalas

Koalas provides a Pandas-like API on top of Apache Spark. It helps data scientists to be more productive when interacting with big data. It bridges the gap between Pandas and Apache Spark, making writing Pandas code easier.

Features

- It has Pandas-compatible API

- It can seamlessly integrate with Apache Spark

- It supports distributed processing

Use Cases

- Transitioning from Pandas to Spark

- Large-scale data processing

- Data preparation for machine learning

Pros

- It has a syntax similar to Pandas

- It makes use of Spark’s scalability

- It helps in facilitating code migration from Pandas to Spark

Cons

- When compared to native Spark, its performance can be lower

- It requires Spark setup

Example Usage Code

import databricks.koalas as ks

# Load data

df = ks.read_csv('data.csv')

# Display first 5 rows

print(df.head())

# Calculate summary statistics

print(df.describe())

# Filter data

filtered_df = df[df['column'] > 10]

# Group by and aggregate

grouped_df = df.groupby('category').sum()

print(grouped_df)

8. Modin

Modin is a parallel DataFrame library that can be considered a drop-in replacement for Pandas. It can accelerate workflows by scaling Pandas operations and distributing the workload across all available CPU cores.

Features

- It is a drop-in replacement for Pandas

- It supports parallel computation

- It is scalable to large datasets

Use Cases

- Speeding up existing Pandas code

- Handling larger-than-memory datasets

Pros

- Its API is similar to that of Pandas

- It has significant performance improvements

- It is easy to integrate with existing code

Cons

- It involves unnecessary complexity and computational overhead for small datasets

- It relies on external frameworks like Dask and Ray

- It does not support all Pandas operations

Example Usage Code

import modin.pandas as pd

# Load data

df = pd.read_csv('data.csv')

# Display first 5 rows

print(df.head())

# Calculate summary statistics

print(df.describe())

# Filter data

filtered_df = df[df['column'] > 10]

# Group by and aggregate

grouped_df = df.groupby('category').sum()

print(grouped_df)

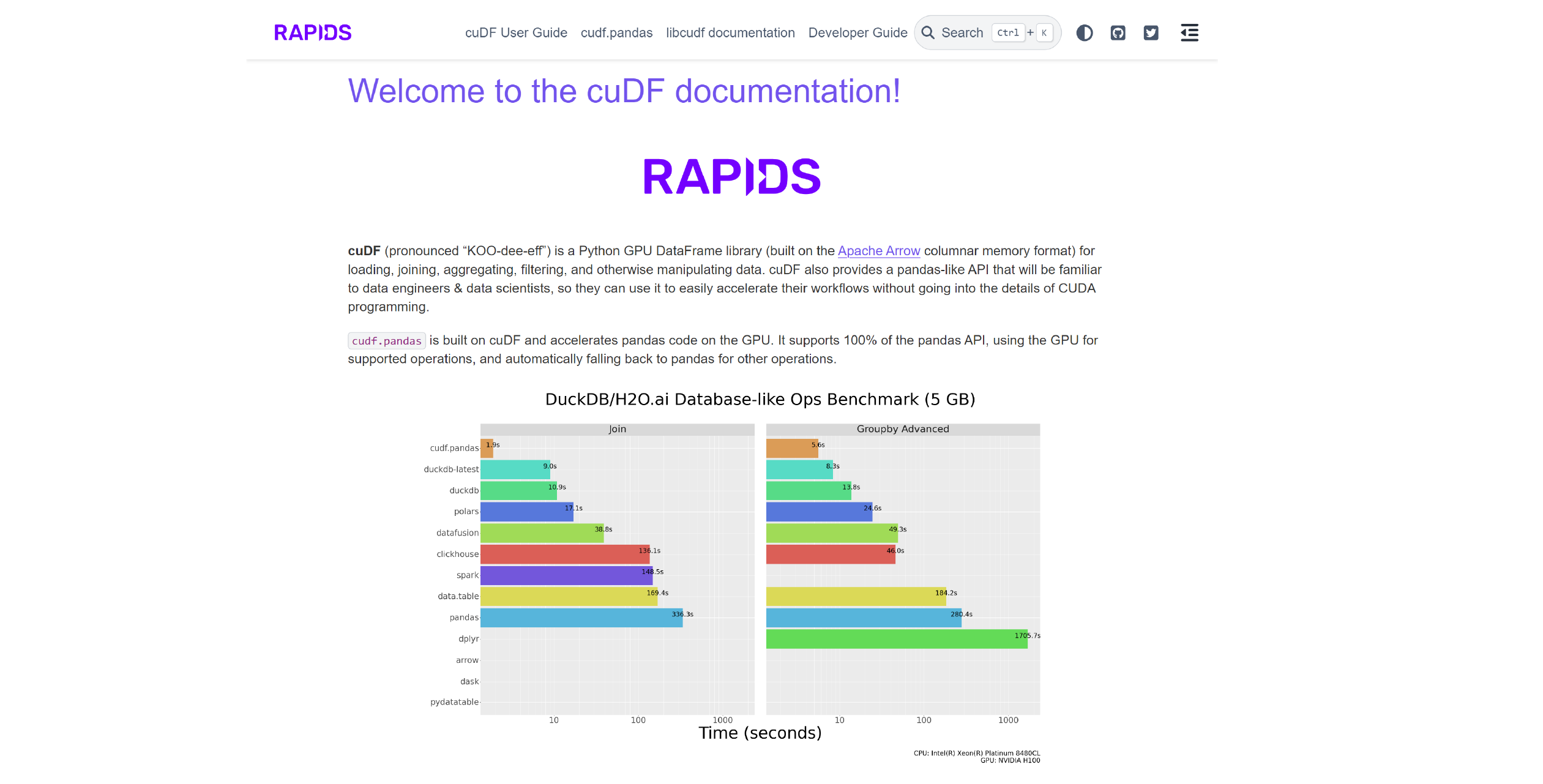

9. cuDF

cuDF is a GPU DataFrame library from RAPIDS that is built on the Apache Arrow columnar memory format. It can load, join, aggregate, filter, and otherwise manipulate data using NVIDIA GPUs.

Features

- It is compatible with Pandas

- It can integrate well with the RAPIDS AI ecosystem

Use Cases

- High-performance data processing

- Large-scale data manipulation

- Data preparation for GPU-based machine learning

Pros

- It performs extremely fast with GPUs

- It can scale well with large datasets

- It can integrate seamlessly with other RAPIDS libraries

Cons

- It requires NVIDIA GPU hardware

- It has a smaller community when compared to Pandas

Example Usage Code

import cudf

# Load data

df = cudf.read_csv('data.csv')

# Display first 5 rows

print(df.head())

# Calculate summary statistics

print(df.describe())

# Filter data

filtered_df = df[df['column'] > 10]

# Group by and aggregate

grouped_df = df.groupby('category').sum()

print(grouped_df)

10. SciPy

SciPy is a fundamental library built on NumPy. It provides scientific and technical computing in Python, including optimization, integration, interpolation, eigenvalue problems, and more.

Features

- It has advanced mathematical functions and algorithms

- It supports optimization and root-finding

- It supports signal and image processing

- It can deal with sparse matrices and linear algebra

Use Cases

- Scientific research

- Engineering simulations

- Advanced mathematical computations

Pros

- It has an extensive collection of scientific algorithms

- It has a strong integration with NumPy

- It is well-documented and widely used

Cons

- It is not designed for high-level data manipulation tasks

- It can be complex for beginners

Example Usage Code

from scipy import optimize

import numpy as np

# Define a function to minimize

def f(x):

return x**2 + 10*np.sin(x)

# Find the minimum

result = optimize.minimize(f, x0=0)

print(result.x)

Data manipulation and data extraction are interdependent, and one cannot exist without the other.

See our post on the top Python data extraction libraries if you’re interested in knowing the libraries used to extract data from various sources.

Wrapping Up

Data manipulation libraries in Python can offer a wide range of data manipulation and analysis capabilities.

However, data manipulation presents specific challenges, such as handling complex data sets, optimizing performance, or ensuring scalability.

So, it is better to outsource web scraping services to a reputed service provider like ScrapeHero, who can handle the complete scraping process for you, providing you with a convenient and stress-free solution.

You do not have to invest in building a scraping team, as we can handle all the challenges that come with web scraping expertly and professionally.

By using ScrapeHero’s web scraping service, you get enterprise-grade, custom, hassle-free data from us according to your specifications.

Frequently Asked Questions

Python is excellent for data manipulation due to its readability and the extensive support it offers through libraries.

Some prominent libraries used for data analysis in Python include Pandas, NumPy, Matplotlib, and SciPy.

We can help with your data or automation needs

Turn the Internet into meaningful, structured and usable data