In web scraping, the part HTTP clients play is crucial. But what exactly are HTTP clients, and how do they help with scraping?

This article will give you an overview of some of the popular HTTP clients in Python for web scraping and help you decide which one best suits your use case.

What Is a Python HTTP Client?

An HTTP client in Python is a library that sends HTTP requests and receives the responses. In web scraping, it is used alongside Python parsing libraries like BeautifulSoup or html5lib.

HTTP clients enable your scraper to make GET or POST requests to web servers or APIs and retrieve information from them.

In simple terms, Python HTTP clients fetch the raw HTML from a web page. HTTP clients are also used together with proxy servers for scraping.
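To make the request/response cycle concrete, here is a minimal sketch that uses only the standard library's `urllib.request` (not one of the clients reviewed below) against a throwaway local server, so it runs without internet access. The handler class, the `/api` path, and the helper names are hypothetical. The empty `ProxyHandler` disables proxies for the local demo; passing it a mapping of scheme to proxy URL would route requests through a proxy instead.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# An empty ProxyHandler means "use no proxy"; a real scraper could pass
# a mapping of scheme to proxy URL here instead.
OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a real website."""

    def do_GET(self):
        body = b"<html><body><h1>Hello, scraper</h1></body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_POST(self):
        # Echo the posted JSON body back to the client.
        length = int(self.headers.get("Content-Length", 0))
        payload = self.rfile.read(length)
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def fetch_html(url):
    """GET a page and return its raw HTML as text."""
    with OPENER.open(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


def post_json(url, data):
    """POST a JSON document and return the decoded JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(data).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with OPENER.open(req, timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


def run_demo():
    server = HTTPServer(("127.0.0.1", 0), DemoHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_port}"
    try:
        return fetch_html(base + "/"), post_json(base + "/api", {"key": "value"})
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    html, echoed = run_demo()
    print(html)
    print(echoed)
```

The libraries covered in the rest of this article wrap the same cycle in friendlier APIs and add features such as sessions, connection pooling, and asynchronous I/O.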

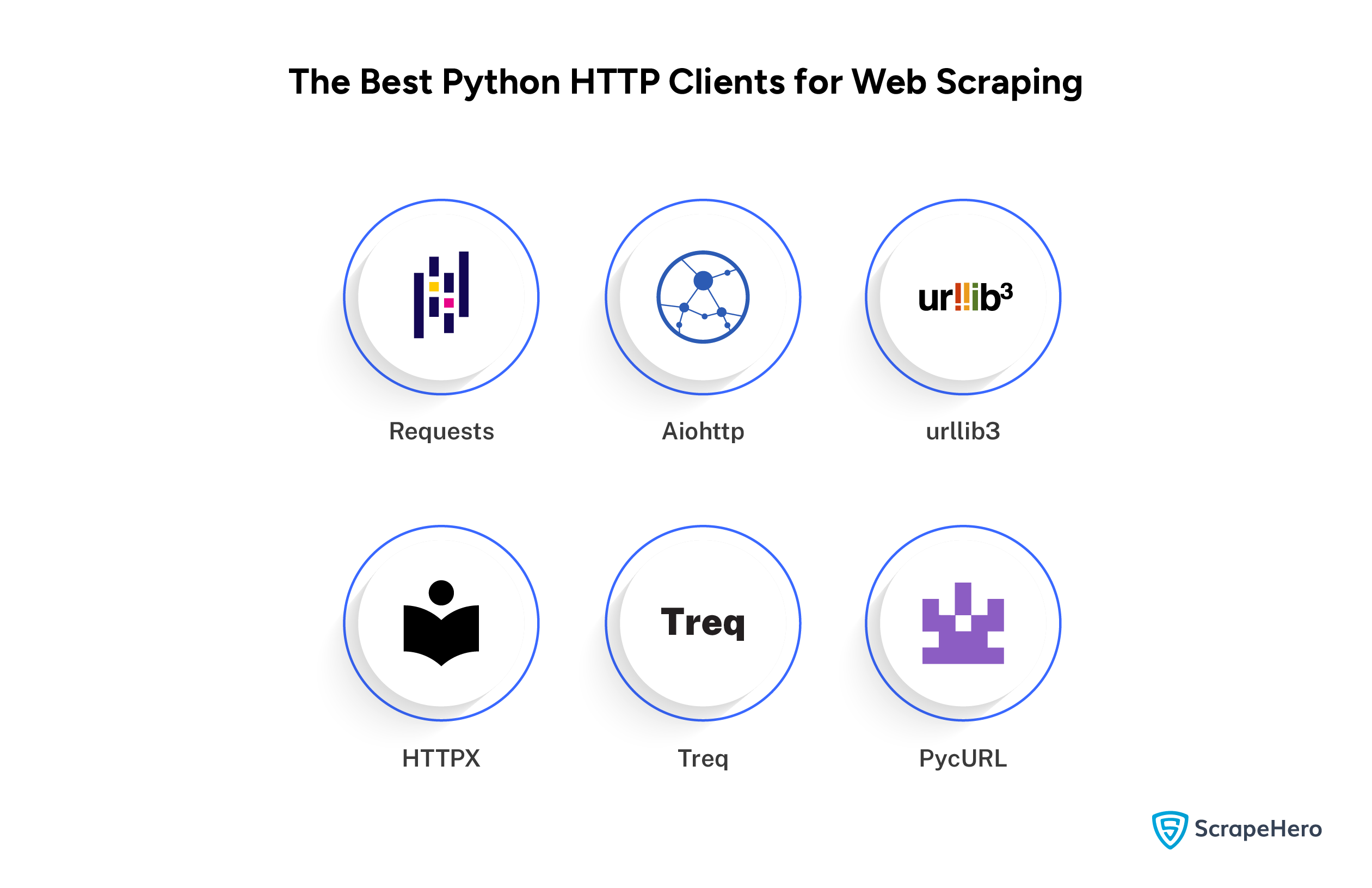

Best Python HTTP Clients for Web Scraping

Some of the prominent HTTP clients used for web scraping, all of which handle HTTP communication by sending requests and receiving responses, are:

1. Requests

Requests is a Python HTTP client for web scraping. Its straightforward approach to sending HTTP requests has made it a popular choice among developers.

It allows users to send various HTTP requests and effectively handle different types of authentication, redirections, and cookies.

Here is an example of sending a GET request with Requests when web scraping:

    import requests

    response = requests.get('https://example.com')
    print(response.text)
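The authentication, redirect, and cookie handling mentioned above can be sketched with a `requests.Session`. The credentials, cookie, User-Agent string, and `/products` path below are made up for illustration, and the request is only prepared, not sent, so the snippet runs offline.

```python
import requests

session = requests.Session()
# Headers and cookies set on the session are applied to every request it sends.
session.headers.update({"User-Agent": "example-scraper/0.1"})  # hypothetical value
session.cookies.set("session_id", "abc123", domain="example.com")  # hypothetical cookie

request = requests.Request(
    "GET",
    "https://example.com/products",  # hypothetical path
    params={"page": 2},              # becomes the query string ?page=2
    auth=("user", "secret"),         # HTTP Basic auth with placeholder credentials
)
# Merge the session's headers and cookies into the request without sending it.
prepared = session.prepare_request(request)

print(prepared.url)
print(prepared.headers["Authorization"])
print(prepared.headers["Cookie"])

# To actually send it and follow any redirects:
# response = session.send(prepared, timeout=10, allow_redirects=True)
```

Preparing the request first is a convenient way to inspect exactly what the scraper would send before it touches the network.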

Now, let’s measure the response time of Requests by adding Python’s time module to the code.

You can replace the URL https://example.com with https://www.scrapehero.com/ and measure the response time.

    import time

    import requests

    # Read the clock just before and just after the request
    start_time = time.perf_counter()
    response = requests.get('https://example.com')
    end_time = time.perf_counter()

    response_time = end_time - start_time
    print(f"Requests response time: {response_time:.4f} seconds")

Result:

With Requests, the response time for the ScrapeHero website is 0.2818 seconds.
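A single measurement like this one is sensitive to network jitter, DNS lookups, and connection setup. One slightly more robust approach, sketched here as an assumption rather than the method used for the figures in this article, is to repeat the call and report the fastest and median times. `fake_fetch` is a hypothetical stand-in for a real call such as `requests.get(url)` so that the snippet runs offline.

```python
import statistics
import time


def time_call(fetch, repeats=5):
    """Call fetch() `repeats` times and return each duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()  # monotonic, high-resolution clock
        fetch()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Return (fastest, median) so one slow outlier does not dominate."""
    return min(durations), statistics.median(durations)


def fake_fetch():
    # Hypothetical stand-in for a real request; sleeps for about 10 ms.
    time.sleep(0.01)


if __name__ == "__main__":
    fastest, median = summarize(time_call(fake_fetch))
    print(f"fastest: {fastest:.4f} s, median: {median:.4f} s")
```

To time a real client, pass a function that performs the request, for example `lambda: requests.get('https://example.com')`.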

2. AioHTTP

AioHTTP is a versatile asynchronous HTTP client/server framework that caters to both client-side and server-side web programming needs.

AioHTTP is built on asyncio and can handle large volumes of requests, which makes it well suited to high-performance applications that need non-blocking network calls.

Here is an example of making an asynchronous request using AioHTTP for web scraping:

    import asyncio

    import aiohttp

    async def fetch(session, url):
        # Send the GET request and read the body as text
        async with session.get(url) as response:
            return await response.text()

    async def main():
        # Reuse one ClientSession for all requests
        async with aiohttp.ClientSession() as session:
            html = await fetch(session, 'https://example.com')
            print(html)

    asyncio.run(main())

Just as in the previous Requests example, you can also calculate the response time of AioHTTP using the time module.

According to the calculation, the time it takes to receive responses from the ScrapeHero website is 0.2772 seconds.
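The benefit of non-blocking calls shows up when many pages are fetched at once. The sketch below illustrates the pattern with `asyncio.gather` and a semaphore that caps the number of requests in flight. To keep it runnable offline, `fake_fetch` is a hypothetical stand-in that simulates network latency with `asyncio.sleep`; in a real scraper it would be replaced by a coroutine that awaits an AioHTTP `session.get(url)` call.

```python
import asyncio
import time


async def fake_fetch(url, delay=0.1):
    # Hypothetical stand-in for a real request: waits without blocking the event loop.
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def fetch_all(urls, limit=10):
    semaphore = asyncio.Semaphore(limit)  # cap the number of requests in flight

    async def bounded(url):
        async with semaphore:
            return await fake_fetch(url)

    # gather() runs the coroutines concurrently and keeps the input order.
    return await asyncio.gather(*(bounded(u) for u in urls))


def run_demo():
    urls = [f"https://example.com/page/{i}" for i in range(5)]
    start = time.perf_counter()
    pages = asyncio.run(fetch_all(urls))
    return pages, time.perf_counter() - start


if __name__ == "__main__":
    pages, elapsed = run_demo()
    print(f"fetched {len(pages)} pages in {elapsed:.2f} s")
```

Because the five simulated requests wait at the same time, the total time should be close to one delay rather than five.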

3. urllib3

urllib3 is one of the fastest HTTP clients and can handle multiple requests. Since it is thread-safe, you can send requests from several threads and scrape multiple pages at the same time.

urllib3 features connection pooling, which means it reuses existing connections for requests, significantly enhancing efficiency and performance in network operations.

An example of using urllib3 to send a GET request is:

    import urllib3

    http = urllib3.PoolManager()
    response = http.request('GET', 'https://example.com')
    print(response.data.decode('utf-8'))

Here, the response time taken for urllib3 to get a response from the ScrapeHero website is 0.2074 seconds.
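The connection pooling and concurrent requests described in this section can be combined by sharing one `PoolManager` across a thread pool. The following is a minimal sketch under stated assumptions: the local `PageHandler` server, the `/page/N` paths, and the helper names are hypothetical and exist only so the example runs without internet access.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

import urllib3


class PageHandler(BaseHTTPRequestHandler):
    """Hypothetical local site that answers every path with a tiny page."""

    protocol_version = "HTTP/1.1"  # allow keep-alive so connections can be reused

    def do_GET(self):
        body = f"page for {self.path}".encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def scrape_all(urls, workers=4):
    # One PoolManager is shared by all threads; maxsize caps the
    # number of connections kept in the pool for each host.
    http = urllib3.PoolManager(maxsize=workers)

    def fetch(url):
        resp = http.request("GET", url, timeout=5.0)
        return resp.status, resp.data.decode("utf-8")

    try:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            return list(pool.map(fetch, urls))  # results keep the order of `urls`
    finally:
        http.clear()  # close the pooled connections


def run_demo():
    server = ThreadingHTTPServer(("127.0.0.1", 0), PageHandler)  # any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_port}"
    try:
        return scrape_all([f"{base}/page/{i}" for i in range(1, 6)])
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    for status, text in run_demo():
        print(status, text)
```

Against a real site, the list of URLs would simply point at the pages to scrape, and the worker count should stay modest to avoid overloading the server.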

Interested in knowing more about how to use urllib for web scraping? Then, read our article on web scraping using urllib.

4. HTTPX

HTTPX is a fully featured HTTP client for web scraping that supports HTTP/2 and asynchronous requests.

HTTPX is also fast and efficient for I/O-bound and concurrent code, with built-in support for streaming large responses.

You can make a GET request using HTTPX like this:

    import httpx

    response = httpx.get('https://example.com')
    print(response.text)

The response time taken for HTTPX to get a response from the ScrapeHero website is 0.4443 seconds.

5. Treq

Treq is built on top of Twisted’s HTTP client. It is inspired by Requests and provides a higher-level, more convenient API than Twisted’s primary HTTP client.

Treq is suitable for asynchronous applications and makes asynchronous HTTP requests easier to handle with a simple API. It also supports higher-level HTTP client features.

This is how Treq handles asynchronous HTTP requests in Python:

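    import treq
    from twisted.internet import reactor

    def done(body):
        # body holds the full response body as bytes
        print('Response:', body)

    d = treq.get('https://example.com')
    d.addCallback(treq.content)  # read the body; fires with bytes
    d.addCallback(done)
    d.addErrback(lambda failure: failure.printTraceback())
    d.addBoth(lambda _: reactor.stop())  # stop on success or failure

    reactor.run()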

The response time taken for Treq to get a response from the ScrapeHero website is 0.2428 seconds.

6. PycURL

PycURL is a Python interface to libcurl. It is highly efficient and an excellent option for those who need a speedy, well-performing client.

PycURL is used in scenarios where advanced network operations, such as multiple concurrent connections and extensive protocol capabilities, are required.

Here’s a simple example of how to make an HTTP GET request using PycURL.

    from io import BytesIO

    import pycurl

    buffer = BytesIO()
    c = pycurl.Curl()
    c.setopt(c.URL, 'https://example.com')
    c.setopt(c.WRITEDATA, buffer)  # write the response body into the buffer
    c.perform()
    c.close()

    print(buffer.getvalue().decode('iso-8859-1'))

The response time taken for PycURL to get a response from the ScrapeHero website is 0.5981 seconds.

See how cURL handles multiple requests simultaneously and scrapes from different web pages at once in our article on web scraping with cURL.

A Comparison Table of the Python HTTP Clients for Scraping

Here’s a comparison table for different HTTP clients in Python for web scraping, highlighting some of their prominent features:

| Feature | Requests | AioHTTP | urllib3 | HTTPX | Treq | PycURL |
| --- | --- | --- | --- | --- | --- | --- |
| Synchronous | Yes | No | Yes | Yes | No | Yes |
| Asynchronous | No | Yes | No | Yes | Yes | Yes |
| HTTP/2 Support | No | No | No | Yes | No | Yes |
| Session Support | Yes | Yes | Yes | Yes | Yes | No |
| Cookies Handling | Yes | Yes | Yes | Yes | Yes | No |
| Built-in JSON Support | Yes | Yes | No | Yes | Yes | No |
| Low-Level Control | No | No | Yes | No | No | Yes |
| Response Time (Seconds) | 0.2818 | 0.2772 | 0.2074 | 0.4443 | 0.2428 | 0.5981 |

Wrapping Up

Each HTTP client has unique features that can cater to different web scraping needs, from simple blocking requests to complex asynchronous operations.

For web scraping, you need to identify which HTTP client best suits your scraping requirements.

Web scraping requires a deep understanding of its technicalities and expertise that might be beyond your core business operations.

Navigating these complexities, along with the legal aspects involved in web scraping, is challenging for businesses.

So, you require a specialized web scraping service like ScrapeHero to take care of your data needs.

We understand the challenges you face with web scraping, and we’re here to help you solve your data-related problems and deliver quality data.

Frequently Asked Questions

To create an HTTP client in Python, you can use a library such as Requests or HTTPX, whose simple APIs let you send requests in a line or two.

httplib is a Python 2 standard-library module (renamed http.client in Python 3) that provides a low-level interface for HTTP services. "HTTP client" is a general term for any tool or library that manages HTTP requests.

The most commonly used Python library to handle HTTP requests is Requests.

In Python, the most popular HTTP client is Requests, due to its simplicity and robust feature set.

To perform a POST request using HTTPX in Python, you can use: `httpx.post('https://example.com/api', json={'key': 'value'})`. This sends JSON data to the specified URL.
