Can you even imagine a life without large language models like ChatGPT and Gemini?

Probably not; that’s how much they have become integrated into our lives.

Since their introduction, they have been utilized for various purposes, including text generation, creating poetry, coding, and in medical and legal areas.

These machine-learning models are trained on large datasets. But do you know what the data sources for LLMs are and how they are acquired?

The performance of an LLM is directly related to the quality and quantity of the data it is trained on. Partner with ScrapeHero web scraping service to get the most diverse and comprehensive data.

With ScrapeHero Cloud, you can download data in just two clicks!Don’t want to code? ScrapeHero Cloud is exactly what you need.

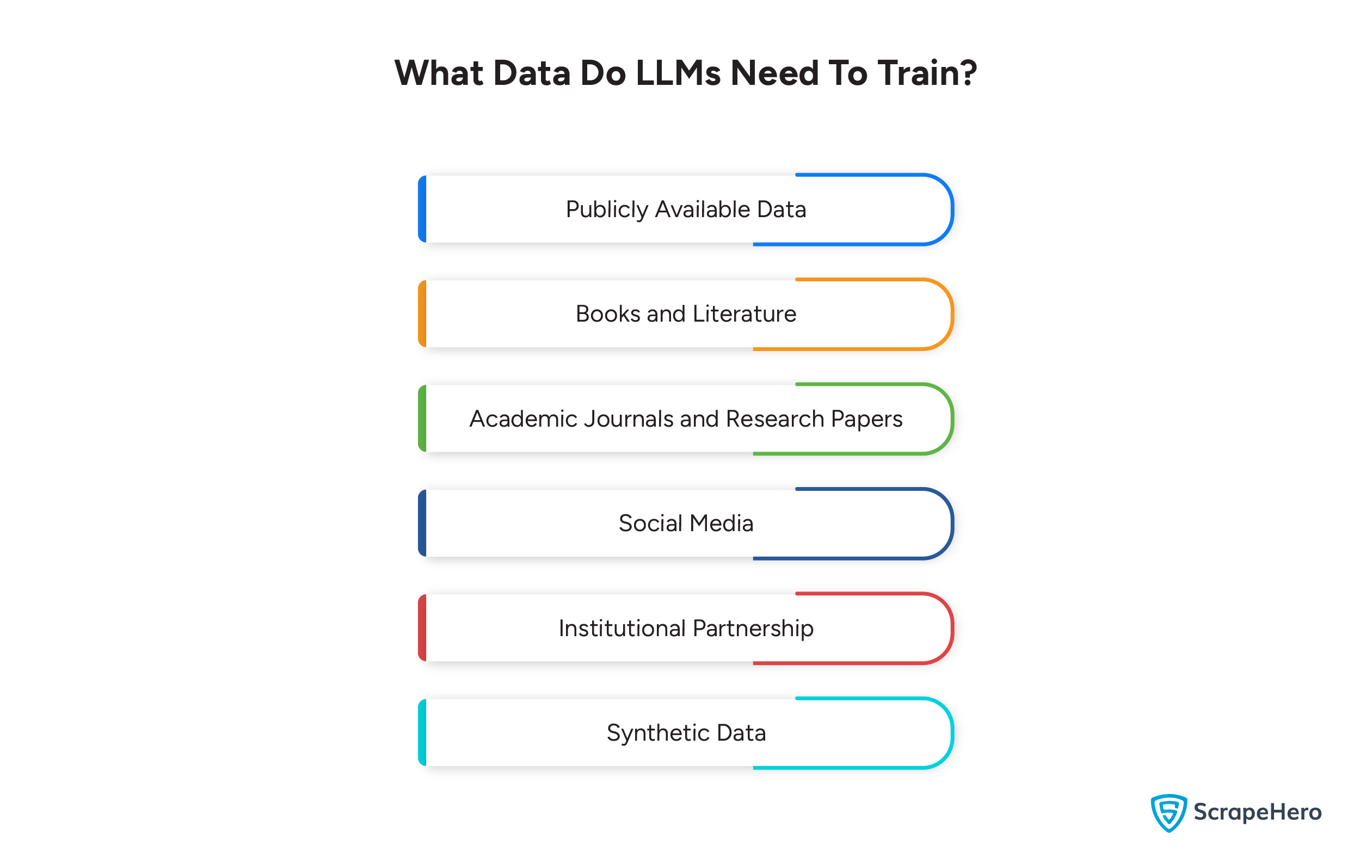

What Data Do LLMs Need to Train?

It is common knowledge that a large language model trained on a diverse and comprehensive dataset will be better equipped to handle diverse tasks and produce more accurate and relevant outputs.

LLMs rely on a variety of data sources to build their capabilities. We have listed the primary categories below:

-

Publicly Available Data

This includes data from websites, articles, blogs, and forums. Publicly accessible datasets can also be accessed.

-

Books and Literature

Digitized books are another source used in LLM data collection. These enrich the model with linguistic structures and enhance its understanding of language.

-

Academic Journals and Research Papers

These LLM data sources offer specialized knowledge and terminology that are useful for training models in specific domains.

-

Social Media

User-generated content from platforms like Twitter and Reddit also forms data sources for LLMs. They can be used to train the model with current trends and informal language usage.

-

Institutional Partnerships

Collaborations with universities or research institutions can yield access to curated datasets that are not publicly available.

-

Synthetic Data

Artificially manufactured data is also a primary LLM data source. It is often used to lessen the bias in datasets.

How to Get Data for LLMs

LLMs require vast amounts of data; the more, the better. Following are some of the methods used in the process of LLM data collection.

-

Web Scraping

Partnering with a professional web scraping service like ScrapeHero can help extract large volumes of data from websites efficiently.

-

Crowdsourcing

LLM data collection can also be done by engaging a community to gather data, but this may be less controlled in quality.

-

Data Licensing

It is also possible to purchase access to proprietary datasets that are owned by a company or research group.

-

Public Datasets

Many organizations release datasets for public use, which can be used as data sources for LLMs.

Why is Web Scraping the Best Way to Get Data for LLMs

Out of the many methods available for LLM data collection, web scraping is the best way to obtain data for the following reasons.

- Access to a Wider Range of Data: Web scraping allows you to access data from a variety of sources, including websites, forums, and social media platforms.

- Cost-Effective: Web scraping is a cost-effective way to obtain large amounts of data compared to the other options.

- Efficient: Web scraping is the automated collection of data, allowing you to collect data quickly and efficiently.

- Customizable: You can customize your web scraping process to extract only the data you need.

Why Choose ScrapeHero as your Web Scraping Service

ScrapeHero is a reliable web scraping service provider that can help you obtain high-quality data for training your LLMs.

Mentioned below are some of the benefits you would have by choosing ScrapHero as your web scraping partner:

- Expertise in Extracting Training Data for ML and AI: ScrapeHero is a web scraping service with over a decade of experience. We have the capabilities to scale and crawl the internet to help train AI models.

- Best Web Crawling Infrastructure: Our platform is built for scale and is capable of crawling the web at thousands of pages per second and extracting data from millions of web pages daily.

- Automated Data Delivery to Any Location: We collect, process, and distribute data from various online sources to multiple destinations, including cloud storage services. This can be beneficial for efficiently integrating web-sourced data for training LLMs.

- Scalability and Flexibility: ScrapeHero can handle large-scale data extraction projects and can be customized to meet your specific needs.

- Compliance: ScrapeHero adheres to all relevant data privacy laws and regulat

- Technical Support: ScrapeHero provides excellent technical support to help you with any issues you may encounter.

Since the quality and diversity of the data used to train LLMs can impact their performance, it is best to obtain data from someone who has the experience and expertise, like ScrapeHero.

Connect with ScrapeHero to obtain training data for machine learning and artificial intelligence to develop a powerful and versatile LLM.