Scraping data from Shein can be challenging due to its dynamic content and anti-scraping measures. However, a browser automation library, like Selenium, can help you scrape dynamic web pages. Here’s a step-by-step guide on how to scrape Shein data using Selenium.

Data Scraped from Shein

This tutorial scrapes Shein product data from its super-deals page.

- Product name

- Product Price

- Product URL

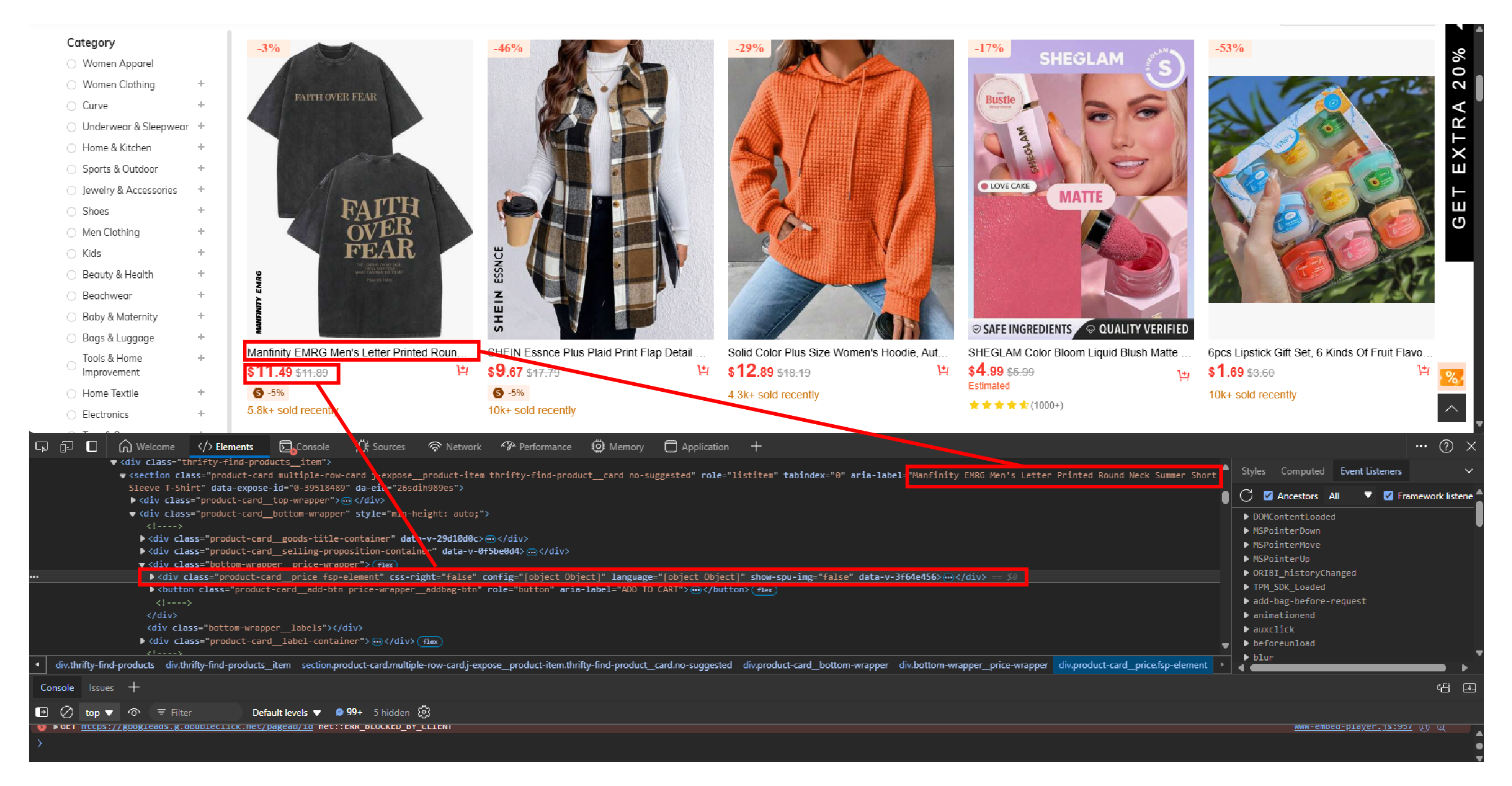

You can use the browser’s inspect panel to determine which HTML elements on Shein’s home page contain the details:

- Right-click on a product data, like price

- Click ‘Inspect’

Scrape Shein Data: The Environment

The code uses Selenium Python to fetch the HTML source code of Shein’s super-deals page. Selenium’s ability to interact with browsers makes it excellent for scraping e-commerce websites like Shein.

For parsing the source code, the code uses BeautifulSoup.

Both Selenium and BeautifulSoup are external libraries, so you need to install them, which you can do with Python pip.

pip install bs4 seleniumTo lean

The code also uses three packages from the Python standard library:

- json to save the extracted data to a JSON file

- urllib.parse to make relative links absolute

- time to delay the script execution

Scrape Shein Data: The Code

Begin your code to scrape Shein data by importing the packages mentioned above.

import json

from bs4 import BeautifulSoup

from selenium import webdriver

from selenium.webdriver.common.by import By

from urllib.parse import urljoin

from time import sleepThe code only imports two Selenium modules: webdriver and By. The webdriver module interacts with the browser (navigating to a URL, setting browser options, etc.) and the By module lets you specify how to locate an HTML element (by XPaths, class names, etc.)

This tutorial scrapes a specific number of Shein’s super-deals pages. Therefore, the scraper requires two inputs: the URL of the page and the number of pages to be scraped. It’s better to store these in different variables, allowing you to change them without touching your scraper’s main code.

source = "https://us.shein.com/super-deals"

max_pages = 5Selenium browser is faster in headless mode. You can start the browser in headless mode by adding the argument “–headless=new” to the browser’s options and launching the browser with it.

options = webdriver.ChromeOptions()

options.add_argument("--headless=new")

browser = webdriver.Chrome(options=options)Note: ChromeOptions() is used when you are using the Chrome browser while running Selenium. You need to use other methods corresponding to the browser used.

After launching the browser, use the get() method with the source variable as the argument to visit the super-deals page.

browser.get(source)You can now extract the product details, but first, declare an empty array to store the details.

products = []Use a loop to extract product details from multiple pages. The number of iterations in the loop will be according to max_pages.

In the loop:

1. Pause the script execution for 5 seconds to allow all the products on the page to load.

sleep(5)2. Get the HTML source code using Selenium’s page_source attribute.

response = browser.page_source3. Pass the source code to BeautifulSoup for parsing.

soup = BeautifulSoup(response,'lxml')4. Find all the section elements holding the product details.

product_list = soup.find('div',{'class':'thrifty-find-products'}).find_all('section')5. Iterate through the sections and

- Extract name, URL, and price

- Append the details to the array defined before the loop

- Navigate to the next page if the current page is not greater than max_pages

for section in product_list:

#extract details

try:

name = section['aria-label']

url = section.a['href']

price = section.find('span',{'class':'product-item__camecase-price'}).text

except:

continue

#append the details to the array

products.append(

{

"Name":name,

"Price":price,

"URL": urljoin("https://shein.com",url)

}

)

#navigate to the next page

if i < max_pages or i == max_pages:

try:

browser.find_element(By.XPATH,f"//span[@class='sui-pagination__inner sui-pagination__hover' and contains(text(),'{i}')]").click()

except:

print("No more pages")

break

Close the browser after the loop completes.

browser.quit()Finally, save the extracted Shein product data to a JSON file.

with open("shein.json",'w') as f:

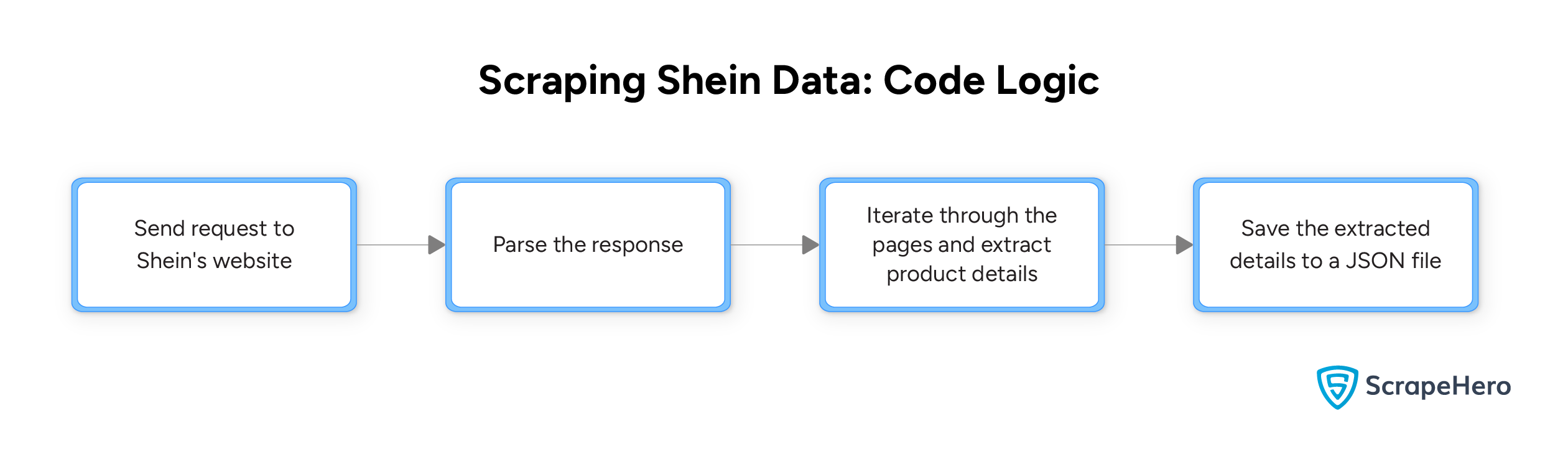

json.dump(products,f,indent=4,ensure_ascii=False)Here is a flowchart showing the entire process.

Code Limitations

Shein has strong anti-scraping mechanisms like captcha challenges and IP rate limiting. To overcome this, you might need to rotate proxies and solve captchas. This code doesn’t do that, which also means that the code is unsuitable for large-scale web scraping.

Moreover, you must keep watching Shein’s website for any changes in its HTML structure because this code relies on it to extract the product details.

Why Not Use a Web Scraping Service?

So you can use Selenium WebDriver and BeautifulSoup to scrape Shein data. This tutorial showed how you can scrape their super-deals page; similarly, you can scrape their other pages by altering the code.

You also need to alter the code for large-scale web scraping or whenever Shein changes its HTML structure. However, you can avoid all that by choosing ScrapeHero’s Web Scraping Service.

ScrapeHero is a fully-managed web scraping service provider capable of building large-scale web scraping and crawling.

FAQ

Although it’s legal to scrape a public website, Scraping Shein or any website without permission may violate its terms of service. It’s important to consult a legal expert to ensure compliance. Check out this page on the legality of web scraping to learn more.

Shein has rate limits and IP bans prevent scraping. Rotating proxies allows you to send requests from different IPs, reducing the chance of getting blocked.

Scraping Shein without browser automation is difficult due to the site’s use of JavaScript to load content dynamically. However, if you don’t want to use Selenium, you can use other browser automation libraries like Playwright or Puppeteer.

Here are the steps for web scraping without getting blocked by Shein

Use rotating proxies

Mimic human behavior (like random delays between requests)

Respect the site’s robots.txt file

Avoid to many requests

We can help with your data or automation needs

Turn the Internet into meaningful, structured and usable data