Did you know that unethically bypassing a website's anti-scraping measures can lead to serious legal repercussions?

It’s true. Websites implement anti-scraping measures to protect their data and maintain server integrity.

While navigating around anti-scraping measures may appear to be a technical challenge, it is fundamentally an ethical consideration.

This article will guide you on how to ethically avoid anti-scraping measures by following some best practices.

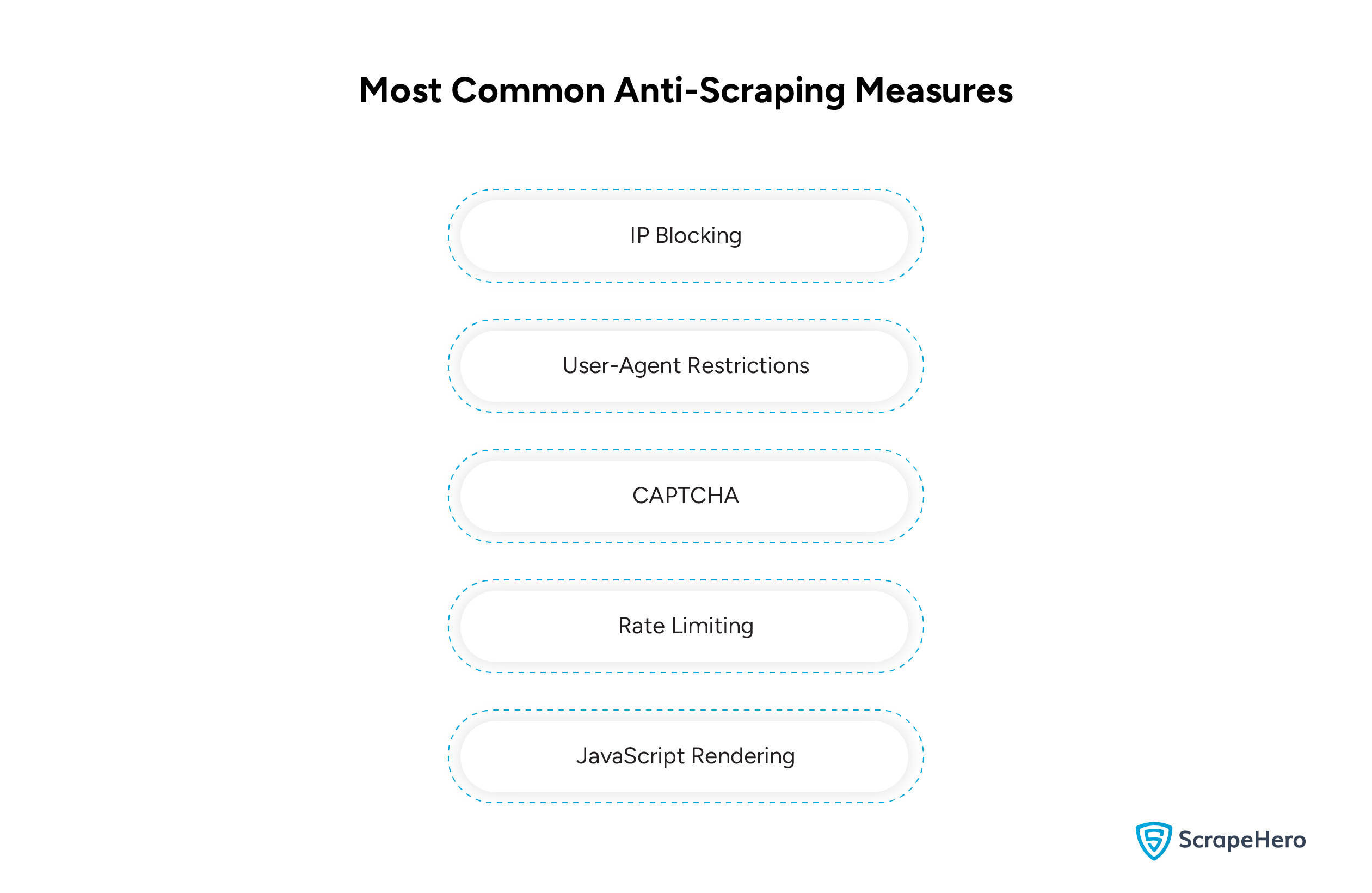

Common Anti-Scraping Measures

Anti-scraping measures are strategies that websites implement to prevent automated bots from accessing their content.

These measures can range from simple IP address blocking to complex methods like CAPTCHA verification.

To counter anti-scraping measures ethically, you must understand their various types. Some of the most common types include:

1. IP Blocking

When a website detects an unusually high volume of requests from a single IP address, which is a common sign of a scraping attempt, it blocks that IP address.

2. User-Agent Restrictions

Websites can inspect the User-Agent header to determine whether a request comes from a legitimate browser or a bot.

3. CAPTCHA

CAPTCHAs distinguish between human visitors and bots by giving the users different challenges to solve.

4. Rate Limiting

To protect resources and maintain server performance, websites impose limits on the number of requests within a specific timeframe.

5. JavaScript Rendering

Some websites generate their content dynamically with JavaScript, which makes them difficult for scrapers that don’t execute JavaScript.

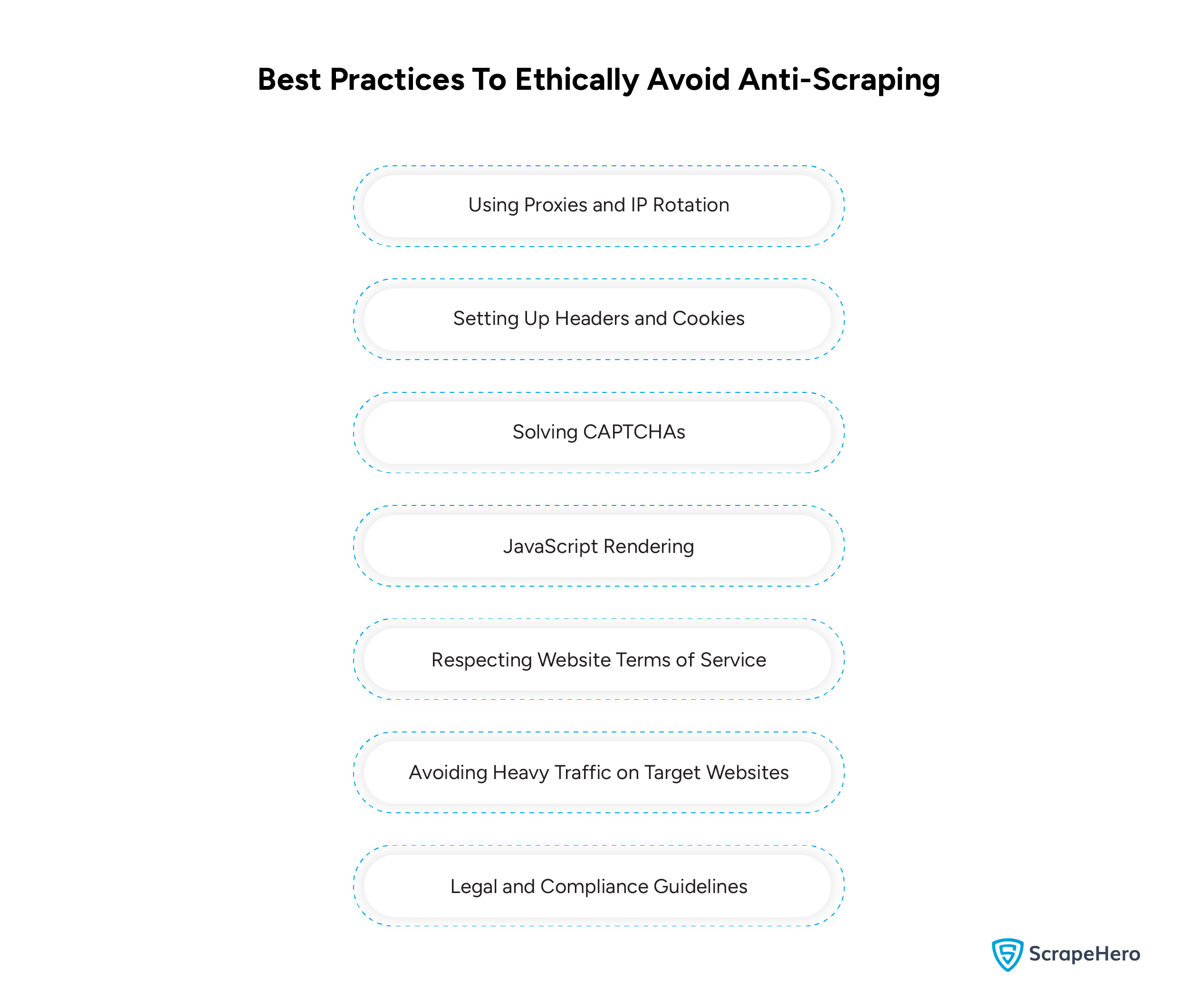

Best Practices for Avoiding Anti-Scraping Measures

When web scraping, you should ensure that your actions do not affect the website or its users.

By following these methods, you can respect legal and ethical boundaries and avoid being blocked.

- Using Proxies and IP Rotation

- Setting Up Headers and Cookies

- Solving CAPTCHAs

- JavaScript Rendering

- Respecting Website Terms of Service

- Avoiding Heavy Traffic on Target Websites

- Legal and Compliance Guidelines

1. Using Proxies and IP Rotation

To avoid IP blocks when sending many requests, use proxies and IP rotation instead of a single IP address.

With IP rotation, you distribute your requests across different IP addresses and locations, which mimics organic traffic patterns.

For this, you can use residential proxies, which route traffic through real devices in various regions and are less likely to raise suspicion.
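The rotation idea can be sketched in a few lines. This is a minimal illustration, not a production setup: the `ProxyRotator` class and the proxy URLs are hypothetical, and a real pool would come from your proxy provider.

```python
from itertools import cycle


class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("proxy pool must not be empty")
        self._pool = cycle(proxy_urls)

    def next_proxies(self):
        """Return a mapping in the shape accepted by a `proxies=` argument."""
        url = next(self._pool)
        return {"http": url, "https": url}


# Hypothetical proxy endpoints; replace them with ones from your provider.
rotator = ProxyRotator([
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
])

first = rotator.next_proxies()
second = rotator.next_proxies()
third = rotator.next_proxies()  # the pool wraps around to the first proxy
```

If you use the `requests` library, each mapping can be passed as `requests.get(url, proxies=rotator.next_proxies())`, so that consecutive requests leave through different proxies.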

Ethical Consideration

Use reputable residential proxies rather than data center proxies, which are more often associated with spamming or malicious activities.

2. Setting Up Headers and Cookies

By configuring HTTP headers and cookies properly, you can reduce the chance of being blocked. Websites usually analyze the User-Agent header to determine whether a request comes from a legitimate browser or a bot.

You can set the User-Agent header to reflect an actual browser and maintain cookies from previous sessions so that your requests look more like those of a human visitor.
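As a rough sketch of the mechanics, the standard library can attach headers and keep cookies across requests. The helper name `build_opener_with_cookies` and the User-Agent string below are assumptions made for illustration; the string is a parameter, so you can supply whatever value is appropriate and permitted for your use case.

```python
import urllib.request
from http.cookiejar import CookieJar


def build_opener_with_cookies(user_agent):
    """Create an opener that sends fixed headers and remembers cookies."""
    jar = CookieJar()
    opener = urllib.request.build_opener(
        urllib.request.HTTPCookieProcessor(jar)
    )
    # These headers are sent with every request made through this opener.
    opener.addheaders = [
        ("User-Agent", user_agent),
        ("Accept-Language", "en-US,en;q=0.9"),
    ]
    return opener, jar


# Hypothetical User-Agent that identifies the scraper and a contact page.
opener, jar = build_opener_with_cookies(
    "example-research-bot/1.0 (+https://example.com/bot-info)"
)

# opener.open("https://example.com/page") would send the headers above and
# store any cookies the server sets in `jar` for use on later requests.
```

Reusing the same opener for a whole crawl keeps the session cookies consistent, which is the "maintain cookies from previous sessions" part of the advice.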

Ethical Consideration

Do not use deceptive user-agents, and do not scrape behind paywalls or restricted access pages without permission.

3. Solving CAPTCHAs

CAPTCHAs distinguish between humans and bots. To get past CAPTCHA systems, you can use services like 2Captcha.

Such services employ machine learning or human workers to solve CAPTCHA challenges.

These services improve automation efficiency, provided you use them within ethical web scraping practices.

Ethical Consideration

Use CAPTCHA solving only on websites where scraping is allowed to avoid conflicting with the website’s purpose.

4. JavaScript Rendering

To scrape data from websites that use JavaScript to load content dynamically, you need browser automation tools like Puppeteer or Selenium, which can drive a headless browser.

These tools render the JavaScript before extracting the data, which allows you to scrape content that is not present in the initial HTML source.

Many websites load content this way, so traditional scraping methods that only fetch the raw HTML don’t work on them.
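A minimal sketch of this approach with Selenium's Python bindings might look like the following. It assumes Selenium and a matching Chrome installation are available; the function names `headless_chrome_args` and `render_page` are illustrative, not part of any library.

```python
def headless_chrome_args():
    """Command-line flags for running Chrome without a visible window."""
    # "--headless=new" is the flag used by recent Chrome versions;
    # older versions use plain "--headless" instead.
    return ["--headless=new", "--window-size=1280,800"]


def render_page(url):
    """Load `url` in headless Chrome and return the rendered HTML."""
    # Imported here so this module can be loaded without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    for arg in headless_chrome_args():
        options.add_argument(arg)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Content that loads after the initial page load may need an
        # explicit wait before it appears in page_source.
        return driver.page_source
    finally:
        # Always close the browser, even if loading the page fails.
        driver.quit()
```

Calling `render_page("https://example.com")` would return the HTML as the browser sees it after scripts run, rather than the raw response body.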

Ethical Consideration

To reduce the strain your scraping activities place on the server, you need to limit your requests, respect rate limits, and add delays between scrapes.
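One common way to respect rate limits is to back off when the server signals overload, for example with an HTTP 429 response and a `Retry-After` header. The sketch below is one possible policy, with assumed defaults for `base` and `cap`; the helper name `backoff_delay` is hypothetical.

```python
def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0):
    """Return how many seconds to wait before retry number `attempt` (0-based).

    If the server sent a numeric Retry-After value, honor it. Otherwise
    fall back to exponential backoff: base, 2*base, 4*base, ... up to cap.
    """
    if retry_after is not None:
        try:
            return max(float(retry_after), 0.0)
        except ValueError:
            # Retry-After can also be an HTTP date; this sketch does not
            # parse dates and uses exponential backoff instead.
            pass
    return min(base * (2 ** attempt), cap)


delays = [backoff_delay(n) for n in range(4)]  # [1.0, 2.0, 4.0, 8.0]
```

In a scraper, you would sleep for `backoff_delay(attempt, response.headers.get("Retry-After"))` seconds before retrying a throttled request.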

5. Respecting Website Terms of Service

Before scraping, you need to review a website’s terms of service (ToS). Some websites prohibit scraping or require you to use their official APIs.

Ignoring such rules may result in legal action. Official APIs can offer structured and authorized access to data, avoiding legal and ethical concerns.

Ethical Consideration

Use official APIs where possible and follow the guidelines established by the website to avoid ethical and legal issues.

6. Avoiding Heavy Traffic on Target Websites

An ethical concern arises when too many requests are made to a website’s servers in a short period.

Such bursts of requests can slow down or even crash the website, degrading the experience for regular users.

You can minimize the load on the server by throttling requests and spacing them out over time.
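Throttling can be as simple as enforcing a minimum gap between consecutive requests. The following is a minimal sketch; the `Throttle` class and the two-second interval are illustrative choices, and the right interval depends on the target site.

```python
import time


class Throttle:
    """Enforce a minimum interval between consecutive actions."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock   # injectable so the class can be tested
        self._sleep = sleep
        self._last = None     # time of the previous action, if any

    def wait(self):
        """Sleep if needed, then record the time. Returns seconds slept."""
        waited = 0.0
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last = self._clock()
        return waited


# Allow at most one request every two seconds (an assumed value).
throttle = Throttle(min_interval=2.0)

# In a crawl loop, call throttle.wait() immediately before each request.
```

Because the first call never sleeps and later calls only sleep for the time still remaining, the scraper is not slowed down more than the chosen interval requires.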

Ethical Consideration

Limit your request rate and add delays between actions so that your traffic resembles human browsing.

7. Legal and Compliance Guidelines

When web scraping, you must comply with applicable local and international laws, such as the US Computer Fraud and Abuse Act (CFAA) and the California Consumer Privacy Act (CCPA).

The CFAA prohibits unauthorized access to protected computer systems, and the CCPA regulates the collection of personal information from California residents.

For example, even where scraping job listings is permitted, scraping contact details or personal email addresses without consent can be illegal.

Ethical Consideration

Avoid scraping personal or sensitive information without permission to ensure that the data you scrape complies with the legal requirements.
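One practical safeguard is to strip personal identifiers from scraped text before storing it. The sketch below redacts email addresses with a deliberately simple pattern; the pattern and the `redact_emails` helper are illustrative assumptions, and they are neither a complete personal-data filter nor a substitute for legal review.

```python
import re

# A simplified email pattern; real-world addresses can be more varied.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text, placeholder="[redacted email]"):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub(placeholder, text)


sample = "Apply via jobs@example.com or visit the careers page."
cleaned = redact_emails(sample)
# cleaned == "Apply via [redacted email] or visit the careers page."
```

Running a filter like this at ingestion time means that personal addresses appearing in a job listing never reach your stored dataset.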

How Does ScrapeHero Help You Ethically Navigate Anti-Scraping Measures?

To avoid anti-scraping measures, you need technical expertise and ethical responsibility, and you must use techniques like header settings, CAPTCHA solving, etc.

To handle such anti-scraping measures, enterprises may need to set up, train, and maintain an internal team, which can cost more than hiring a web scraping service provider.

ScrapeHero can provide a reliable solution in such situations, as we offer complete data pipeline management and help clients navigate complex regulations.

As a fully managed web scraping service, we can bypass anti-scraping measures and provide you with valuable data without compromising integrity.

Frequently Asked Questions

Yes. Websites detect web scraping in various ways, such as monitoring for unusual traffic patterns, tracking request volume per IP address, and presenting CAPTCHAs.

It entirely depends on intent and compliance with legal restrictions.

Ethical web scraping is when you respect site terms, don’t harm users or businesses, and don’t collect sensitive or private data without consent.

Cloudflare is a popular anti-bot service that blocks automated web scraping activities using techniques such as bot detection, rate limiting, and CAPTCHAs.

Web scraping impacts cybersecurity when websites lack proper protections, leading to data theft by malicious scrapers.

The legality of web scraping varies by jurisdiction. However, if it complies with website terms and doesn’t infringe on privacy rights, it is generally considered legal.
