Did you know that web scrapers often face the same geo-restriction technology used by platforms like Netflix to manage localized content?

Geo-restrictions, also called geo-blocking, are not just a speed bump; they are measures designed to restrict access based on location, and they often stop scraping operations as well.

To overcome such barriers, you need a strategic combination of advanced tools and techniques that ensure legitimate access to content.

This blog will guide you through the different challenges of geo-restrictions in web scraping and explain in detail how to overcome them effectively.

Why Do Websites Implement Geo-Restrictions?

Websites implement geo-restrictions mainly because of legal concerns. They need to adhere to regional laws and regulations to ensure that content access aligns with local policies.

Market segmentation is another reason websites use geo-restrictions. Many businesses target specific audiences by tailoring content based on geographic location.

Geo-blocking also helps block scraping attempts from foreign bots, prevent misuse, and protect sensitive information or proprietary content.

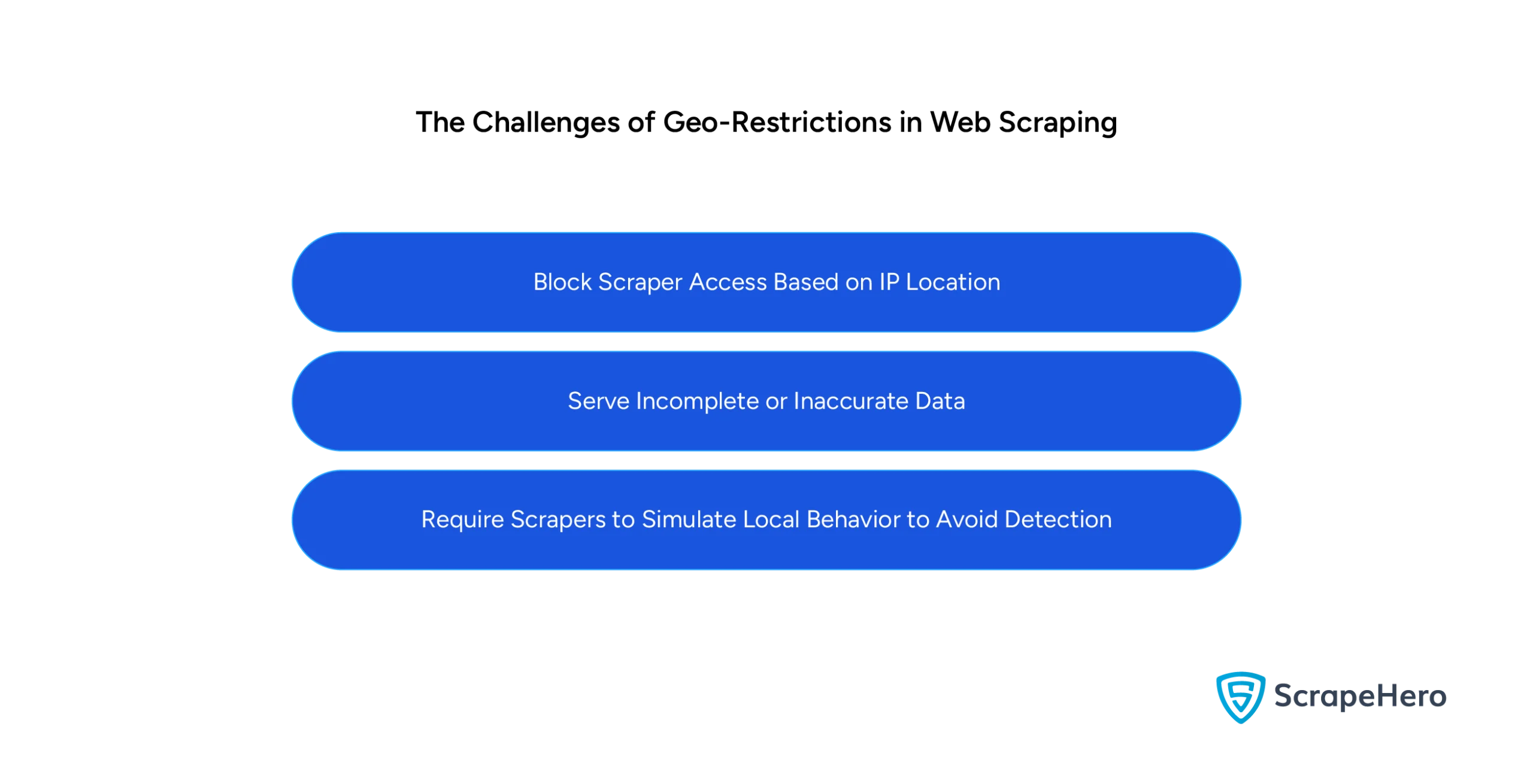

The Challenges of Geo-Restrictions in Web Scraping

Geo-restrictions pose significant challenges for web scraping. Some of the key issues are discussed below:

- Block Scraper Access Based on IP Location

- Serve Incomplete or Inaccurate Data

- Require Scrapers to Simulate Local Behavior to Avoid Detection

1. Block Scraper Access Based on IP Location

Websites can detect and block IP addresses from unauthorized geographic regions, creating a digital barrier.

Due to this restriction, scrapers cannot access content that is specific to certain areas, limiting the scope of data collection.

As a result, businesses targeting global markets struggle to obtain the localized information they need for informed decision-making.
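A scraper first has to recognize that it has been geo-blocked. The sketch below is one rough way to do that, assuming the site answers with HTTP 451 (Unavailable For Legal Reasons) or with a block page containing a recognizable phrase. The phrases in `BLOCK_PHRASES` and the function name are hypothetical; real sites word their block pages differently.

```python
# Hypothetical phrases; adjust them to the block pages you actually encounter.
BLOCK_PHRASES = (
    "not available in your country",
    "not available in your region",
)


def looks_geo_blocked(status: int, body: str) -> bool:
    """Heuristic check: does this response resemble a geo-block?"""
    if status == 451:  # HTTP 451: Unavailable For Legal Reasons
        return True
    text = body.lower()
    # Some sites return 200 or 403 with a block page instead of 451.
    return any(phrase in text for phrase in BLOCK_PHRASES)
```

A plain 403 with no matching phrase is deliberately not treated as a geo-block here, since it can have many other causes.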

2. Serve Incomplete or Inaccurate Data

Instead of blocking requests outright, some websites serve incomplete or outdated data to requests originating from restricted regions.

This tactic ensures that users outside the target area cannot derive meaningful insights.

This is a challenge for scrapers as the data collected is often unreliable or unusable for analysis, leading to flawed business decisions later.
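One way to catch this early is to measure how complete the scraped records are before they reach analysis. The following is a minimal sketch, assuming each record is a dictionary and that you know which fields should always be present; the function names and field names are illustrative.

```python
def missing_fields(record: dict, required: list) -> list:
    """Return the required fields that are absent or empty in a scraped record."""
    return [field for field in required if record.get(field) in (None, "", [])]


def completeness(records: list, required: list) -> float:
    """Fraction of required fields that are populated across all records."""
    total = len(records) * len(required)
    if total == 0:
        return 0.0
    missing = sum(len(missing_fields(record, required)) for record in records)
    return (total - missing) / total
```

If the same pages scraped from a permitted region score noticeably higher than those scraped from another region, the site may be serving degraded data to the second one.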

3. Require Scrapers to Simulate Local Behavior to Avoid Detection

Some websites with advanced anti-scraping mechanisms identify suspicious activity by monitoring user behavior.

In most cases, they look for browsing patterns, language settings, and interactions that match those of a genuine local user.

Scrapers then have to simulate these behaviors, for example with dynamic user-agents, which adds significant complexity to the scraping setup.
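Language settings are the easiest of these signals to align. Here is a small sketch that builds request headers whose `Accept-Language` value matches a target locale. The function name and the example user-agent string are assumptions for illustration, and headers alone will not satisfy systems that also analyze browsing patterns.

```python
# Example desktop Chrome user-agent string; an illustrative value only.
EXAMPLE_USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)


def build_local_headers(locale: str, user_agent: str = EXAMPLE_USER_AGENT) -> dict:
    """Build request headers whose language preferences match the target locale."""
    language = locale.split("-")[0]
    preferences = [locale, f"{language};q=0.9"]
    if language != "en":
        preferences.append("en;q=0.5")  # low-priority English fallback
    return {
        "User-Agent": user_agent,
        "Accept-Language": ",".join(preferences),
    }
```

For a German target, `build_local_headers("de-DE")` yields an `Accept-Language` of `de-DE,de;q=0.9,en;q=0.5`, which is more consistent with a German IP address than a default English-only header.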

Effective Methods to Overcome Geo-Restrictions

You can get around geo-restrictions by using different methods. These are some of the most effective ways to bypass geo-blocking:

- Using Proxies

- Rotating IPs and User-Agents

- Using VPNs

- Tor and Ethical Considerations

- Using Smart DNS

1. Using Proxies

Proxies mask your actual geographic origin by routing your requests through a server in a different location.

They are essential for scraping without getting blocked because your requests appear to come from a permitted location.

Residential proxies route traffic through real user devices, making them highly effective and difficult to detect.

Data center proxies, on the other hand, are faster and more cost-effective but more likely to be flagged by advanced anti-scraping systems.

Because traffic from a well-chosen proxy resembles that of ordinary local users, the chances of detection are lower.

Proxies are especially reliable for web scraping when websites implement strict anti-bot measures or analyze traffic patterns to block suspicious activity.
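To make this concrete, here is a minimal sketch that sends a request through a proxy located in a chosen country, using only Python's standard library. The proxy URLs, credentials, and the `PROXIES_BY_COUNTRY` mapping are placeholders; your proxy provider will supply the real endpoints.

```python
import urllib.request

# Hypothetical proxy endpoints keyed by country code; substitute your provider's.
PROXIES_BY_COUNTRY = {
    "de": "http://user:pass@de.proxy.example.com:8080",
    "us": "http://user:pass@us.proxy.example.com:8080",
}


def opener_for_country(country: str) -> urllib.request.OpenerDirector:
    """Create an opener that routes HTTP and HTTPS traffic through a country's proxy."""
    proxy_url = PROXIES_BY_COUNTRY[country.lower()]
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, country: str, timeout: float = 15.0) -> str:
    """Fetch a page so that the request appears to originate from the given country."""
    opener = opener_for_country(country)
    with opener.open(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `fetch("https://example.com/", "de")` would route the request through the German endpoint, provided that endpoint actually exists and accepts your credentials.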

2. Rotating IPs and User-Agents

By rotating IP addresses, you can ensure that multiple requests appear to come from different users. This makes detection by anti-scraping systems less likely.

If you combine IP address rotation with user-agent rotation, which simulates different browser or device types, the scraping will look more natural.

When websites have tracking systems that monitor repeated requests or identical user-agents, these techniques can help you avoid blocks when scraping.

Rotating IPs also helps you maintain anonymity and bypass geo-restrictions that rely on identifying user location.

Implemented well, these strategies make data extraction smoother and reduce the risk of being flagged or banned.

However, you must ensure proper configuration to avoid inconsistencies or inefficiencies during data collection.
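A simple round-robin rotation can be sketched as follows. The class name `IdentityRotator` and the shape of the returned dictionary are assumptions for illustration; the proxy URLs and user-agent strings you pass in would come from your own pool.

```python
import itertools


class IdentityRotator:
    """Cycle through proxies and user-agents so consecutive requests differ."""

    def __init__(self, proxies: list, user_agents: list):
        if not proxies or not user_agents:
            raise ValueError("at least one proxy and one user-agent are required")
        self._proxies = itertools.cycle(proxies)
        self._agents = itertools.cycle(user_agents)

    def next_identity(self) -> dict:
        """Return the proxy and headers to use for the next request."""
        return {
            "proxy": next(self._proxies),
            "headers": {"User-Agent": next(self._agents)},
        }
```

Using pools of different lengths means the same proxy is not always paired with the same user-agent. If the target is geo-restricted, keep every proxy in the pool inside the permitted region, since rotating into a blocked region reintroduces the problem.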

3. Using VPNs

VPNs (Virtual Private Networks) encrypt your internet connection and let you choose a server in a location where the target website is accessible.

VPNs mask your actual IP address and make it appear as if you are browsing from a permitted region.

VPNs are valuable for small-scale scraping tasks, but they are less effective for large-scale scraping because they typically offer fewer IP addresses.

Furthermore, advanced anti-scraping mechanisms may detect VPN traffic and flag or block the connection.

Despite this, VPNs remain a reliable choice for secure and private access to region-specific content.

4. Tor and Ethical Considerations

The Tor network routes your internet traffic through multiple relays, making your original location very difficult to trace.

While Tor can assist in bypassing geo-restrictions, its slower speeds and lack of stability make it unsuitable for high-volume scraping tasks.

Since Tor is often associated with activities that violate terms of service, it raises serious ethical and legal concerns.

Tor also runs on shared, volunteer-operated infrastructure, which introduces reliability issues and makes it less suitable for commercial data scraping needs.

So, when using Tor, you must be cautious and ensure compliance with local laws and regulations.

5. Using Smart DNS

Smart DNS services do not change your IP address; instead, they route specific traffic related to geo-blocked websites through a server in the required location.

Compared to VPNs or proxies, Smart DNS services bypass restrictions while maintaining faster connection speeds.

Smart DNS is suitable for bypassing content filtering on websites that rely heavily on geo-blocking, but it lacks the encryption and security features provided by other tools like VPNs.

Also, Smart DNS services are ideal for scenarios where speed is critical and encryption is not a priority.

However, you must be aware that Smart DNS services might not work with websites that employ advanced detection systems or dynamic geo-restrictions.

How ScrapeHero Web Scraping Service Can Help

To overcome geo-restrictions in web scraping, you require the right tools, knowledge, and ethical practices.

Although there are many effective methods, such as proxy servers, VPNs, and IP rotation, choosing the right approach based on your specific needs can be challenging.

A web scraping service like ScrapeHero can take care of everything for you, handling all the processes involved in web scraping, from bypassing anti-bot methods to delivering quality-checked data.

With over a decade of industry experience, we always prioritize compliance and transparency to ensure successful and sustainable scraping.

Frequently Asked Questions

How do you bypass geo-restrictions?

To bypass geo-restrictions, you can use proxies, VPNs, or Smart DNS to mask your geographic location.

How do you unblock geolocation?

To unblock geolocation, you can adjust your browser settings or use tools like VPNs to simulate another location.

Is it legal to use a VPN to bypass geo-restrictions?

The legality of using a VPN to bypass geo-restrictions depends on the region. In most countries, using a VPN isn’t illegal, but bypassing restrictions might breach the terms of service.

How do you reset geolocation permissions?

To reset geolocation permissions, you can clear your browser cache or reset location settings to their defaults.