Are you worried that your competitors are running promotions that you aren’t? Here’s a solution. Amazon has a Today’s Deals page, which displays new deals and promotions. By scraping this page, you can monitor the deals and track your competitors’ offers.

This tutorial shows you how to scrape Amazon deals using Python requests and BeautifulSoup. Let’s start.

Data Scraped from Amazon Deals Page

Here’s what this tutorial scrapes from the Amazon deals page:

- Deal Name

- ASIN (Amazon Standard Identification Number)

- Base Price

- Price to Pay

- Savings

All this data is available in a script inside the HTML source code. So you don’t have to worry about XPaths for Amazon deal scraping.

Step-by-Step: How to Scrape and Monitor Amazon Deals

Set Up the Environment

This code needs three external packages for Amazon deal scraping:

- requests: for handling HTTP requests

- BeautifulSoup: for parsing HTML source code

- lxml: the parser that BeautifulSoup uses in this tutorial

You can install them using pip.

pip install requests beautifulsoup4 lxml

You’ll also need four packages included in the Python standard library:

- re: To manage regular expressions

- time: To implement pauses during script execution

- random: To get a random number

- json: To handle JSON data

Import all these modules:

import requests, re, json

from bs4 import BeautifulSoup

from time import sleep

from random import randint

Define a Function to Extract Deals

This code uses a function, get_deals(), to extract deals from Amazon’s Today’s Deals page.

It starts by locating the appropriate script tag; this tag will have a string that contains ‘% off’ in it because it stores the discount values. Therefore:

- Select all the script tags

- Iterate through them

- Find the one with the text ‘% off’ in it

scripts = soup.find_all('script')
reqScript = ''
for script in scripts:
    if '% off' in script.text:
        reqScript = script.string

Then, you can extract the JSON string from the script tag’s text content with a regular expression using the re module. You need to manually analyze the JSON string to determine the appropriate pattern.

Here, the pattern is assets\.mountWidget\('slot-14', (\{.+\})\)

json_string = re.findall(r"assets\.mountWidget\('slot-14', (\{.+\})\)",reqScript)[0]

Next, parse the JSON string and extract the required data points from the correct keys; this requires some tedious searching. Moreover, you need to use a try-except block to catch any errors.

json_data = json.loads(f'[{json_string}]')
products = json_data[0]['prefetchedData']['entity']['rankedPromotions']
for i in range(len(products)):
    try:
        asin = products[i]['product']['entity']['asin']
        relative_url = products[i]['product']['entity']['links']['entity']['viewOnAmazon']['url']
        url = 'https://amazon.com'+ relative_url
        name = relative_url.split('/')[1].replace('-',' ')
        base_price = products[i]['product']['entity']['buyingOptions'][0]['price']['entity']['basisPrice']['moneyValueOrRange']['value']['amount']
        price_to_pay = products[i]['product']['entity']['buyingOptions'][0]['price']['entity']['priceToPay']['moneyValueOrRange']['value']['amount']
        savings = products[i]['product']['entity']['buyingOptions'][0]['price']['entity']['savings']['percentage']['displayString']

The above code first parses the JSON string using json.loads().

Notice that the string is enclosed in square brackets before parsing. That’s because the matched text contains three comma-separated dict objects, and json.loads() can parse only a single JSON value. Enclosing them in square brackets turns them into a single list.
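To make the bracket trick concrete, here is a minimal sketch that runs the same pattern on a made-up, heavily shortened stand-in for the script text. The sample string and its second and third objects are assumptions for illustration only; the real payload is far larger.

```python
import json
import re

# Made-up stand-in for the script text (assumption, not real Amazon output).
sample_script = (
    "assets.mountWidget('slot-14', "
    '{"prefetchedData": {"entity": {"rankedPromotions": []}}}, '
    '{"second": 2}, {"third": 3})'
)

pattern = r"assets\.mountWidget\('slot-14', (\{.+\})\)"
json_string = re.findall(pattern, sample_script)[0]

# The capture holds several comma-separated objects, which is not valid JSON alone.
try:
    json.loads(json_string)
    parsed_alone = True
except json.JSONDecodeError:
    parsed_alone = False

# Wrapping the capture in square brackets turns it into one JSON array.
json_data = json.loads(f"[{json_string}]")

print(parsed_alone)        # False
print(len(json_data))      # 3
print(list(json_data[0]))  # ['prefetchedData']
```

The first element of the resulting list is the object that holds the deals, which is why the tutorial code reads json_data[0].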

The code then reads the value of the key ‘rankedPromotions,’ which contains all the available deals. It iterates through them and gets the required data points, including the savings and the URL. The deal name is derived from the slug in the product URL.
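Long chains of keys are easy to mistype. As an optional alternative to the try-except approach, here is a minimal sketch of a hypothetical helper, dig(), that walks nested dicts and lists and returns a default when any step is missing. The helper and the trimmed sample entry are assumptions for illustration; only the key names come from the tutorial code.

```python
from typing import Any


def dig(data: Any, *keys: Any, default: Any = None) -> Any:
    """Follow keys/indexes into nested dicts and lists; return default on a miss."""
    current = data
    for key in keys:
        try:
            current = current[key]
        except (KeyError, IndexError, TypeError):
            return default
    return current


# Made-up, heavily trimmed deal entry that reuses the tutorial's key names.
sample = {
    "product": {
        "entity": {
            "asin": "B000000000",
            "buyingOptions": [
                {"price": {"entity": {"priceToPay": {
                    "moneyValueOrRange": {"value": {"amount": "9.99"}}}}}}
            ],
        }
    }
}

price_path = ("product", "entity", "buyingOptions", 0, "price", "entity")
asin = dig(sample, "product", "entity", "asin")
price = dig(sample, *price_path, "priceToPay", "moneyValueOrRange", "value", "amount")
savings = dig(sample, *price_path, "savings", "percentage", "displayString")

print(asin, price, savings)  # B000000000 9.99 None
```

With a helper like this, a deal that lacks one field can still be stored with the fields it does have, instead of being skipped entirely.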

Finally, the code below stores the required data in the dict passed to the function, with the ASIN as the key. This ensures that the same deal is not stored twice. The function does not return anything; it updates the dict in place.

        deals[asin] = {
            "Name":name,
            "URL":url,
            'Base Price':base_price,
            'Price to Pay':price_to_pay,
            'Savings': savings
        }
    except Exception as e:
        continue

Send Requests to Amazon

get_deals() accepts a dict to store extracted deals and a BeautifulSoup object.

This object is the parsed HTML code of Amazon’s Today’s Deals page. It contains a JSON string inside a script tag that holds the deals you need.

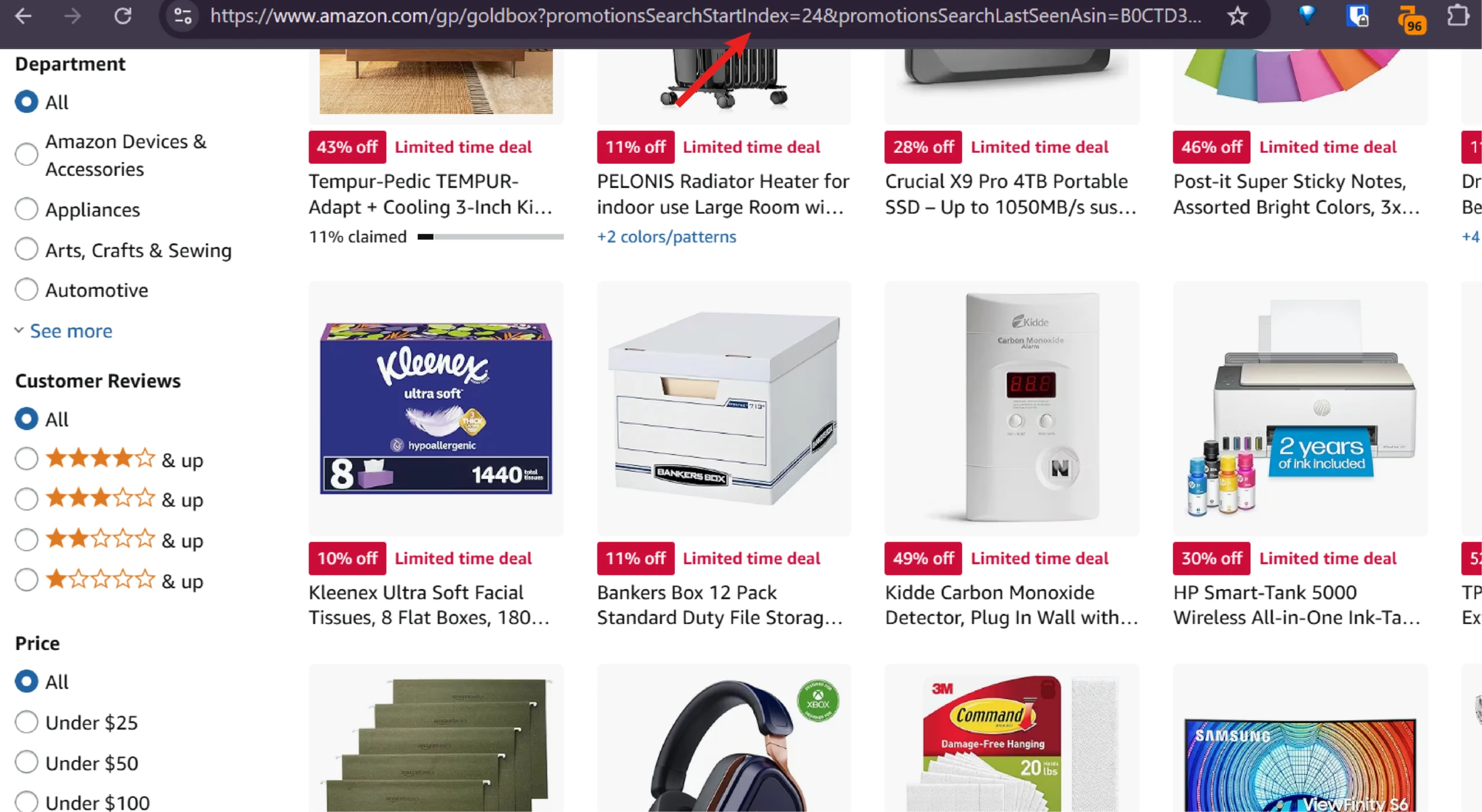

However, this string holds only some of the deals, and which deals it holds depends on the start index in the request URL. This index tells the server to include a fixed number of deals in the JSON string, starting from a particular deal.

This means you need to make multiple requests with varying start indexes to get all the deals on the page.

And that’s what this code does.

Here, a loop makes a series of GET requests, and in each iteration, the start index is 7 higher than in the previous iteration. The number 7 was chosen because, when you scroll the deals page, you can see the start index increasing by 5 to 9 at a time.
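As a quick illustration of the start indexes, here is a minimal sketch that only builds the request URLs without sending anything. It uses the step of 7 from the script’s 7*i expression; build_urls() and its parameters are hypothetical names introduced for this example.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.amazon.com/gp/goldbox"  # URL used in this tutorial


def build_urls(step: int = 7, pages: int = 30) -> list:
    """Return one deals-page URL per iteration, each starting `step` deals later."""
    return [
        f"{BASE_URL}?{urlencode({'promotionsSearchStartIndex': step * i})}"
        for i in range(pages)
    ]


urls = build_urls()
print(urls[0])    # https://www.amazon.com/gp/goldbox?promotionsSearchStartIndex=0
print(urls[1])    # https://www.amazon.com/gp/goldbox?promotionsSearchStartIndex=7
print(len(urls))  # 30
```

If two consecutive requests happen to return some of the same deals, the ASIN-keyed dict absorbs the duplicates, so a step on the small side costs extra requests rather than duplicate entries.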

The loop runs for a total of 30 iterations, and in each iteration, it:

- Records the number of deals in the dict that you create before the loop begins

- Makes a request to the URL with a start index equal to 7 times the loop counter

- Pauses the script execution for 2-4 seconds

- Calls get_deals() that extracts the available deals and stores them in the dict mentioned previously

- Calculates the new number of deals inside the dict

- Breaks the loop if the previous number of deals is equal to the updated number of deals.

for i in range(30):
    previous_deals = len(deals)
    response = requests.get(f'https://www.amazon.com/gp/goldbox?promotionsSearchStartIndex={7*i}',headers=headers)
    sleep(randint(2,4))
    soup = BeautifulSoup(response.text,'lxml')
    get_deals(deals,soup)
    updated_deals = len(deals)
    if previous_deals == updated_deals:
        print("All deals extracted")
        break

Store the Data

Finally, store the data in a JSON file using json.dump().

with open('deals.json','w',encoding='utf-8') as f:
    json.dump(deals,f,ensure_ascii=False,indent=4)
print(len(deals))

The results of Amazon deal scraping will look like this:

"B0DSQZWBL6": {
    "Name": "SAMSUNG Smartphone Unlocked Processor Silverblue",
    "URL": "https://amazon.com/SAMSUNG-Smartphone-Unlocked-Processor-Silverblue/dp/B0DSQZWBL6",
    "Base Price": "1499.99",
    "Price to Pay": "1299.99",
    "Savings": "13%"
}

Here’s the complete code:

import requests, re, json
from bs4 import BeautifulSoup
from time import sleep
from random import randint

def get_deals(deals,soup):
    # Find the script tag that holds the deals; it contains discount strings like '% off'
    scripts = soup.find_all('script')
    reqScript = ''
    for script in scripts:
        if '% off' in script.text:
            reqScript = script.string
    # Pull the JSON arguments out of the mountWidget() call
    json_string = re.findall(r"assets\.mountWidget\('slot-14', (\{.+\})\)",reqScript)[0]
    # Wrap the comma-separated objects in brackets so they parse as one list
    json_data = json.loads(f'[{json_string}]')
    products = json_data[0]['prefetchedData']['entity']['rankedPromotions']
    for i in range(len(products)):
        try:
            asin = products[i]['product']['entity']['asin']
            relative_url = products[i]['product']['entity']['links']['entity']['viewOnAmazon']['url']
            url = 'https://amazon.com'+ relative_url
            # The deal name is taken from the URL slug
            name = relative_url.split('/')[1].replace('-',' ')
            base_price = products[i]['product']['entity']['buyingOptions'][0]['price']['entity']['basisPrice']['moneyValueOrRange']['value']['amount']
            price_to_pay = products[i]['product']['entity']['buyingOptions'][0]['price']['entity']['priceToPay']['moneyValueOrRange']['value']['amount']
            savings = products[i]['product']['entity']['buyingOptions'][0]['price']['entity']['savings']['percentage']['displayString']
            # Keying by ASIN prevents storing the same deal twice
            deals[asin] = {
                "Name":name,
                "URL":url,
                'Base Price':base_price,
                'Price to Pay':price_to_pay,
                'Savings': savings
            }
        except Exception as e:
            # Skip deals that are missing any of the expected keys
            continue

if __name__ == '__main__':
    deals = {}
    headers = {
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,"
                  "*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
        "accept-language": "en-GB;q=0.9,en-US;q=0.8,en;q=0.7",
        "dpr": "1",
        "sec-fetch-dest": "document",
        "sec-fetch-mode": "navigate",
        "sec-fetch-site": "none",
        "sec-fetch-user": "?1",
        "upgrade-insecure-requests": "1",
        "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                      "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36",
    }
    for i in range(30):
        previous_deals = len(deals)
        response = requests.get(f'https://www.amazon.com/gp/goldbox?promotionsSearchStartIndex={7*i}',headers=headers)
        sleep(randint(2,4))
        soup = BeautifulSoup(response.text,'lxml')
        get_deals(deals,soup)
        updated_deals = len(deals)
        # Stop when a request adds no new deals
        if previous_deals == updated_deals:
            print("All deals extracted")
            break
    with open('deals.json','w',encoding='utf-8') as f:
        json.dump(deals,f,ensure_ascii=False,indent=4)
    print(len(deals))

Monitor Amazon Deals Over Time

To monitor the deals, schedule the script to run periodically using:

- Cron jobs (Linux/macOS) or Windows Task Scheduler, which can trigger your script at fixed intervals (hourly, daily, etc.)

- Cloud services like AWS Lambda or Azure Functions, which remove the need for a personal server

- Scraping pipelines built with Apache Airflow or similar scheduling software, which can routinely run your Python script
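Scheduling only collects snapshots; to actually monitor deals, you also need to compare one run with the next. Here is a minimal sketch of that comparison, assuming each run produces a dict in the same shape as deals.json. The function compare_snapshots() and the sample data are hypothetical and exist only for illustration.

```python
def compare_snapshots(old: dict, new: dict) -> dict:
    """Summarize what changed between two {asin: deal} dicts."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    price_changed = sorted(
        asin
        for asin in set(old) & set(new)
        if old[asin]["Price to Pay"] != new[asin]["Price to Pay"]
    )
    return {"added": added, "removed": removed, "price_changed": price_changed}


# Made-up snapshots from two consecutive runs.
yesterday = {"A1": {"Price to Pay": "10.00"}, "A2": {"Price to Pay": "20.00"}}
today = {"A2": {"Price to Pay": "18.00"}, "A3": {"Price to Pay": "5.00"}}

changes = compare_snapshots(yesterday, today)
print(changes)
# {'added': ['A3'], 'removed': ['A1'], 'price_changed': ['A2']}
```

In practice, you could save each run to its own file and load two of them with json.load() before calling the function.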

Code Limitations

- Amazon’s Page Layout Changes: Expect the site’s HTML to evolve. If your scraper fails to find the JSON string, that’s your first clue something changed.

- Changes in JSON structure: Even if your scraper found the JSON string, its structure could have changed, meaning it won’t be able to extract the details.

- Anti-scraping measures: Amazon might block your scraper or even your IP address if you don’t use techniques like throttling and IP rotation.
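As one possible form of throttling, here is a minimal sketch of a retry loop with exponential backoff. The function fetch_with_backoff(), its parameters, and the stand-in fetcher are assumptions for illustration; in a real run, the fetch argument could be a small wrapper around requests.get() that returns None when the response looks blocked. IP rotation is not covered here.

```python
import random
import time
from typing import Callable, Optional


def fetch_with_backoff(
    fetch: Callable[[str], Optional[str]],
    url: str,
    retries: int = 4,
    base_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Call fetch(url) until it returns HTML, waiting longer after each failure."""
    for attempt in range(retries):
        html = fetch(url)
        if html is not None:
            return html
        # Exponential backoff plus up to one second of random jitter.
        sleep(base_delay * 2 ** attempt + random.uniform(0, 1))
    return None


# Stand-in fetcher that fails twice before succeeding (no network involved).
attempts = {"count": 0}


def flaky_fetch(url: str) -> Optional[str]:
    attempts["count"] += 1
    return "<html>ok</html>" if attempts["count"] >= 3 else None


waits = []  # record the delays instead of actually sleeping
result = fetch_with_backoff(flaky_fetch, "https://example.com", sleep=waits.append)
print(result, len(waits))  # <html>ok</html> 2
```

Passing the sleep function as a parameter keeps the example fast to run and easy to check; the default is the real time.sleep().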

Conclusion

Scraping Amazon deals lets you track daily discounts. Extract essential data points (name, prices, savings, and URL) periodically to find the items that offer the most significant savings. You can use Python’s Requests and Beautiful Soup libraries to do so.

However, you have to maintain the code yourself and deal with anti-scraping measures that will be more apparent in large-scale scraping.

ScrapeHero’s web scraping service can help you out. We can build enterprise-grade scrapers that handle these obstacles, so you don’t have to. Our services also cover complete data pipelines, including robotic process automation and AI/ML solutions.