A website’s robots.txt file tells you which pages a scraper may access. Reading it manually and hard-coding its rules is inefficient, but you can parse robots.txt programmatically and let your scraper check its permissions automatically.

This article discusses how to respect robots.txt without sacrificing web-scraping efficiency.

Parsing Robots.txt Using Urllib

A popular way to parse robots.txt for web scraping is to use the RobotFileParser class from Python’s urllib.robotparser module:

1. Import RobotFileParser.

```python
from urllib.robotparser import RobotFileParser
```

2. Create a RobotFileParser object.

```python
parser = RobotFileParser()
```

3. Pass the absolute URL of the robots.txt file to the object.

```python
parser.set_url('https://example.com/robots.txt')
```

4. Use the read() method to fetch and parse the file.

```python
parser.read()
```

Now you can check whether a particular path is accessible using the can_fetch() method, which returns True or False.

```python
parser.can_fetch('Googlebot', '/path/to/check')
```

You can also write custom code to parse the robots.txt file, but first you need to understand its syntax.
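The steps above can be combined into one script. The minimal sketch below runs without network access. It assumes a made-up robots.txt (the ROBOTS_TXT string) and passes its lines to parse(). RobotFileParser provides parse() as an alternative to read() for when you already have the file’s text.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, so the example runs without network access
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: scraping-bot
Disallow: /

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() accepts an iterable of lines instead of downloading the file like read()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot has no group of its own, so the '*' group applies
print(parser.can_fetch("Googlebot", "/blog/post-1"))
print(parser.can_fetch("Googlebot", "/private/data"))
# scraping-bot has its own group, which disallows the whole site
print(parser.can_fetch("scraping-bot", "/blog/post-1"))
# site_maps() (Python 3.8+) returns the Sitemap URLs, or None if there are none
print(parser.site_maps())
```

Keep in mind that urllib.robotparser treats each rule as a plain path prefix and applies the first matching rule of a group, in file order. It does not expand ‘*’ or ‘$’ inside paths. For sites that rely on wildcards or on longest-match precedence, custom code gives you more control.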

The Syntax of Robots.txt

Generally, robots.txt files contain these fields:

- user-agent: Name of the bot

- allow: The path of the page that the bot can access

- disallow: The path of the page that the bot is not allowed to access

- sitemap: The sitemap’s complete URL

user-agent

This field specifies a bot, and all the rules below it apply to that particular bot. Crawlers match their names against this value case-insensitively (Googlebot and googlebot are the same bot), but the paths in allow and disallow rules are case-sensitive.

The user agent is essentially the bot’s name. For example, Google’s crawlers identify themselves as Googlebot.

You may also find the wildcard ‘*’ instead of a name, which means the rules following the user-agent line apply to all bots.

So if your bot’s name is ‘scraping-bot,’ it follows the rules under ‘user-agent: scraping-bot’. The rules under ‘user-agent: *’ apply only if no group names your bot.
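The fallback logic takes only a few lines. The sketch below assumes a hypothetical rules dict that maps lowercase user-agent names to their rule groups. select_group() is an illustrative helper, not part of any library.

```python
def select_group(rules, user_agent):
    """Return the rule group for user_agent, falling back to the '*' group.

    rules is a hypothetical dict mapping lowercase user-agent names to rule groups.
    """
    # User-agent names are compared case-insensitively
    agent = user_agent.lower()
    if agent in rules:
        # A group that names the bot replaces the '*' group entirely
        return rules[agent]
    # Otherwise use the '*' group, or no rules at all if it is missing
    return rules.get("*", {})


rules = {
    "*": {"disallow": ["/private/"]},
    "scraping-bot": {"disallow": ["/search"]},
}
print(select_group(rules, "Scraping-Bot"))
print(select_group(rules, "other-bot"))
```

The scraping-bot group replaces the ‘*’ group. So in this example scraping-bot may crawl /private/, while other bots may not.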

allow

This field lists the pages your bot can access. Its value can be the path of a file or one of these patterns:

- ‘/’: The entire website

- ‘/$’: Only the root URL (the homepage)

- ‘/something’: Paths starting with ‘/something’

- ‘/somefolder/’: Anything inside ‘/somefolder/’

- ‘*.js’: Paths that contain ‘.js’

- ‘*.js$’: Paths that end with ‘.js’

- ‘/something*.js’: Paths that start with ‘/something’ and contain ‘.js’ later on

Each allow line contains one path or pattern, but a file may have several allow lines.
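The sketch below shows how these patterns behave. It converts each pattern into a regular expression and tests it against a few sample paths. The helper pattern_matches() and the sample paths are illustrative assumptions. The helper treats every rule as a prefix match, expands ‘*’ to any sequence of characters, and treats a trailing ‘$’ as the end of the path.

```python
import re


def pattern_matches(pattern, path):
    """Return True if a robots.txt path pattern matches the given path."""
    # A trailing '$' means the path must end exactly where the pattern ends
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape regex metacharacters, then turn the escaped '*' back into '.*'
    regex = re.escape(pattern).replace(r"\*", ".*")
    if anchored:
        regex += "$"
    # re.match() anchors only at the start, so unanchored patterns act as prefixes
    return re.match(regex, path) is not None


examples = [
    ("/", "/any/page.html"),
    ("/$", "/"),
    ("/$", "/about"),
    ("/something", "/something-else"),
    ("/somefolder/", "/somefolder/page.html"),
    ("/somefolder/", "/somefolder"),
    ("*.js", "/static/app.js?v=2"),
    ("*.js$", "/static/app.js?v=2"),
    ("/something*.js", "/something/lib/app.js"),
]
for pattern, path in examples:
    print(f"{pattern!r:18} {path!r:26} {pattern_matches(pattern, path)}")
```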

disallow

Like allow lines, disallow lines contain paths or patterns. However, these point to the pages your scraper is not permitted to access.

sitemap

This field has the absolute URL of the sitemap.

Note: Both allow and disallow fields use relative URLs, while the sitemap needs an absolute URL.
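Allow and disallow values are paths relative to the site root. A scraper that starts from full page URLs therefore has to split each URL into the robots.txt location and the path to check. The minimal sketch below uses the standard urllib.parse module. robots_url_and_path() is a hypothetical helper, and it assumes rules are matched against the path plus any query string.

```python
from urllib.parse import urljoin, urlsplit


def robots_url_and_path(page_url):
    """Split a page URL into its site's robots.txt URL and the path to check."""
    parts = urlsplit(page_url)
    # robots.txt always lives at the root of the host
    robots_url = urljoin(page_url, "/robots.txt")
    # Rules are matched against the path, including the query string if present
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    return robots_url, path


print(robots_url_and_path("https://example.com/blog/post-1?page=2"))
```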

The general syntax of a robots.txt file is:

```
user-agent: *
disallow: /path/your/scraper/is/not/allowed/to/crawl

user-agent: botname2
allow: /path/your/scraper/is/allowed/to/crawl
disallow: /path/your/scraper/is/not/allowed/to/crawl

sitemap: https://example2.com/sitemap.xml
```

Parsing Robots.txt Using Custom Code

Now that you know the syntax of robots.txt, you can write custom code for ethical and efficient scraping. To match the rule patterns, you’ll need a regular-expression library like re.

Start by importing urllib.request and re. Both modules come with Python’s standard library.

```python
import re
import urllib.request
```

parse_robots_txt()

Now, define a function that accepts the text of a robots.txt file and returns its rules as a dict.

Inside the function, check whether robots_text is empty and return an empty dict if it is.

```python
if not robots_text:
    return {}
```

Next, define a dict to store the extracted rules.

```python
rules = {}
```

Initialize a variable that stores the current user agent. This variable tells you which user agent the allow or disallow lines apply to.

```python
current_user_agent = None
```

Split the text of the robots.txt file into lines so you can iterate through them and extract the rules.

```python
lines = robots_text.strip().split("\n")
```

In each iteration:

- Skip empty lines and comments, which start with ‘#’.

- Check if the line contains a colon (:), and if it does:

  - Split it into a key (the field name, lowercased) and a value.

  - If the key is ‘user-agent’, assign the value to current_user_agent.

  - If the key is ‘allow’ or ‘disallow’ and current_user_agent is set, append the value to that user agent’s list in the rules dict.

  - Otherwise (for example, for ‘sitemap’), store the key-value pair in a dict under the key ‘global’ in the rules dict.

```python
for line in lines:
    # Dropping comments (whole-line or trailing) and surrounding whitespace
    line = line.split("#", 1)[0].strip()

    # Skipping empty lines
    if not line:
        continue

    # Splitting into key-value pairs
    if ":" in line:
        key, value = map(str.strip, line.split(":", 1))
        key = key.lower()

        # Handling user agent
        if key == "user-agent":
            current_user_agent = value
            if current_user_agent not in rules:
                rules[current_user_agent] = {"allow": [], "disallow": []}

        # Handling allow/disallow; an empty value places no restriction
        elif key in ("allow", "disallow") and current_user_agent:
            if value:
                rules[current_user_agent][key].append(value)

        # Handling other fields, such as sitemap
        else:
            if "global" not in rules:
                rules["global"] = {}
            rules["global"][key] = value

return rules
```

The function gives you the rules, but it doesn’t tell you whether your scraper may access a particular path. So create a function, check_permission(), that accepts a rules dict, a user agent, and a path and returns a boolean value.

Before that, however, define two helper functions, match_rule() and check(), which check_permission() will use.

match_rule()

This function converts a rule pattern into a regular expression, matches it against a path, and returns a boolean value. check() iterates through the rules and calls match_rule() for each one.

```python
def match_rule(rule_pattern, path):
    """Convert robots.txt pattern to regex and check if it matches the path."""
    # Escaping special regex chars, then restoring * as '.*' and $ as an end anchor
    pattern = re.escape(rule_pattern).replace(r"\*", ".*").replace(r"\$", "$")
    if not pattern.endswith("$"):
        pattern += ".*"
    return bool(re.match(pattern, path))
```

check()

The function starts by collecting the disallow rules that match the path. If none match, it returns True, because no rule stops your bot from accessing that path.

```python
disallowed = [(p, len(p)) for p in agent_rules.get("disallow", []) if match_rule(p, path)]
```

If any disallow rules match, the function picks the longest one. You need the longest matching rule because several rules may match the same path:

- /something

- /something*.pdf

- /something*.pdf?before=

The longest rule takes precedence. That means if there is a shorter disallow rule and a longer allow rule, your scraper will follow the allow rule.

So after getting the longest matching disallow rule, get the longest matching allow rule and compare their lengths.

If the allow rule is at least as long as the disallow rule, return True; otherwise, return False.
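Here is a small worked example of that precedence rule. It is a simplified sketch, separate from check(). The hypothetical is_allowed() function treats rules as plain prefixes (no ‘*’ or ‘$’) to keep the comparison easy to follow. Like the >= comparison in check(), it gives ties to allow.

```python
def is_allowed(allow_rules, disallow_rules, path):
    """Longest-match precedence for plain prefix rules (no * or $ support)."""
    # Length of the longest matching rule of each kind, or -1 if none matches
    longest_allow = max((len(r) for r in allow_rules if path.startswith(r)), default=-1)
    longest_disallow = max((len(r) for r in disallow_rules if path.startswith(r)), default=-1)
    # The longer (more specific) rule wins; a tie goes to allow
    return longest_allow >= longest_disallow


allow_rules = ["/docs/public/"]
disallow_rules = ["/docs/"]
print(is_allowed(allow_rules, disallow_rules, "/docs/public/intro.html"))
print(is_allowed(allow_rules, disallow_rules, "/docs/internal.html"))
print(is_allowed(allow_rules, disallow_rules, "/blog/"))
```

The first path matches both rules, but the 13-character allow rule beats the 6-character disallow rule. The second path matches only the disallow rule. The third path matches neither, so it is allowed.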

```python
if disallowed:
    longest_disallow = max(disallowed, key=lambda x: x[1])[0]
    allowed = [(p, len(p)) for p in agent_rules.get("allow", []) if match_rule(p, path)]
    if allowed:
        longest_allow = max(allowed, key=lambda x: x[1])[0]
        # On a tie, the allow rule wins
        if len(longest_allow) >= len(longest_disallow):
            return True
    return False

# No disallow rule matches the path
return True
```

check_permission()

Create a function check_permission() that accepts the rules dict, a user-agent name, and a path and returns a boolean value.

Start by checking whether the rules dict is empty. If there are no rules, that means all pages are accessible.

if not rules or not isinstance(rules, dict):

return True # No rules means everything is allowedNext, normalize the path to ensure that it starts with ‘/’

if not path.startswith("/"):

path = "/" + pathFinally, check whether the rules include your user_agent or the wild card ‘*’, and call check() to determine the access.

if user_agent in rules:

agent_rules = rules[user_agent]

# Check disallow rules

return check(agent_rules,path)

# Fall back to wildcard (*) rules

if "*" in rules:

agent_rules = rules["*"]

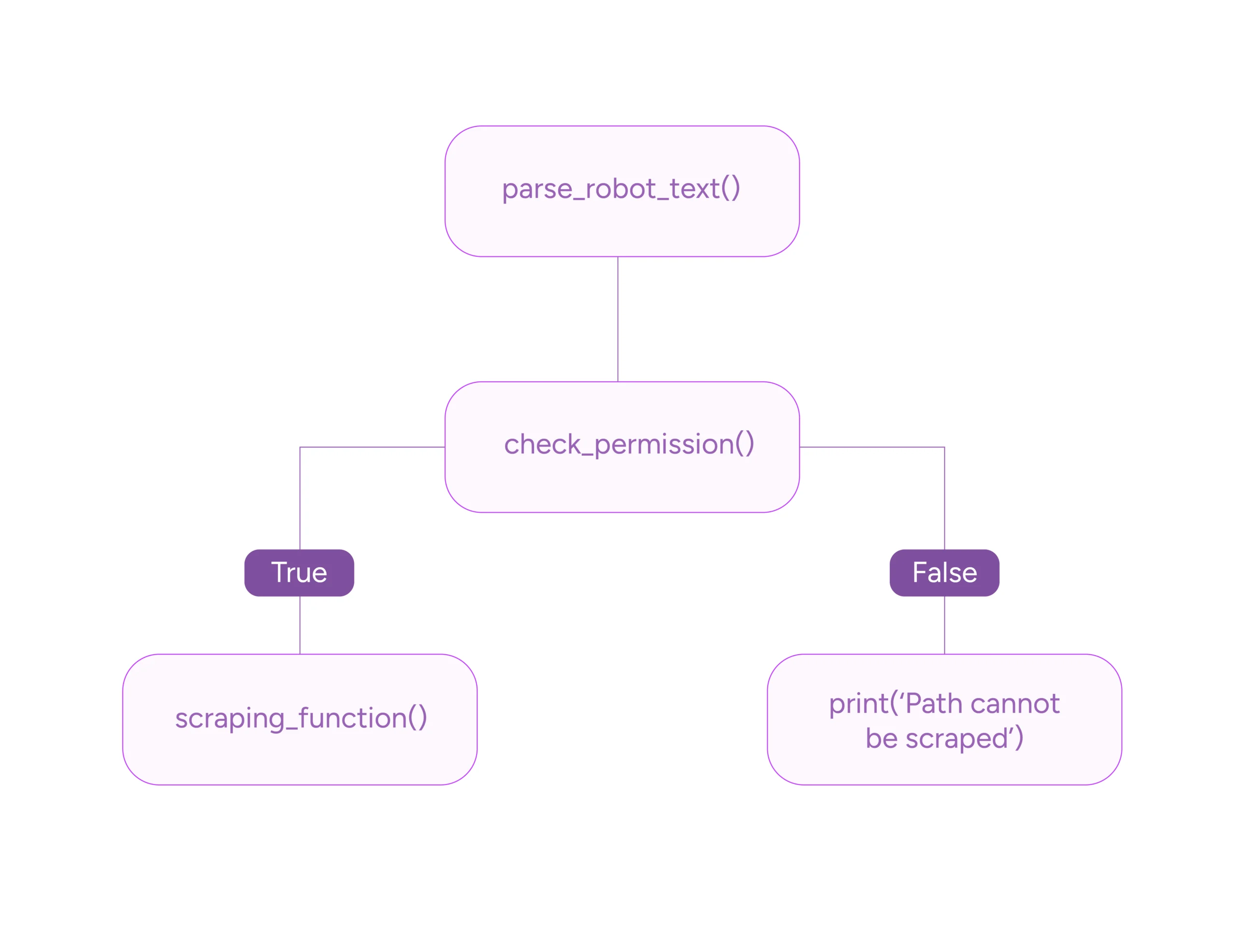

return check(agent_rules,path)You can now use these functions to programmatically determine whether or not to scrape. For instance, if you have a scraping function, you can conditionally call it depending on the output of the check permission.

```python
robots_txt = urllib.request.urlopen("https://tumblr.com/robots.txt").read().decode("utf-8")
rules = parse_robots_txt(robots_txt)
permission = check_permission(rules, "Slurp", "/tagged/cat")

# scraping_function() stands for your own scraper
if permission:
    scraping_function()
else:
    print("The path can't be scraped")
```

Here’s a flowchart showing the process.

Here’s the complete code.

```python
import re
import urllib.request


def parse_robots_txt(robots_text):
    """Parse robots.txt content manually for demonstration."""
    if not robots_text:
        return {}

    rules = {}
    current_user_agent = None

    # Splitting into lines and processing each one
    lines = robots_text.strip().split("\n")

    for line in lines:
        # Dropping comments (whole-line or trailing) and surrounding whitespace
        line = line.split("#", 1)[0].strip()

        # Skipping empty lines
        if not line:
            continue

        # Splitting into key-value pairs
        if ":" in line:
            key, value = map(str.strip, line.split(":", 1))
            key = key.lower()

            # Handling User-agent
            if key == "user-agent":
                current_user_agent = value
                if current_user_agent not in rules:
                    rules[current_user_agent] = {"allow": [], "disallow": []}

            # Handling Allow/Disallow; an empty value places no restriction
            elif key in ("allow", "disallow") and current_user_agent:
                if value:
                    rules[current_user_agent][key].append(value)

            # Handling other fields, such as Sitemap
            else:
                if "global" not in rules:
                    rules["global"] = {}
                rules["global"][key] = value

    return rules


# defining match_rule()
def match_rule(rule_pattern, path):
    """Convert robots.txt pattern to regex and check if it matches the path."""
    # Escaping special regex chars, then restoring * as '.*' and $ as an end anchor
    pattern = re.escape(rule_pattern).replace(r"\*", ".*").replace(r"\$", "$")
    if not pattern.endswith("$"):
        pattern += ".*"
    return bool(re.match(pattern, path))


# defining check()
def check(agent_rules, path):
    """Apply the longest-match rule to one user agent's allow/disallow lists."""
    disallowed = [(p, len(p)) for p in agent_rules.get("disallow", []) if match_rule(p, path)]
    if disallowed:
        longest_disallow = max(disallowed, key=lambda x: x[1])[0]
        allowed = [(p, len(p)) for p in agent_rules.get("allow", []) if match_rule(p, path)]
        if allowed:
            longest_allow = max(allowed, key=lambda x: x[1])[0]
            # On a tie, the allow rule wins
            if len(longest_allow) >= len(longest_disallow):
                return True
        return False
    # No disallow rule matches the path
    return True


# defining check_permission()
def check_permission(rules, user_agent, path):
    """Check if a user agent can fetch a path, supporting * and $ in rules."""
    if not rules or not isinstance(rules, dict):
        return True  # No rules means everything is allowed

    # Normalizing path (ensure it starts with '/')
    if not path.startswith("/"):
        path = "/" + path

    # Checking for rules that name this user agent first (case-insensitive match)
    for agent_name, agent_rules in rules.items():
        if agent_name.lower() == user_agent.lower():
            return check(agent_rules, path)

    # Checking the rules for all user agents
    if "*" in rules:
        return check(rules["*"], path)

    # No group applies to this user agent, so the path is allowed
    return True


def scraping_function():
    """Placeholder: replace with your own scraping logic."""
    print("Scraping...")


if __name__ == "__main__":
    robots_txt = urllib.request.urlopen("https://example.com/robots.txt").read().decode("utf-8")
    rules = parse_robots_txt(robots_txt)
    permission = check_permission(rules, "Googlebot", "/path/to/check")

    if permission:
        scraping_function()
    else:
        print("The path can't be scraped")
```
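parse_robots_txt() has one limitation: it attaches each allow or disallow line only to the most recent user-agent line. RFC 9309 lets a group start with several user-agent lines that share the rules below them. With the code above, the earlier agents in such a group end up with empty rule lists. The sketch below is a hypothetical variant, parse_robots_txt_grouped(), that keeps a list of the current group’s agents instead. As assumptions of this sketch, it also lowercases agent names and ignores empty rule values.

```python
def parse_robots_txt_grouped(robots_text):
    """Variant of parse_robots_txt() in which consecutive user-agent lines share one group."""
    if not robots_text:
        return {}

    rules = {}
    current_agents = []     # user agents of the group currently being read
    reading_agents = False  # True while consecutive user-agent lines are being read

    for raw_line in robots_text.splitlines():
        # Drop comments (whole-line or trailing) and surrounding whitespace
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue  # skips blank and malformed lines
        key, value = map(str.strip, line.split(":", 1))
        key = key.lower()

        if key == "user-agent":
            if not reading_agents:
                current_agents = []  # a user-agent line after other fields starts a new group
            agent = value.lower()
            current_agents.append(agent)
            rules.setdefault(agent, {"allow": [], "disallow": []})
            reading_agents = True
        else:
            reading_agents = False
            if key in ("allow", "disallow"):
                if value:  # an empty value places no restriction
                    for agent in current_agents:
                        rules[agent][key].append(value)
            else:
                # Other fields, such as Sitemap, go under 'global' as in parse_robots_txt()
                rules.setdefault("global", {})[key] = value

    return rules


sample = """\
User-agent: bot-a
User-agent: bot-b
Disallow: /private/  # shared by both bots

User-agent: *
Allow: /
Disallow:
Sitemap: https://example.com/sitemap.xml
"""
print(parse_robots_txt_grouped(sample))
```

Its output has the same layout as parse_robots_txt(), except that agent names are stored in lowercase. Pass the user-agent name in lowercase when you look it up.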

Wrapping Up: Why Use a Web Scraping Service

In conclusion, parsing robots.txt programmatically lets you scrape responsibly without losing efficiency. You can use a module like urllib.robotparser or write custom code.

But you don’t have to worry about these technicalities if you only want data. Just use a web scraping service like ScrapeHero.

ScrapeHero is a web-scraping service capable of building enterprise-grade scrapers and crawlers. Our experts at ScrapeHero can take care of robots.txt and other scraping technicalities. In addition to data extraction, our services extend to building custom RPA and AI solutions.