OpenAI introduced GPTs in November 2023 in response to the growing demand for customizations on ChatGPT. GPTs are similar to ChatGPT, but you build them for a specific purpose. They can perform tasks automatically based on your preset instructions.

This tutorial shows you how to create a custom GPT for web scraping. You don’t need to know how to code; however, you must have a paid ChatGPT membership.

Difference Between a Custom GPT and ChatGPT

ChatGPT and GPTs share the same underlying architecture, and you can use either for web scraping. However, GPTs allow additional configurations. You can:

- Specify instructions for the GPT to follow whenever it receives a prompt

- Upload knowledge files that the GPT can reference for a specific topic

- Interact with external APIs

- Toggle web browsing, image generation, and code interpretation capabilities

In short, GPTs have features that help make prompts more efficient.
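GPTs reach external APIs through Actions, which are described with an OpenAPI schema entered on the Configure tab. The Python sketch below builds a minimal schema as a dictionary and prints it as JSON. The server URL `https://api.example.com`, the `/products` path, the `q` parameter, and the `searchProducts` operation are hypothetical placeholders, not a real service.

```python
import json


def build_action_schema(server_url: str) -> dict:
    """Return a minimal OpenAPI document describing one search endpoint.

    The path, parameter, and operationId are hypothetical placeholders.
    """
    return {
        "openapi": "3.1.0",
        "info": {"title": "Product Search (example)", "version": "1.0.0"},
        "servers": [{"url": server_url}],
        "paths": {
            "/products": {
                "get": {
                    "operationId": "searchProducts",
                    "summary": "Search products by keyword",
                    "parameters": [
                        {
                            "name": "q",
                            "in": "query",
                            "required": True,
                            "schema": {"type": "string"},
                        }
                    ],
                    "responses": {"200": {"description": "Matching products"}},
                }
            }
        },
    }


# Hypothetical server URL; replace it with an API you control.
schema = build_action_schema("https://api.example.com")
print(json.dumps(schema, indent=2))
```

The printed JSON is only a starting point; a real Action also needs an API that actually serves the described endpoint.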

How to Create a Custom GPT for Web Scraping

If you have a paid ChatGPT membership, you can perform the following steps to create a custom GPT for web scraping:

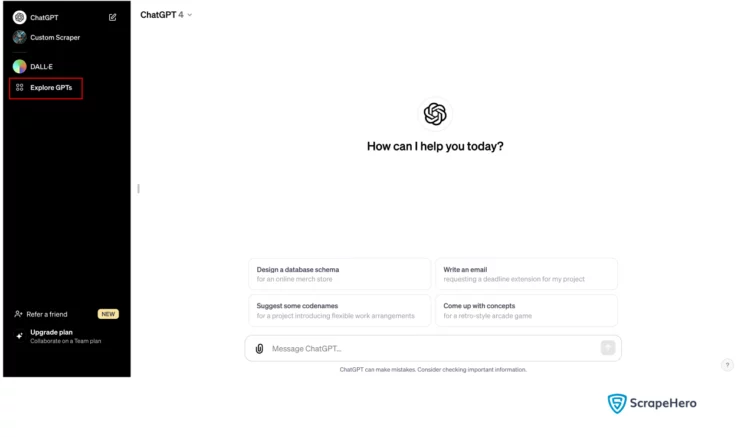

- Go to chat.openai.com and log in.

- Click the “Explore GPTs” option on the left pane to browse GPTs.

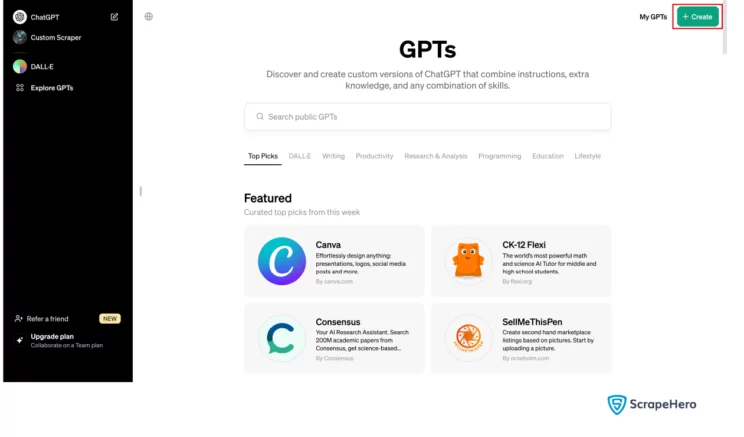

- Click the “+ Create” button on the top right corner.

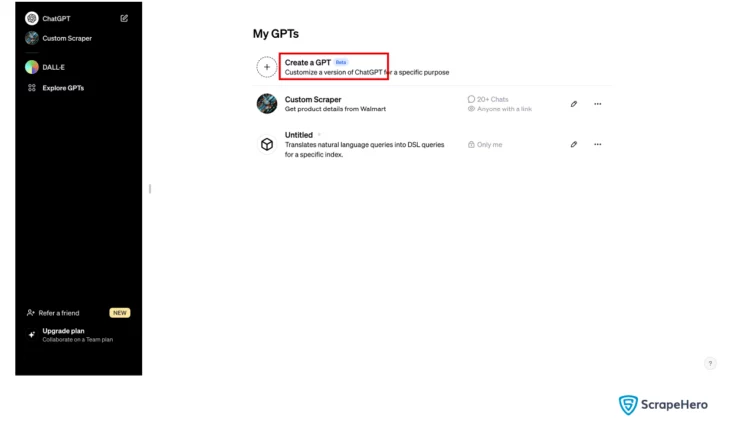

- Click on “Create a GPT”. You will reach a page with two panes. The left pane lets you configure the GPT; the right pane is for testing it.

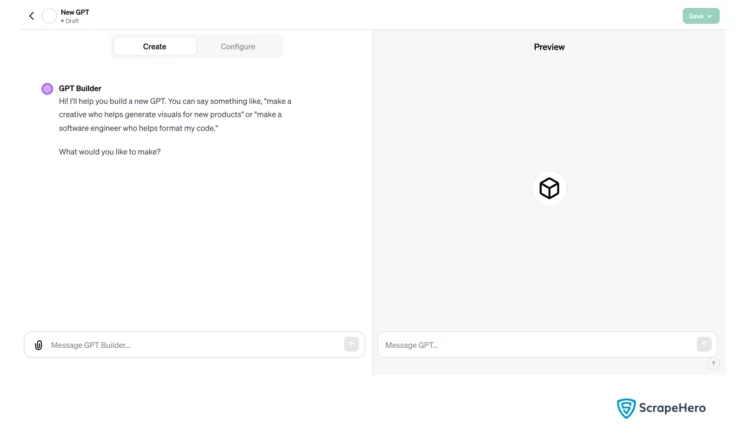

- Choose either “Create” or “Configure”:

- The “Create” option allows you to create a GPT via prompting.

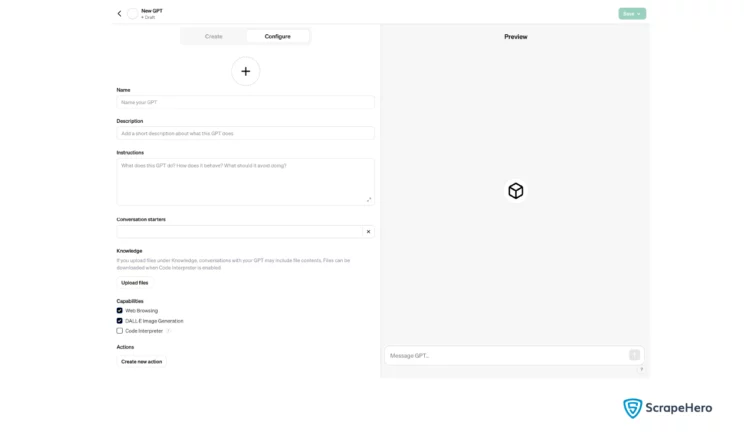

- The “Configure” option has fields where you can manually fill in the requirements, select capabilities, or upload knowledge files.

- Specify configurations and click the button on the top-right corner to save them.

Configure vs. Create Tab

As described above, there are two tabs for configuring the custom GPT. The Create tab is relatively straightforward; you only need to prompt the GPT builder to generate configurations. You can also set the logo and name of your custom GPT on the same tab.

The Configure tab gives you more control over the configuration. Switch to the Configure tab after you give prompts in the Create tab. You will see that the GPT builder has already filled in the instructions, but you can edit them.

Configurations for Scraping Product Details from Walmart

The following configurations create a custom GPT that can browse the web:

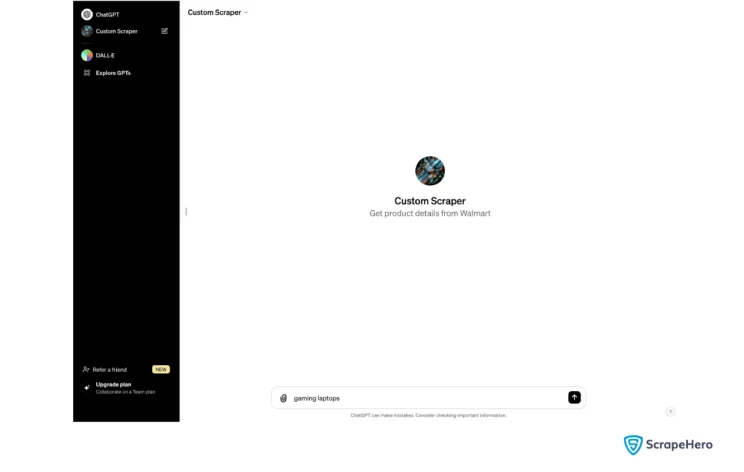

- Name: Custom Scraper

- Description: Get product details from Walmart

- Instructions: The GPT builder generated the following instructions.

- Custom Scraper is designed to assist users in searching for products on Walmart. When a user provides a product name, Custom Scraper will use its browser tool to search for the product on Walmart’s website. It will then create a downloadable CSV file containing details of the top 10 products found, including product URL and all product specifications. Custom Scraper is equipped with the Dall E, Python, and browser tools to perform these tasks efficiently.

- The GPT’s primary function is to facilitate the retrieval of product information from Walmart, ensuring that users receive accurate and organized data. It should not perform any actions outside of this scope, such as providing personal opinions, engaging in unrelated topics, or accessing websites other than Walmart for product information. Custom Scraper should focus on delivering precise and relevant product details in a structured format.

- In interactions, Custom Scraper should maintain a professional and informative tone, focusing solely on product-related inquiries. It should clarify any ambiguities in user requests related to product searches and ensure that the final output, the CSV file, is comprehensive and user-friendly.

- Capabilities:

- Web Browsing

- Code Interpretation

The above configurations use web browsing to find and retrieve information. However, you can also create a custom GPT that uses GPT-4 with vision to scrape data from screenshots.
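The CSV step in the instructions above is the kind of task the code interpreter handles with a few lines of Python. The following is a minimal sketch, assuming the browsing step has already produced a list of product dictionaries. The column names and the sample row are hypothetical placeholders, and the code the GPT actually generates may differ.

```python
import csv
import io

# Hypothetical column names; the GPT chooses its own based on what it finds.
FIELDS = ["name", "url", "price"]


def products_to_csv(products: list[dict], limit: int = 10) -> str:
    """Serialize up to `limit` products as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for product in products[:limit]:
        # Keep only the known columns; missing values become empty cells.
        writer.writerow({field: product.get(field, "") for field in FIELDS})
    return buffer.getvalue()


# Placeholder row for illustration only, not real Walmart data.
sample = [{"name": "Example Laptop", "url": "https://example.com/product/1", "price": "999.00"}]
csv_text = products_to_csv(sample)
print(csv_text)
```

The `limit` parameter mirrors the "top 10 products" wording in the instructions; any products beyond that are dropped.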

Use the Custom GPT for Web Scraping

Once created, your custom GPT will be visible on the left pane below ChatGPT. Click on it, and you will reach the chat screen.

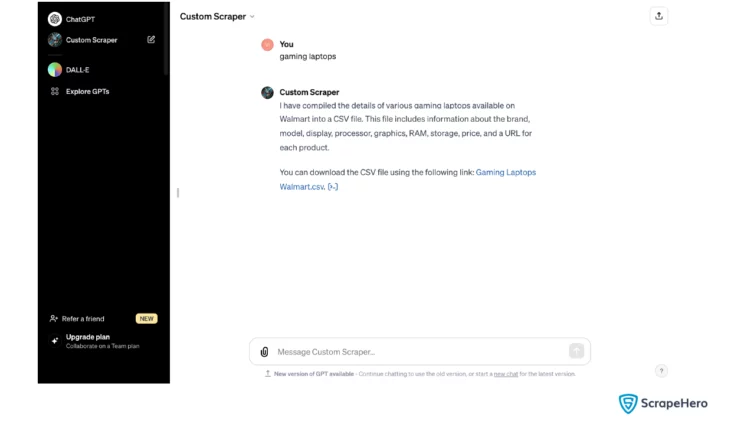

Here, you can enter the product name as a prompt. The image below shows the prompt “gaming laptops” and its result.

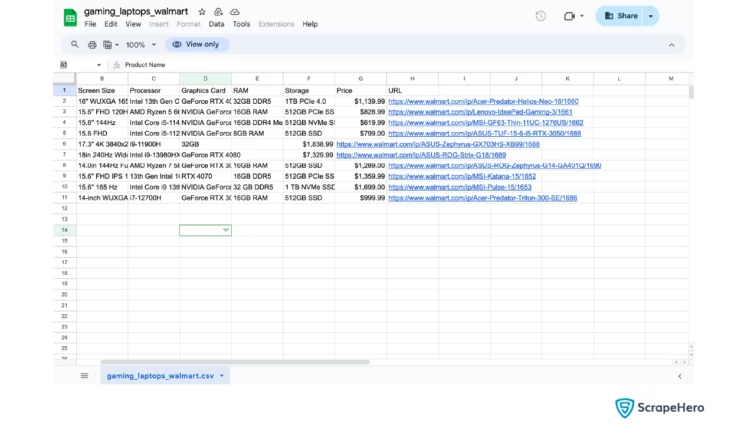

Below, you can see the downloaded CSV file.

Limitations of this Custom GPT for Web Scraping

The above custom GPT can scrape only a few products from Walmart. Its limitations are:

- When you scrape several products, it encounters errors, such as access issues.

- Even when the GPT executes well, it may only get a few product details.

- The GPT is also tedious to customize, as you may need several trials before you find appropriate instructions.

Therefore, this custom GPT is not suitable for large-scale data extraction.
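Because the GPT may return only some product details, it helps to check the downloaded CSV before relying on it. The following is a minimal sketch that counts empty cells per column, assuming the file has a header row. The column names and sample text are hypothetical placeholders.

```python
import csv
import io


def count_missing(csv_text: str) -> dict[str, int]:
    """Count empty cells per column in CSV text that has a header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = {name: 0 for name in (reader.fieldnames or [])}
    for row in reader:
        for name in missing:
            # Short rows yield None for absent cells, so treat None as empty.
            if not (row.get(name) or "").strip():
                missing[name] += 1
    return missing


# Placeholder CSV text for illustration only.
sample = "name,url,price\nItem A,https://example.com/a,10.00\nItem B,,\n"
report = count_missing(sample)
print(report)
```

To check a real download, read the file into a string first, for example with `open(path, newline="").read()`, and pass the result to `count_missing`.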

You can also build a GPT for web scraping using GPT-4 with vision. You can then upload a screenshot, and your GPT will extract the details without further prompts. However, this method is also impractical for large projects.

Read AI Web Scraping: Scope, Applications, and Limitations to learn more about the limitations of web scraping with AI.

Final Thoughts

You can create a custom GPT for web scraping, and building one is straightforward. You can either use the automated GPT builder or add the configurations manually. The GPT builder is fast, but you get more control if you configure the GPT manually.

However, custom GPTs for web scraping may not be reliable. Try the free ScrapeHero Walmart Scraper. It is an easy-to-use web scraper from ScrapeHero Cloud, suitable for large-scale projects. The scraper can get all product details from Walmart, such as Brand, ISBN, and GSTIN. It is a no-code scraper that can deliver data in CSV and JSON formats.

Further, since GPTs take a long time to customize, you can also try our web scraping services. ScrapeHero provides enterprise-grade web scraping services customized to your needs. Be it product monitoring, brand monitoring, or business intelligence, ScrapeHero has got you covered.
