Many websites deploy anti-bot technologies to prevent bots from scraping. But how do websites detect bots and block them? What technologies are used for bot detection when web scraping?

This article will answer your questions regarding bot detection. It covers the most commonly adopted bot protection techniques and how you can bypass these bot detection methods.

How Do Websites Detect and Prevent Bots From Scraping

Bots and humans can be distinguished based on their characteristics or their behavior. Websites, or the anti-scraping services websites employ, analyze the characteristics and behavior of visitors to distinguish the type of visitor.

These tools and products construct basic or detailed digital fingerprints based on the characteristics and interactions of these visitors with the website. This data is all compiled, and each visitor is assigned a likelihood of being a human or bot and either allowed to access the website or denied access.

This detection is done either as installed software or by service providers bundling this service into their CDN-type service or pure cloud-based subscription offerings that prevent bot traffic to a website before allowing access to anyone.

Where Can Websites Detect Scraper Bots?

The detection can happen at the client side (i.e., your browser running on your computer) or the server side (i.e., the web server or inline anti-bot technologies prevent bot traffic) or a combination of the two.

Web servers use different methods to identify and prevent bots from scraping. Some methods work by detecting the bots before they can get to the server, while others use services from the cloud. These cloud services can work in two ways: they either filter out the bots before they reach the website or they work together with the web server, using help from outside to detect scraper bots.

The problem is that this detection has false positives and ends up detecting and blocking regular people as bots or adding so much processing overhead that it makes the site slow and unusable. These technologies do come with costs (financial and technical) and have these trade-offs to consider.

Here are some of the areas where detection can occur:

- Server-side fingerprinting with behavior analysis

- Client-side or browser-side fingerprinting with behavior analysis

- A combination of both, spread across multiple domains and data centers

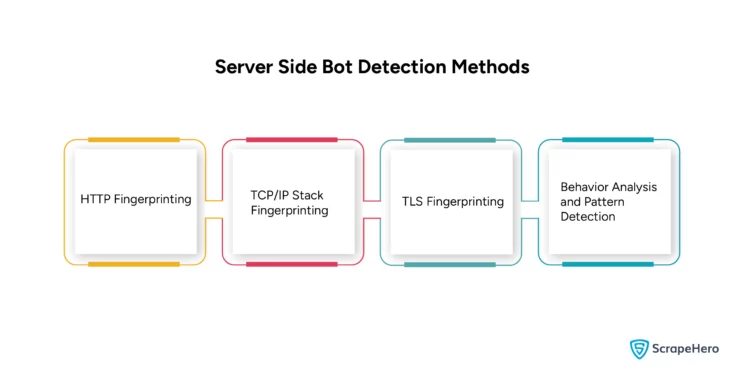

Server-Side Bot Detection

Server-side bot detection starts at the server level, which is on the web server of the website or devices of cloud-based services. There are a few types of fingerprinting methods that are usually used in combination to detect scraper bots from the server side.

Note that fingerprinting has a detrimental impact on global privacy because it allows seamless tracking of individuals across the Internet.

Various server-side bot detection methods include:

- HTTP Fingerprinting

- TCP/IP stack fingerprinting

- TLS Fingerprinting

- Behavior analysis and pattern detection

HTTP Fingerprinting

HTTP fingerprinting is done by analyzing the traffic a visitor to a website sends to the web server. Almost all of this information is accessible to the web server, and some of it can also be seen in the web server logs. It can reveal basic information about a visitor to the site, such as the

-

User-Agent

User-Agent gives information about the kind of browser, i.e., whether it is Chrome, Firefox, Edge, Safari, etc., and its version. -

Request Headers

Request headers such as referer, cookie, which the encoder accepts, whether they accept gzip compression, etc. -

The Order of the Headers

It is the sequence in which headers are sent that can reveal information about the browser’s configuration or operational environment. -

The IP Address

The IP address of the visitor who made the request from or finally accessed the web server (in case the visitor is using an ISP-based NAT address or proxy server).

HTTP fingerprinting is important for understanding visitor behavior, detecting bots, and enhancing security. It identifies and mitigates potential threats based on the unique digital signatures of web traffic.

TCP/IP Stack Fingerprinting

TCP/IP stack fingerprinting is the data a visitor sends to servers that reaches the server as packets over TCP/IP. The TCP stack fingerprint has details such as:

- Initial packet size (16 bits)

- Initial TTL (8 bits)

- Window size (16 bits)

- Max segment size (16 bits)

- Window scaling value (8 bits)

- “don’t fragment” flag (1 bit)

- “sackOK” flag (1 bit)

- “nop” flag (1 bit)

These variables are combined to form a digital signature of the visitor’s machine that has the potential to uniquely identify a visitor—bot or human.

Open-source tools such as p0f can tell if a User-Agent is being forged. It can even identify whether a visitor to a website is behind a NAT network or has a direct connection to the internet based on the browser’s settings, such as language preferences, etc.

TCP/IP stack fingerprinting is essential as it analyzes the details to figure out more about the computers trying to connect to or communicate with your system. It helps with security, managing network access, and detecting potential intruders.

TLS Fingerprinting

When a site is accessed securely over the HTTPS protocol, the web browser and a web server generate a TLS fingerprint during an SSL handshake. Most client User-Agents, such as different browsers and applications such as Dropbox, Skype, etc., will initiate an SSL handshake request in a unique way that allows for that access to be fingerprinted.

The open-source TLS fingerprinting library JA3 gathers the decimal values of the bytes for the fields mentioned in the client hello packet during an SSL handshake:

- SSL version

- Accepted ciphers

- List of extensions

- Elliptic curves

- Elliptic curve formats

It then combines those values together in order, using a “,” to delimit each field and a “-” to delimit each value in each field. These strings are then MD5 hashed to produce an easily consumable and shareable 32-character fingerprint. This is the JA3 SSL client fingerprint. MD5 hashes also have the benefit of speed in generating and comparing values and are very unique hashes.

TLS fingerprinting is used for various purposes, such as improving security by identifying and blocking connections from known malicious software or tracking users across websites without using traditional cookies. However, like browser fingerprinting, it also raises privacy concerns because it can be done without users’ knowledge or consent.

Behavior Analysis and Pattern Detection

Once a unique fingerprint is constructed by combining all the above, bot detection tools can trace a visitor’s behavior in a website or across many websites – if they use the same bot detection provider. They perform behavioral analysis on the browsing activity, which is usually

- The pages visited

- The order of pages visited

- Cross matching HTTP referer with the previous page visited

- The number of requests made to the website

- The frequency of requests to the website

This allows the anti-bot products to decide if a visitor is a bot or human based on the data they have seen previously and in some cases sends a problem such as a CAPTCHA to be solved by the visitor.

If the visitor solves the CAPTCHA – the visitor might be recognized as a user and if the CAPTCHA fails gets flagged as a bot and blocked.

Now, any requests that come from these fingerprints – HTTP, TCP, TLS, IP Address etc to any of the websites that use the same bot detection service will challenge visitors to prove themselves as a human.

The visitor or their IP address is usually kept in a blacklist for a certain period of time, and then removed from it if they do not see any more bot activity. Sometimes, persistently abused IP addresses are permanently added to global IP blocklists and denied entry to many sites.

It is relatively easier to bypass server side bot detection if web scrapers are fine tuned to work with the websites being scraped.

Note: The best way to understand every aspect of the data that moves between a client and a server as part of a web request is to use a proxy server in the middle, such as MITM, or look at the network tab of a web browser’s developer toolbar (accessed by F12 in most cases).

For deeper analysis beyond HTTP and lower down the TCP/IP stack, you can also use Wireshark to check the actual packets, headers, and all the bits that go back and forth between the browser and the website. Any or all of those bits can be used to identify a visitor to the website and consequently help fingerprint them.

Client-Side Bot Detection (Browser-Side Bot Detection)

Almost all of the bot detection services use a combination of browser-side detection and server-side detection to accurately block bots.

The first thing that happens when a site starts client-side detection is that all scrapers that are not real browsers will get blocked immediately.

The simplest check is if the client (web browser) can render a block of JavaScript. If it doesn’t, the detection pretty much flags the visitor as a bot.

While it is possible to block running JavaScript in the browser, most of the Internet sites will be unusable in such a scenario, and as a result, most browsers will have JavaScript enabled.

Once this happens, a real browser is necessary in most cases to scrape the data. There are libraries to automatically control browsers, such as

- Selenium

- Puppeteer and Pyppeteer

- Playwright

Browser-side bot detection usually involves constructing a fingerprint by accessing a wide variety of system-level information through a browser. This is usually invoked through a tracker JavaScript file that executes the detection code in the browser and sends back information about the browser and the machine running the browser for further analysis.

As an example, the navigator object of a browser exposes a lot of information about the computer running the browser. Here is a look at the expanded view of the navigator object of a Safari browser:

Below are some common features used to construct a browser’s fingerprint.

- User-Agent

- Current language

- Do not track status.

- Supported HTML5 features

- Supported CSS rules

- Supported JavaScript features

- Plugins installed in the browser

- Screen resolution, color depth

- Time zone

- perating system

- Number of CPU cores

- GPU vendor name and rendering engine

- Number of touch points

- Different types of storage support in browsers

- HTML5 canvas hash

- The list of fonts has been installed on the computer

Apart from these techniques, bot detection tools also look for any flags that can tell them that the browser is being controlled through an automation library.

- Presence of bot-specific signatures

- Support for non-standard browser features

- Presence of common automation tools such as Selenium, Puppeteer, Playwright, etc.

- Human-generated events such as randomized mouse movement, clicks, scrolls, tab changes, etc.

All this information is combined to construct a unique client-side fingerprint that can tag one as a bot or a human.

Bypassing the Bot Detection, Bot Mitigation, and Anti-Scraping Services

Developers have found many workarounds to fake their fingerprints and conceal that they are bots. For example

- Puppeteer Extra

- Patching Selenium/PhantomJS

- Fingerprint Rotation

But bot detection companies have been improving their AI models and looking for variables, actions, events, etc. that can still give away the presence of an automation library. Most poorly built scrapers will get banned with these advanced (or “military-grade”) bot detection systems.

To bypass such military-grade systems and scrape websites without getting blocked, you need to analyze what each of their JavaScript trackers does on each website and then build a custom solution to bypass them. Each bot detection company works with a different set of variables and behavioral flags to find bots.

Wrapping Up

Websites use a variety of bot detection and mitigation tools at different levels to prevent web scraping. To overcome such challenges, you require knowledge of website and network administration. At ScrapeHero, we can provide you with web scraping products and services that prevent you from being blacklisted.

If simple data scraping is your need, then consider making use of ScrapeHero Cloud, which offers pre-built crawlers and APIs. Since it is hassle-free, affordable, fast, and reliable, you can use it for scraping even without extensive technical knowledge.

For enterprise-grade web scraping concerns, it is better to consult ScrapeHero web scraping services, which are bespoke, custom, and more advanced. We are experts in overcoming the challenges of anti-bot techniques and providing the data you need.

Frequently Asked Questions

Web scraping can be detected through various methods, such as identifying unusual traffic patterns, detecting non-standard headers, monitoring the rate of requests, identifying the use of headless browsers, etc.

Google employs techniques to detect and block bot activity across its services. Some methods include rate and pattern analysis, CAPTCHA challenges, User-Agent and header analysis, behavioral analysis, etc.

Websites detect bots using various techniques. They can also deploy specific measures to block or prevent bot traffic. These methods range from simple checks to sophisticated analyses that involve machine learning, such as IP reputation, resource loading, honeypots, etc.

To bypass bot detection in web scraping, many mechanisms, such as respecting robots.txt, limiting request rates, using headers and rotating user agents, handling CAPTCHAs, etc., are used.

We can help with your data or automation needs

Turn the Internet into meaningful, structured and usable data