When web scraping, many target websites try to block scrapers from extracting their data. How can you avoid being blocked?

One effective way to avoid such blocking is to use random IPs. In this blog, let’s learn to generate random IP addresses for web scraping in Python to maintain anonymity and prevent detection.

Why Use Random IPs?

Websites use various mechanisms to detect and block IP addresses that send many requests within a short period.

Using a single IP address makes you easy to detect and ban. By rotating IP addresses, your requests are distributed across multiple IPs, reducing the risk of being blocked.

Generating Random IPs for Web Scraping in Python

Before beginning to generate random IP addresses for web scraping, let’s understand how they are structured.

An IPv4 address consists of four numbers ranging from 0 to 255, separated by dots. For example, 192.168.1.1 is a typical IP address.

So, to generate random IP addresses, you need to generate four random numbers within the given range. You can use Python’s random module for this.
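To make this structure concrete, here is a minimal sketch using Python's standard ipaddress module. It splits an address into its four numbers and shows the equivalent 32-bit integer. The helper name describe_ipv4 is hypothetical and only used for illustration.

```python
import ipaddress

def describe_ipv4(address):
    """Return the four numbers and the 32-bit integer form of an IPv4 address."""
    ip = ipaddress.IPv4Address(address)  # raises ValueError if the address is malformed
    octets = [int(part) for part in str(ip).split(".")]
    return octets, int(ip)

octets, as_int = describe_ipv4("192.168.1.1")
print(octets)  # [192, 168, 1, 1]
print(as_int)  # 3232235777
```

Because each of the four numbers fits in 8 bits, every IPv4 address maps to exactly one integer between 0 and 2**32 - 1.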

Step-by-Step Guide to Creating a Random IP Generator

- Import the required module:

      import random

- Using the given function, you can generate a random IP by joining four random numbers, each between 0 and 255, with dots:

      def generate_random_ip():
          return '.'.join(str(random.randint(0, 255)) for _ in range(4))

      # Example usage
      random_ip = generate_random_ip()
      print(random_ip)

- To generate multiple random IP addresses for scraping, use a loop:

      def generate_multiple_random_ips(n):
          return [generate_random_ip() for _ in range(n)]

      # Example usage
      random_ips = generate_multiple_random_ips(5)
      print(random_ips)

  This function generates a list of n random IP addresses.

Advanced Random IP Address Generator in Python

Note that the method discussed generates completely random IPs, including IP addresses from reserved ranges like 192.168.x.x or 10.x.x.x.

Since reserved IP addresses are not routable on the public internet, they should be avoided for web scraping. To generate valid public IPs, filter out the reserved ranges.

Reserved IP Ranges

Some commonly reserved IP ranges are:

- 10.0.0.0 to 10.255.255.255

- 172.16.0.0 to 172.31.255.255

- 192.168.0.0 to 192.168.255.255
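These start-to-end ranges are often written in CIDR notation instead. As a rough sketch, the standard ipaddress module can convert each range into its CIDR form. The helper name range_to_cidr is hypothetical.

```python
import ipaddress

def range_to_cidr(first, last):
    """Return the CIDR network(s) that exactly cover the range first..last."""
    first_ip = ipaddress.IPv4Address(first)
    last_ip = ipaddress.IPv4Address(last)
    return [str(net) for net in ipaddress.summarize_address_range(first_ip, last_ip)]

ranges = [
    ("10.0.0.0", "10.255.255.255"),
    ("172.16.0.0", "172.31.255.255"),
    ("192.168.0.0", "192.168.255.255"),
]
for first, last in ranges:
    print(first, "to", last, "->", range_to_cidr(first, last))
```

The three ranges come out as 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16 respectively.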

Filtering Reserved IPs

You can create a function to check whether an IP is in a reserved range, and then use that function to filter your generated IPs.

- Check if an IP is reserved:

      import ipaddress

      def is_reserved_ip(ip):
          reserved_ranges = [
              ipaddress.IPv4Network('10.0.0.0/8'),
              ipaddress.IPv4Network('172.16.0.0/12'),
              ipaddress.IPv4Network('192.168.0.0/16'),
              ipaddress.IPv4Network('127.0.0.0/8'),     # Loopback address
              ipaddress.IPv4Network('169.254.0.0/16'),  # Link-local address
              ipaddress.IPv4Network('0.0.0.0/8')        # "This" network
          ]
          ip_obj = ipaddress.IPv4Address(ip)
          return any(ip_obj in network for network in reserved_ranges)

      # Example usage
      print(is_reserved_ip('192.168.1.1'))  # True
      print(is_reserved_ip('8.8.8.8'))      # False

- Generate valid public IPs:

  This function keeps generating random IPs for web scraping until it gets an IP address that is not in a reserved range.

      def generate_valid_random_ip():
          while True:
              ip = generate_random_ip()
              if not is_reserved_ip(ip):
                  return ip

      # Example usage
      valid_random_ip = generate_valid_random_ip()
      print(valid_random_ip)

- Generate multiple valid public IPs:

      def generate_multiple_valid_random_ips(n):
          ips = []
          while len(ips) < n:
              ip = generate_valid_random_ip()
              if ip not in ips:  # Ensure uniqueness
                  ips.append(ip)
          return ips

      # Example usage
      valid_random_ips = generate_multiple_valid_random_ips(5)
      print(valid_random_ips)
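The hand-maintained list of ranges does not cover every special-purpose block (multicast addresses, for example). As an alternative sketch, the ipaddress module exposes properties such as is_global, is_multicast, and is_reserved that can be combined into a stricter check. The function name is_public_ip is hypothetical, and the exact set of ranges behind these properties depends on your Python version.

```python
import ipaddress

def is_public_ip(ip):
    """Return True if ip looks like a publicly routable unicast IPv4 address."""
    addr = ipaddress.IPv4Address(ip)
    # is_global excludes private, loopback, link-local and similar blocks;
    # multicast and IETF-reserved addresses are excluded explicitly.
    return addr.is_global and not addr.is_multicast and not addr.is_reserved

print(is_public_ip("8.8.8.8"))      # True
print(is_public_ip("192.168.1.1"))  # False
print(is_public_ip("224.0.0.1"))    # False (multicast)
```

Relying on the standard library here means there is no list of networks to keep in sync by hand.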

Using Random IPs for Scraping

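Now, you can use the generated list of random IPs to make HTTP requests using the requests library with a proxy. Keep in mind that a request only succeeds if a proxy server is actually listening at the chosen address, so in practice the list should contain proxies that you operate or rent.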

- Install the requests library:

      pip install requests

- Make requests with random IPs:

      import random
      import requests

      def fetch_with_random_ip(url, proxies):
          # Pick one proxy at random and route HTTP and HTTPS traffic through it
          proxy = random.choice(proxies)
          # A timeout keeps the call from hanging on an unreachable proxy
          response = requests.get(url, proxies={"http": proxy, "https": proxy}, timeout=10)
          return response

      # Example usage
      url = 'http://example.com'
      proxies = ['http://' + ip for ip in generate_multiple_valid_random_ips(5)]
      response = fetch_with_random_ip(url, proxies)
      print(response.status_code)

Note that this function selects a random proxy from the list of generated IPs and makes a request using that proxy.
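Because a randomly chosen proxy may be unreachable, it can help to retry with a different one. The following is a minimal sketch under stated assumptions: fetch_with_rotation is a hypothetical helper, and fetch is any callable you supply that takes a URL and a proxy and raises an exception on failure.

```python
import random

def fetch_with_rotation(url, proxies, fetch, max_attempts=3):
    """Try up to max_attempts distinct proxies and return the first successful result."""
    # Sample without replacement so the same proxy is not retried
    candidates = random.sample(proxies, k=min(max_attempts, len(proxies)))
    last_error = None
    for proxy in candidates:
        try:
            return fetch(url, proxy)
        except Exception as exc:  # broad on purpose: any failure moves on to the next proxy
            last_error = exc
    raise RuntimeError(f"all {len(candidates)} proxies failed") from last_error

# Example wiring with requests (assumes requests is installed):
# import requests
# fetch = lambda url, proxy: requests.get(
#     url, proxies={"http": proxy, "https": proxy}, timeout=10)
# response = fetch_with_rotation("http://example.com", proxies, fetch)
```

Passing the fetch function in as a parameter keeps the retry logic independent of any particular HTTP library.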

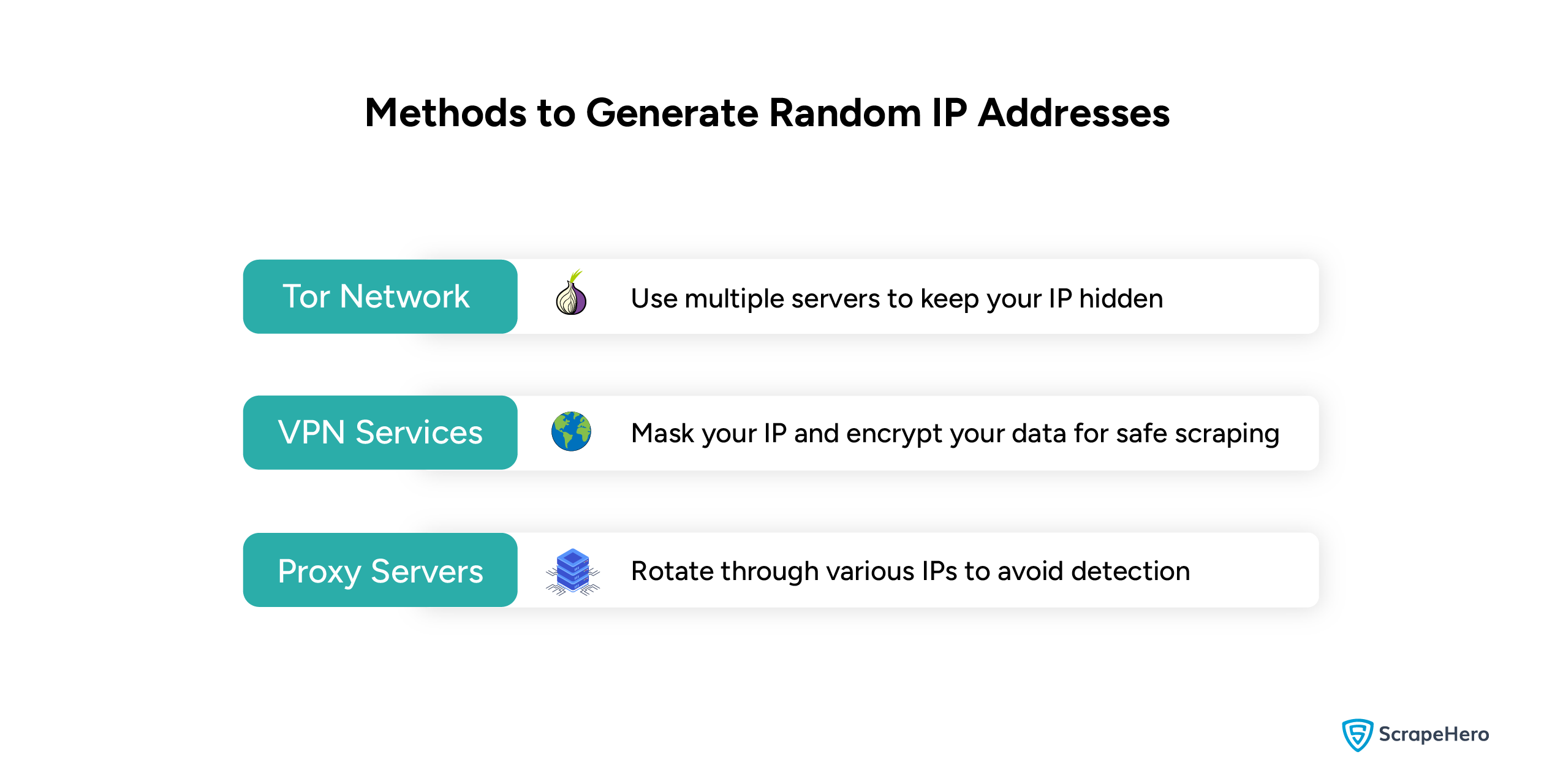

Different Methods to Generate Random IP Addresses for Web Scraping

Several methods are used to generate random IP addresses for web scraping, apart from creating random IPs programmatically. Some of these methods include:

1. Tor Network

Tor (The Onion Router) is free, open-source software that hides the user’s location and usage from surveillance and traffic analysis.

It enables anonymous communication by routing traffic through a network of volunteer-operated servers.

Setting Up Tor With Python

To use Tor with Python, install Tor and configure your requests to go through the Tor network.

- Download and install the Tor browser.

- Start the Tor service on your machine. On Linux, you can start it with:

      sudo service tor start

- Install the requests and stem libraries. Stem is a Python controller library for Tor. The socks extra adds the SOCKS proxy support that requests needs to connect through Tor.

      pip install "requests[socks]" stem

- Configure your requests to go through Tor:

      import requests
      from stem import Signal
      from stem.control import Controller

      def get_tor_session():
          # Route both HTTP and HTTPS traffic through Tor's local SOCKS proxy
          session = requests.session()
          session.proxies = {
              'http': 'socks5h://127.0.0.1:9050',
              'https': 'socks5h://127.0.0.1:9050'
          }
          return session

      def renew_tor_ip():
          # Ask Tor for a new circuit through its control port
          with Controller.from_port(port=9051) as controller:
              controller.authenticate(password='YOUR_TOR_PASSWORD')
              controller.signal(Signal.NEWNYM)

      # Example usage
      session = get_tor_session()
      renew_tor_ip()
      response = session.get('http://example.com')
      print(response.text)

Using this code, you can set up a session that routes traffic through the Tor network. You can also renew the Tor IP by sending a NEWNYM signal to the Tor controller. The code assumes that Tor's SOCKS port is 9050 and that the control port 9051 is enabled with a password in your Tor configuration.
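Tor may rate-limit NEWNYM signals, so requesting a new identity before every request is unlikely to produce a new IP each time. The sketch below shows one way to space out renewals. RenewalThrottle is a hypothetical helper, and the 10-second default interval is an assumption you should adjust for your setup.

```python
import time

class RenewalThrottle:
    """Allow an action at most once every min_interval seconds."""

    def __init__(self, min_interval=10.0, clock=time.monotonic):
        self.min_interval = min_interval
        self.clock = clock
        self._last = None  # time of the last successful renewal

    def seconds_until_ready(self):
        """Return how long to wait before the next renewal is allowed."""
        if self._last is None:
            return 0.0
        elapsed = self.clock() - self._last
        return max(0.0, self.min_interval - elapsed)

    def try_renew(self, renew):
        """Call renew() if the interval has elapsed; return True if it was called."""
        if self.seconds_until_ready() > 0:
            return False
        renew()
        self._last = self.clock()
        return True

# Example usage with the renew_tor_ip function defined earlier:
# throttle = RenewalThrottle()
# throttle.try_renew(renew_tor_ip)
```

The clock parameter exists only so the timing logic can be exercised without actually waiting.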

2. VPN (Virtual Private Network)

A VPN masks your actual IP address and encrypts your traffic. It routes the internet traffic through a server operated by the VPN provider.

Some VPN services provide APIs or command-line clients that let you change IP addresses programmatically.

Using a VPN With Python

Most VPN providers do not provide a direct Python API, but you can control the VPN client using system commands or scripts.

- Choose a VPN provider that allows API access or command-line control, such as NordVPN or ExpressVPN.

- Connect to the VPN using Python:

      import os

      def connect_to_vpn(server):
          os.system(f'nordvpn connect {server}')

      def disconnect_vpn():
          os.system('nordvpn disconnect')

      # Example usage
      connect_to_vpn('us123')
      # Your scraping code here
      disconnect_vpn()
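If the scraping code raises an exception, the disconnect step above never runs. One possible refinement, sketched here under the assumption that the nordvpn command-line client is installed, wraps the two commands in a context manager so the VPN is always disconnected. The name vpn_connection and the run parameter are hypothetical.

```python
import subprocess
from contextlib import contextmanager

@contextmanager
def vpn_connection(server, run=subprocess.run):
    """Connect to the given VPN server and always disconnect afterwards."""
    # Passing arguments as a list avoids shell interpolation of the server name
    run(["nordvpn", "connect", server], check=True)
    try:
        yield
    finally:
        # Runs even if the scraping code inside the with-block raises
        run(["nordvpn", "disconnect"], check=True)

# Example usage (requires the nordvpn client):
# with vpn_connection("us123"):
#     ...  # your scraping code here
```

With check=True, a failed connect raises an error immediately instead of letting the scraper continue on your real IP.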

3. Proxy Services

You can use proxy services to get different IP addresses that can be used to route your requests.

Depending on your requirements, you can choose the type of proxy that best suits your needs.

However, it’s advisable to opt for Residential Proxies as they are harder to detect.

Using Proxy Services with Python

- Find the right proxy provider that fits your needs.

- Configure your requests to use proxies:

      import requests

      def fetch_with_proxy(url, proxy):
          response = requests.get(url, proxies={"http": proxy, "https": proxy})
          return response

      # Example usage
      proxies = ['http://proxy1:port', 'http://proxy2:port']
      for proxy in proxies:
          response = fetch_with_proxy('http://example.com', proxy)
          print(response.status_code)

Following certain practices can also help you avoid being blocked by websites. To learn how, read our article on scraping websites without getting blocked.
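The example loop in this section uses each proxy once. For a longer run of requests, a round-robin rotation spreads them evenly across the pool. This is a minimal sketch: proxy_rotation is a hypothetical helper, and the proxy URLs are placeholders.

```python
from itertools import cycle, islice

def proxy_rotation(proxies):
    """Yield requests-style proxy mappings, cycling through proxies in order."""
    if not proxies:
        # Raised when the generator is first advanced, not when it is created
        raise ValueError("proxies must not be empty")
    for proxy in cycle(proxies):
        yield {"http": proxy, "https": proxy}

# Placeholder proxy URLs (assumptions for illustration only)
rotation = proxy_rotation(["http://proxy1:8080", "http://proxy2:8080"])
for mapping in islice(rotation, 3):
    print(mapping["http"])
# prints proxy1, proxy2, then proxy1 again
```

Each yielded mapping can be passed directly as the proxies argument of requests.get.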

ScrapeHero Web Scraping Services

Web scraping involves several challenges, such as IP bans, rate limits, honeypot traps, and slow page loading.

Bypassing all these obstacles in-house can be demanding for enterprises. Enterprises whose core business is not data need to set up an in-house team, which involves high initial setup costs, especially for servers.

The continuous need for tool maintenance and other technological updates may also be a major challenge. Your in-house team may also struggle with scalability issues.

A better alternative would be to consult a fully managed enterprise-grade web scraping service provider like ScrapeHero.

ScrapeHero’s web scraping service is specially tailored to meet various data requirements for both individuals and businesses that need custom scrapers.

Our global infrastructure makes large-scale data extraction easy and painless by handling complex problems.

That’s why our clientele, which ranges from global FMCG conglomerates to Big 4 accounting firms, still trust us to effectively and efficiently handle their data requirements.

Wrapping Up

Rotating through random IPs is a useful technique for web scraping, as it helps maintain anonymity and reduces the chance of detection.

Always make sure that you use valid public addresses and avoid reserved IP ranges.

If you are searching for a reliable data partner who can deliver unparalleled data quality, consistency, and enhancements through custom solutions, ScrapeHero is the final answer.

Frequently Asked Questions

What is an IP address generator in Python?

An IP address generator in Python is a program that creates random or specific IP addresses using code.

It is used for various purposes, such as testing or simulating network traffic.
