Google has become so popular that we rarely think of it as a search engine and more as the World Wide Web itself.

But did you know that Google was not the first search engine to make it possible to connect to the Internet? Many search engines had tried their luck before Google did and even after Google came up.

Because web crawling is at the base of the search engines we know today, tracing the history of web crawling would give us an idea of how the internet has evolved, too.

History of Web Crawling

The evolution of search engine web crawlers has significantly improved the accessibility and reliability of the Internet.

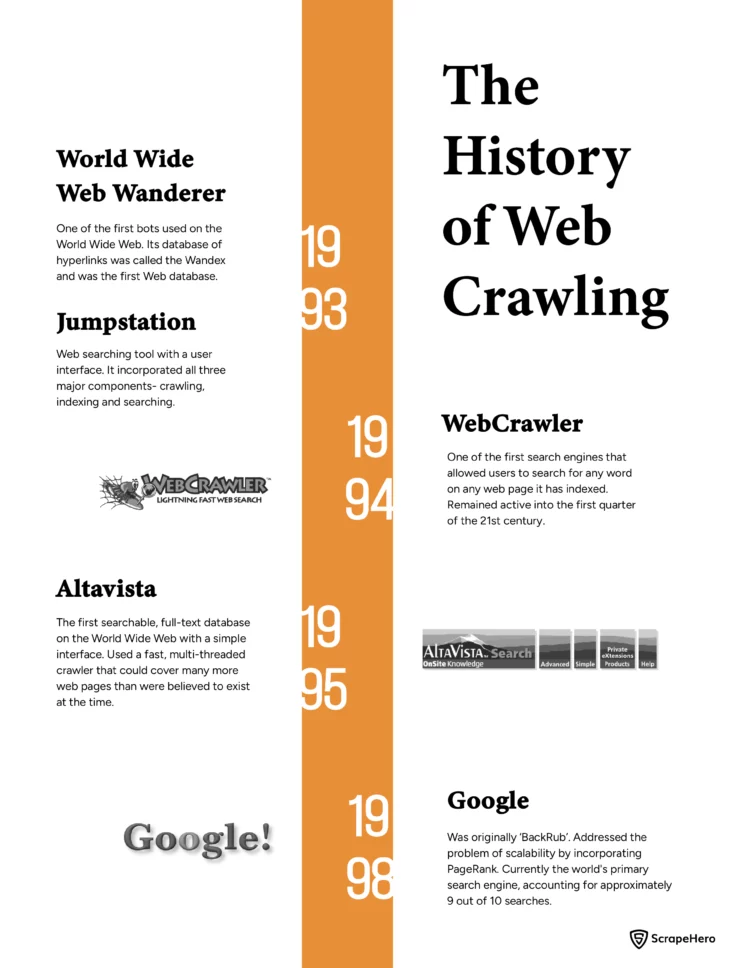

1993

World Wide Web Wanderer

- One of the first bots used on the World Wide Web.

- Developed by Mathew Gray to measure the size of the Web.

- Its database of hyperlinks was called the Wandex and was the first Web database.

- It has now passed into history.

Jumpstation

- Web searching tool with a user interface.

- Developed by Jonathon Fletcher.

- Incorporated all three major components- crawling, indexing and searching.

1994

WebCrawler

- One of the first search engines that allowed users to search for any word on any web page it has indexed.

- Developed by Brian Pinkerton.

- Had been the second most popular search engine at a point.

- Remained active into the first quarter of the 21st century.

1995

AltaVista

- The first searchable, full-text database on the World Wide Web with a simple interface.

- Used a fast, multi-threaded crawler that could cover many more web pages than were believed to exist at the time.

- It lost ground to Google and was purchased by Yahoo in 2003.

- It was shut down by Yahoo in 2013.

1998

- Google was originally ‘BackRub’ a research project by Larry Page and Sergey Brin started in 1996.

- Addressed the problem of scalability by incorporating PageRank.

- Today, Google is the world’s primary search engine, accounting for approximately 9 out of 10 searches.

Don’t want to code? ScrapeHero Cloud is exactly what you need.

With ScrapeHero Cloud, you can download data in just two clicks!

Impact of Web Crawlers on the Internet

No search engine has completely indexed the whole of the internet. In addition to this, thousands of new pages are being added to the web every single day.

But the internet is not a disorganized collection of websites, thanks to web crawlers. They have transformed the internet into a searchable and interconnected resource.

Here’s a closer look at its impact:

- Indexing the Web:

Search engines use web crawlers to explore the web, following links and analyzing content. They then store the extracted information in a giant index classified into categories.

This indexing process organizes the web, enabling users to efficiently retrieve relevant information. - Improved Search Efficiency:

Crawlers constantly update search engine indexes, ensuring results are relevant and up-to-date. This lets users find the information they need quickly and efficiently, rather than surfing through endless irrelevant links. - Website Visibility:

Had web crawling not been there, many websites would not be visible to search engines. A website is indexed and made discoverable through search queries because a web crawler has discovered it.

When a website frequently updates its content, crawlers index these changes, signaling freshness to search engines. This can positively affect a website’s ranking in search results. - Search Engine Development

Web crawling is fundamental to search engine development in a number of ways.- It is the data collection method that feeds the entire search engine system, allowing it to function and improve. Without crawlers, the search engine would have no information to work with and wouldn’t be able to function.

- Crawlers don’t just collect URLs; they also analyze the content of web pages. Search engines gain insights into what each webpage is about and the topics it covers during this process.

This understanding is crucial for ranking web pages and delivering relevant search results. - The effectiveness of a search engine lies in the quality of its ranking algorithms. Crawlers provide a constant flow of data that search engine developers can use to test and refine ranking algorithms.

- Crawlers can discover new information sources that traditional methods might miss. This can include new web pages, niche websites, or even entirely new domains.

Where are We Now in the Evolution of Web Crawling?

The first crawlers, like World Wide Web Wanderer, explored the web, mapping its structure and size. These basic programs laid the groundwork for future advancements.

Later, crawlers became integrated with search engines like WebCrawler and AltaVista. Users could now search for information across the indexed web, not just navigate links.

This marked a shift towards user-focused information retrieval.

Then came search engines like Google that addressed limitations by developing crawlers that could handle the ever-growing web. Google’s PageRank algorithm revolutionized search results by prioritizing relevant and authoritative content.

Search engines are now switching to AI-powered web crawlers that utilize machine learning to become more intelligent over time.

These web crawlers navigate the web more like humans, understanding the context and user intent within the content. This allows them to prioritize results that match not just keywords but also address the user’s information needs.

AI enables crawlers to learn and adapt over time. They can analyze past crawling patterns and user behavior to optimize their search for the most relevant information.

This shift towards AI-powered web crawlers is a significant leap in search engine technology and the accessibility of the internet.

The Future of Web Crawling and the Internet

Web crawling is likely to evolve and improve alongside AI and machine learning advancements.

Deeper Content Understanding

Crawlers are likely to move beyond simply identifying text to understand the meaning and intent of the content. They would be able to understand the sentiment and the target audience of a web page in addition to identifying the main topic of it.

This would allow search engines to deliver results that are not just relevant but also trustworthy and aligned with the user’s needs.

Semantic Crawling

Crawlers might utilize semantic understanding to navigate websites more intelligently in the future.

Imagine you search for “best hiking trails for beginners near Joshua Tree National Park.”

Semantic crawlers with a deeper understanding of meaning and relationships could analyze the content in a nuanced way.

They might identify webpages that discuss easy hikes around Joshua Tree, even if they don’t use the exact term “beginner.” They could consider factors like trail difficulty, distance, elevation gain, and even user reviews mentioning suitability for beginners.

In addition to this, they might crawl and analyze content from local hiking clubs or park authority websites that provide official recommendations for beginner trails.

Based on this semantic understanding, the crawler would prioritize web pages that offer the user the most relevant and valuable information.

Personalized Crawling

Web crawlers that customize their web exploration based on user search history and preferences is another possibility. This would mean a more personalized search experience, where crawlers prioritize indexing content relevant to a user’s specific interests.

Focus on User Experience

Crawlers might be designed in the future to not only crawl content but also evaluate its user-friendliness. This could involve analyzing factors like website loading speed, mobile responsiveness, and the overall design layout.

Search engines would then prioritize user-friendly websites in their rankings, leading to a more enjoyable search experience.

Where do you think web crawling, search engines, and the internet are headed?