According to a study by Chitika Insights, websites ranked on the first page of Google search results capture over 92% of all search traffic, boosting sales and leads!

But how do search engines like Google list results from all over the web? The answer lies in search engine web crawling.

Read on to learn what search engine web crawling is, how search engines use it to access and rank information, and how it impacts search engine optimization (SEO).

What is Web Crawling?

Web crawling is an automated process that search engines use to systematically browse the internet, collect web pages for indexing, and monitor website health.

For this purpose, search engines use software called a web crawler (also known as a web spider or bot) that moves across the web, finds and gathers URLs, and fetches pages so they can be indexed.

The crawlers follow links from one page to another and gather information as they go, which allows search engines to find new content and update their databases.

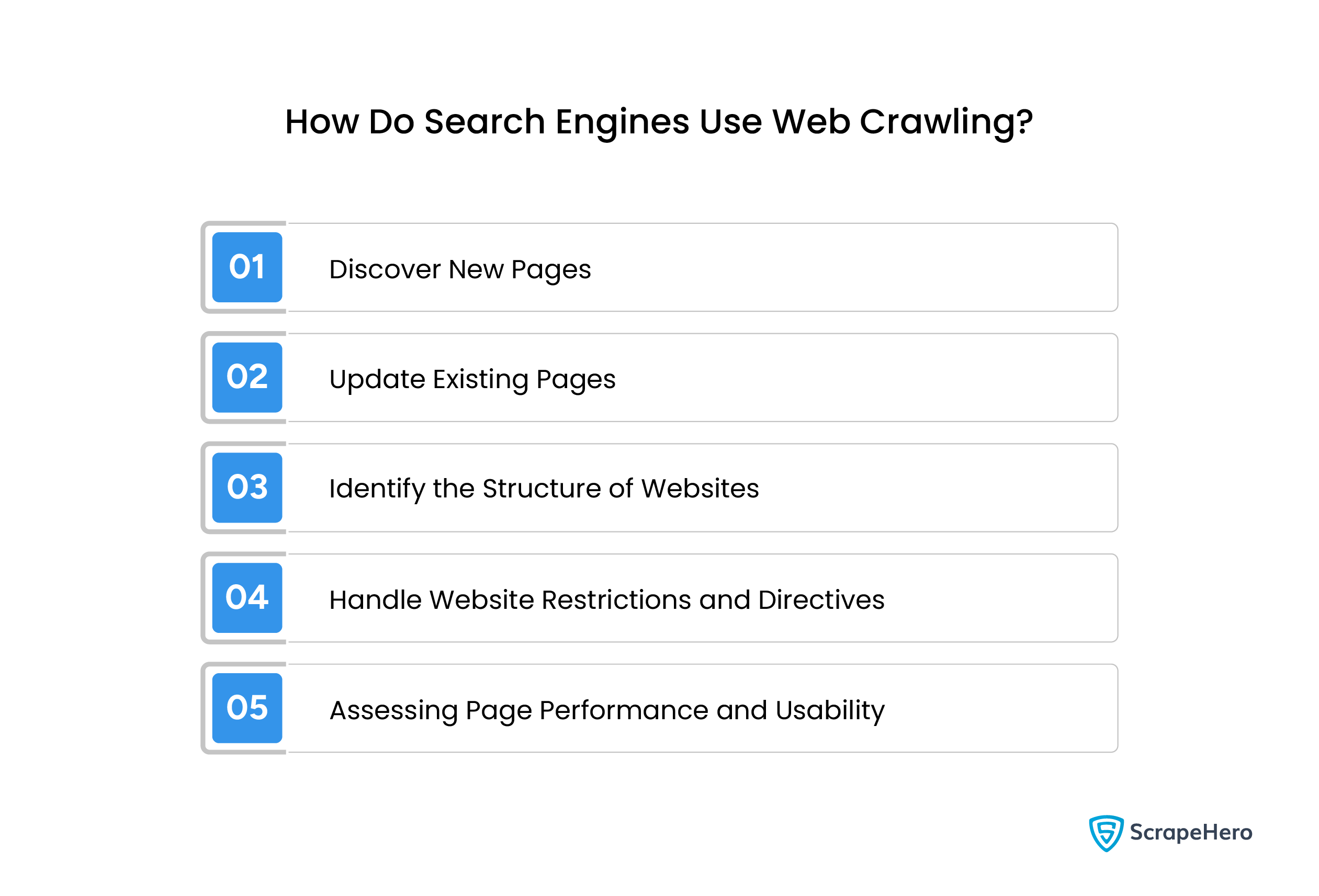

How Do Search Engines Use Web Crawling?

Search engines rely on web crawlers to explore the web and gather information from web pages. Search engines use web crawling to:

- Discover New Pages

- Update Existing Pages

- Identify the Structure of Websites

- Handle Website Restrictions and Directives

- Assess Page Performance and Usability

1. Discover New Pages

Web crawlers start with a list of known URLs, called seed URLs, and follow the internal and external links on those pages to discover new content.

When a new website launches and other sites link to it, the crawler follows those links to the new site, crawls its pages, and adds them to the search engine's database.

This way, web crawling helps search engines expand their index with the latest content from across the web.
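The discovery process above is essentially a breadth-first traversal of the link graph. The Python sketch below shows the idea under simplifying assumptions: `fetch` is a hypothetical function you supply that returns a page's HTML (or None), and the example "web" is an in-memory dictionary rather than live sites. Production crawlers also respect robots.txt, throttle requests, render JavaScript, and run at vastly larger scale, so treat this as an illustration, not a description of any search engine's implementation.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls: list[str],
          fetch: Callable[[str], Optional[str]],
          max_pages: int = 100) -> list[str]:
    """Breadth-first crawl starting from the seed URLs.

    `fetch` (hypothetical, supplied by the caller) returns a page's HTML,
    or None if the page can't be retrieved. Returns crawled URLs in order.
    """
    frontier = deque(seed_urls)  # URLs waiting to be crawled
    seen = set(seed_urls)        # never queue the same URL twice
    crawled: list[str] = []

    while frontier and len(crawled) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        crawled.append(url)

        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments, so /team and
            # /team#people are treated as the same page.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute.startswith(("http://", "https://")) and absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return crawled


# A tiny in-memory "web": one site that links to a newly launched site.
site = {
    "https://example.com/": '<a href="/about">About</a> <a href="https://other.example/">Partner</a>',
    "https://example.com/about": '<a href="/">Home</a> <a href="/team#people">Team</a>',
    "https://example.com/team": "<p>No links here</p>",
    "https://other.example/": "<p>A newly launched site</p>",
}
print(crawl(["https://example.com/"], site.get))
# ['https://example.com/', 'https://example.com/about', 'https://other.example/', 'https://example.com/team']
```

Using a first-in, first-out queue means pages closest to the seed URLs are crawled first; swapping in a priority queue is one way a crawler could favor more important URLs instead.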

2. Update Existing Pages

Websites are dynamic: they are regularly updated with new information, posts, products, or services.

So crawlers must revisit previously crawled websites often to keep the search engine's index accurate and up to date.

When a website changes its page structure or updates content such as product prices or descriptions, search engine spiders detect these changes on their next visit and send the new data back to the index, so search results reflect the most recent information.
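Search engines don't publish exactly how they detect changes, but one simple approach is to store a fingerprint (hash) of each page's content and compare it on the next visit. The sketch below assumes a plain dictionary stands in for the index and uses SHA-256 from Python's standard library; the URL and prices are made up for illustration.

```python
import hashlib


def content_fingerprint(html: str) -> str:
    """Return a SHA-256 hex digest of the page content."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()


def recrawl(url: str, html: str, fingerprints: dict[str, str]) -> bool:
    """Record the page's fingerprint and report whether it changed.

    Returns True if the page is new or its content differs from the last
    visit (so its index entry should be refreshed), False otherwise.
    """
    new_hash = content_fingerprint(html)
    changed = fingerprints.get(url) != new_hash
    if changed:
        fingerprints[url] = new_hash
    return changed


fingerprints: dict[str, str] = {}
url = "https://example.com/product/42"
print(recrawl(url, "<p>Price: $19.99</p>", fingerprints))  # True  (first visit)
print(recrawl(url, "<p>Price: $19.99</p>", fingerprints))  # False (unchanged)
print(recrawl(url, "<p>Price: $17.99</p>", fingerprints))  # True  (price changed)
```

Any byte-level change, such as a timestamp in the footer, counts as a change here. Crawlers can also use HTTP conditional requests (the `ETag`/`If-None-Match` and `Last-Modified`/`If-Modified-Since` headers) to avoid re-downloading pages that haven't changed at all.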

3. Identify the Structure of Websites

Crawlers don't merely gather data; they also analyze the structure of each website to understand how its content is organized.

Crawlers read a website’s internal link structure, such as navigation menus and category hierarchies, and map out relationships between different pages.

This helps search engines understand the site hierarchy and determine which pages are most important, making those pages easier to categorize, index, and rank.
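One common way to quantify site structure is click depth: the minimum number of clicks needed to reach a page from the homepage. The sketch below computes it with a breadth-first search over a hypothetical internal link map. How search engines actually weigh site structure is not public, so treat click depth as an SEO heuristic rather than a ranking formula.

```python
from collections import deque


def click_depth(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search over an internal link graph.

    `links` maps each page to the pages it links to. Returns the minimum
    number of clicks needed to reach each page from the homepage. Pages
    missing from the result are unreachable through internal links.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit is the shortest path in BFS
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical site: nothing links to /old-landing-page.
site_links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/web-crawling"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/services/web-crawling"],
    "/old-landing-page": ["/"],
}
print(click_depth(site_links, "/"))
# {'/': 0, '/services': 1, '/blog': 1, '/services/web-crawling': 2, '/blog/post-1': 2}
```

Pages that don't appear in the result, like /old-landing-page here, can't be reached through internal links at all, which makes them hard for crawlers to discover.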

4. Handle Website Restrictions and Directives

Using robots.txt files and robots meta tags, website owners control how search engine crawlers interact with their site.

A robots.txt file tells crawlers which URLs they may crawl, while a robots meta tag on a page (for example, one containing "noindex") tells them whether that page may be indexed. Well-behaved crawlers respect these directives and skip areas marked as off-limits.

This helps keep irrelevant content out of the index and makes search engine web crawling more efficient.
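A well-behaved crawler checks robots.txt before fetching a URL and the robots meta tag after fetching it. Python's standard library includes `urllib.robotparser` for the first check; for the second, the sketch below uses a small `html.parser` subclass. The robots.txt rules, the user agent name `ExampleBot`, and the URLs are hypothetical, and the meta-tag check only reads the generic `robots` name, not crawler-specific names such as `googlebot`.

```python
import urllib.robotparser
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Before fetching: does robots.txt allow this user agent to crawl the URL?"""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def may_index(html: str) -> bool:
    """After fetching: does the page's robots meta tag allow indexing?"""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" not in parser.directives


robots_txt = """
User-agent: *
Disallow: /admin/
Disallow: /login
"""
print(may_crawl(robots_txt, "ExampleBot", "https://example.com/admin/settings"))  # False
print(may_crawl(robots_txt, "ExampleBot", "https://example.com/blog/"))           # True
print(may_index('<head><meta name="robots" content="noindex, follow"></head>'))   # False
```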

5. Assess Page Performance and Usability

Search engines evaluate performance metrics of web pages, such as page load speed, mobile-friendliness, and overall usability, and factor them into how pages rank in search results.

Web crawlers analyze how quickly a web page loads, whether it is optimized for mobile devices, and whether it is responsive across different screen sizes.

Fast-loading pages tend to rank higher because they offer a better user experience. If the crawler detects that a website's mobile version has navigation issues, that can lower the site's ranking in mobile searches.

How Does Crawling Impact SEO?

Web crawling affects SEO because it determines whether, and how quickly, search engines index your website, and a page must be indexed before it can rank. Here are some factors that influence crawlability and SEO:

- Internal Linking

- Robots.txt

- XML Sitemaps

- Crawl Budget

- Page Load Time

- Mobile-Friendliness

1. Internal Linking

Internal links help search engines navigate website content efficiently, creating pathways between pages on the website.

If your website has well-structured internal linking, it enhances user experience and makes it easier for crawlers to follow links and discover new pages.

When pages are well linked to each other, crawlers can reach and revisit them quickly. A page with many internal links pointing to it is likely to be crawled more frequently, which improves its chances of appearing in search results.

Best Practice: Use clear, relevant anchor text for internal links, and make sure important pages, such as service pages, are linked from high-traffic areas like the homepage.
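To see which pages receive the most internal links, and which receive none, you can count inbound links across a site's link map. The sketch below assumes you already have that map (for example, from a crawl) and a list of all known pages (for example, from your sitemap); both are hypothetical here, and inbound link count is only a rough proxy for how crawlers prioritize pages.

```python
from collections import Counter


def inbound_link_counts(links: dict[str, list[str]], all_pages: list[str]) -> dict[str, int]:
    """Count how many distinct pages link to each known page."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):   # a page linking twice counts once
            if target != source:      # ignore self-links
                counts[target] += 1
    return {page: counts[page] for page in all_pages}


def orphan_pages(links: dict[str, list[str]], all_pages: list[str], home: str = "/") -> list[str]:
    """Pages (other than the homepage) with no internal links pointing to them."""
    counts = inbound_link_counts(links, all_pages)
    return [page for page, n in counts.items() if n == 0 and page != home]


site_links = {
    "/": ["/services", "/blog", "/services"],
    "/blog": ["/services", "/blog/post-1"],
    "/blog/post-1": ["/"],
}
pages = ["/", "/services", "/blog", "/blog/post-1", "/services/pricing"]
print(inbound_link_counts(site_links, pages))
# {'/': 1, '/services': 2, '/blog': 1, '/blog/post-1': 1, '/services/pricing': 0}
print(orphan_pages(site_links, pages))  # ['/services/pricing']
```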

2. Robots.txt

The robots.txt file tells crawlers which pages or directories they are allowed to crawl.

Although this file can keep crawlers away from unimportant pages, such as admin pages or login forms, a misconfigured file can also block important content from being crawled.

If the file accidentally blocks key pages, crawlers can't read their content, so those pages won't be properly indexed and may not appear in search results.

Best Practice: Regularly audit your robots.txt file to make sure it blocks only unnecessary or duplicate pages, never important ones.
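A quick way to audit robots.txt is to test your most important URLs against it. The sketch below uses Python's `urllib.robotparser`; the robots.txt content (including the mistaken `Disallow: /services` line) and the URL list are hypothetical. One caveat: Python's parser applies the first matching rule in file order, while Google applies the most specific (longest) matching rule, so results can differ when `Allow` and `Disallow` rules overlap.

```python
import urllib.robotparser


def blocked_pages(robots_txt: str, important_urls: list[str],
                  user_agent: str = "Googlebot") -> list[str]:
    """Return the important URLs that robots.txt blocks for `user_agent`."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in important_urls if not parser.can_fetch(user_agent, url)]


robots_txt = """
User-agent: *
Disallow: /admin/
Disallow: /services  # mistake: this also blocks every service page
"""
important = [
    "https://example.com/",
    "https://example.com/services/web-crawling",
    "https://example.com/blog/what-is-web-crawling",
]
print(blocked_pages(robots_txt, important))
# ['https://example.com/services/web-crawling']
```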

When web crawling is not done ethically, for example by sending too many requests too quickly, it can degrade a website's performance. That is why many site owners implement anti-scraping mechanisms to restrict open data access.
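Ethical crawling starts with not overloading the sites you visit. The sketch below enforces a minimum delay between requests, using the `Crawl-delay` value from robots.txt when one is set (read with `urllib.robotparser`'s `crawl_delay()` method) and a fallback otherwise. The class name and the one-second default are assumptions. Note that `Crawl-delay` is a non-standard directive: some crawlers honor it, Google ignores it, and Python's parser only accepts whole-number values.

```python
import time
import urllib.robotparser
from typing import Callable, Optional


class PoliteThrottle:
    """Enforces a minimum delay between consecutive requests to one site.

    Uses the robots.txt Crawl-delay for our user agent when present,
    otherwise `default_delay` seconds (an assumed, conservative default).
    """

    def __init__(self, robots_txt: str, user_agent: str,
                 default_delay: float = 1.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        parser = urllib.robotparser.RobotFileParser()
        parser.parse(robots_txt.splitlines())
        delay = parser.crawl_delay(user_agent)  # None if not specified
        self.delay = float(delay) if delay is not None else default_delay
        self.clock = clock
        self.sleep = sleep
        self.last_request: Optional[float] = None

    def wait(self) -> None:
        """Block until enough time has passed since the previous request."""
        now = self.clock()
        if self.last_request is not None:
            remaining = self.delay - (now - self.last_request)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self.last_request = now


throttle = PoliteThrottle("User-agent: *\nCrawl-delay: 5\n", "ExampleBot")
print(throttle.delay)  # 5.0
```

Call `throttle.wait()` before each request to the same host. The injectable `clock` and `sleep` parameters make the timing logic easy to test without actually waiting.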

3. XML Sitemaps

XML sitemaps act as roadmaps for web crawlers. They outline the structure of your website and list important pages, helping the crawlers find and index content more effectively.

An XML sitemap helps crawlers discover even pages that are hard to reach through the website's link structure.

It can also tell crawlers when each page was last updated (through the lastmod field), helping them prioritize crawling the most recently changed content.
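A sitemap is a small XML file that follows the sitemaps.org protocol: a `urlset` element containing one `url` entry per page, each with a `loc` and an optional `lastmod`. The sketch below generates one with Python's `xml.etree.ElementTree`; the URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last-modified date) pairs.

    Dates use the W3C Datetime format, e.g. "2024-05-01".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # characters like & are escaped
        ET.SubElement(url, "lastmod").text = lastmod  # when the page last changed
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/services/web-crawling", "2024-04-18"),
]))
```

The protocol caps a single sitemap at 50,000 URLs and 50 MB uncompressed, so large sites split their URLs across several sitemaps listed in a sitemap index file.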

Best Practice: Update your sitemap regularly to include new or updated pages, and submit it through Google Search Console so Google knows where to find it and can report any problems reading it.

4. Crawl Budget

The crawl budget is the number of pages a crawler can and will crawl on your site within a specific amount of time.

Crawl budget is influenced by the website's authority, the number of internal and external links pointing to it, and how quickly its pages load.

A search engine crawls a website with a high crawl budget more often, so new and updated pages are indexed sooner, which can improve the site's visibility in search results.

Best Practice: To ensure efficient crawling, prioritize high-value content, increase site speed, and limit unnecessary pages.
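Because the crawl budget is limited, every low-value URL a crawler fetches is one fewer slot for an important page. The sketch below models this as picking the highest-scoring URLs that fit the budget. The value scores are hypothetical; search engines don't publish how they prioritize URLs, so this only illustrates why trimming low-value pages helps.

```python
import heapq


def plan_crawl(scores: dict[str, float], budget: int) -> list[str]:
    """Return the `budget` highest-scoring URLs, most valuable first.

    `scores` maps each URL to a hypothetical value score (higher = more
    important). heapq.nlargest avoids sorting the whole candidate list.
    """
    top = heapq.nlargest(budget, scores.items(), key=lambda item: item[1])
    return [url for url, _score in top]


# Hypothetical scores: paginated tag pages and internal search results add little value.
scores = {
    "https://example.com/": 9.5,
    "https://example.com/services/web-crawling": 8.0,
    "https://example.com/blog/what-is-web-crawling": 6.5,
    "https://example.com/tag/misc?page=37": 0.5,
    "https://example.com/search?q=test": 0.2,
}
print(plan_crawl(scores, budget=3))
# ['https://example.com/', 'https://example.com/services/web-crawling', 'https://example.com/blog/what-is-web-crawling']
```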

5. Page Load Time

Page load time is the time it takes for a web page to load fully, and it impacts SEO and user experience.

Page speed is a ranking factor for search engines. Slow pages also cost crawlers more time per request, so crawlers may fetch fewer of them or stop early, leaving some pages unindexed.

For example, in the same amount of time, a crawler can fetch fewer pages from a blog whose pages take 8 seconds to load than from one whose pages load in under 2 seconds.
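The arithmetic behind this example is simple if you assume a single crawler fetching pages one after another within a fixed time window (60 seconds here, an arbitrary choice). The sketch also shows how you might time a fetch with `time.perf_counter`; `fetch` is a hypothetical function you supply, and it measures only download time, not full in-browser rendering as tools like PageSpeed Insights do.

```python
import math
import time
from typing import Callable


def timed_fetch(fetch: Callable[[str], object], url: str) -> float:
    """Return the seconds `fetch(url)` took (download time only, no rendering)."""
    start = time.perf_counter()
    fetch(url)
    return time.perf_counter() - start


def pages_in_window(window_seconds: float, seconds_per_page: float) -> int:
    """Pages one crawler can fetch sequentially within a fixed time window."""
    return math.floor(window_seconds / seconds_per_page)


print(pages_in_window(60, 8))  # 7: the slow blog (8 s per page)
print(pages_in_window(60, 2))  # 30: the fast blog (2 s per page, more if faster)
```

Real crawlers fetch many pages in parallel, but the ratio still holds: pages that load four times faster let a crawler cover roughly four times as many URLs in the same time.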

Best Practice: To improve page load speed, optimize your images, use caching, and reduce server response times. Tools like Google PageSpeed Insights can show you where to start.

6. Mobile-Friendliness

In the crawling and ranking processes, search engines usually prioritize mobile-friendly websites.

Your website should be optimized for mobile; otherwise, search engines may have trouble rendering it properly. This negatively impacts crawlability and reduces your ranking potential.

A website with a mobile-responsive design can rank better because it is easier for both users and search engines to navigate.

Best Practice: Implement a responsive website design to ensure that your site functions seamlessly on all devices, enhancing both crawlability and SEO performance.
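A basic signal of responsive design is a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`. The sketch below checks for it with Python's `html.parser`. This is only a heuristic: the tag alone doesn't make a layout responsive (that also takes flexible CSS), and the function and class names are our own.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportChecker(HTMLParser):
    """Records the content of a <meta name="viewport"> tag, if present."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "viewport":
            self.viewport = attr.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the viewport tag sets width=device-width (a basic responsive signal)."""
    checker = ViewportChecker()
    checker.feed(html)
    if checker.viewport is None:
        return False
    return "width=device-width" in checker.viewport.replace(" ", "").lower()


print(has_responsive_viewport(
    '<meta name="viewport" content="width=device-width, initial-scale=1">'))  # True
print(has_responsive_viewport("<p>Fixed-width desktop layout</p>"))          # False
```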

What Happens After Crawling: Indexing and Ranking

When a crawler visits a page, the search engine processes the page's contents and stores them in its index.

When you type a query, the search engine looks up this index to find the most relevant pages, weighing factors such as keyword usage and content relevance.
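The core data structure behind a search index is an inverted index, which maps each word to the pages that contain it, so a query can be answered without rescanning every page. The minimal sketch below builds one in Python and returns the pages containing every query word. The sample pages are invented, and real search engines also store word positions and frequencies and rank the matches, which this example does not do.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map each word to the set of URLs whose text contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the URLs that contain every word of the query (no ranking)."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))  # copy, so the index isn't modified
    for word in words[1:]:
        results &= index.get(word, set())
    return results


pages = {
    "https://example.com/crawling": "Web crawling helps search engines discover pages.",
    "https://example.com/scraping": "Web scraping extracts data from pages.",
}
idx = build_index(pages)
print(sorted(search(idx, "web pages")))  # both URLs
print(search(idx, "crawling pages"))     # {'https://example.com/crawling'}
```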

Crawling and indexing are just the beginning, though. Search engines use sophisticated algorithms to rank the indexed pages.

A page is ranked based on many factors, such as the quality of its content, backlinks, page authority, and user engagement.

The more efficiently a crawler can crawl a website, the more likely its pages are to be indexed correctly and appear in search results.

However, web crawling itself faces several challenges, and various solutions have been developed to overcome them.

Why Should You Consider ScrapeHero's Web Crawling Service?

Search engines gather data through web crawling and build an index of web pages, which allows them to return faster and more accurate search results.

You can also make use of web crawling for your own data extraction needs by combining it with scraping techniques.

Web crawling helps you discover URLs and download HTML files, and web scraping lets you extract data from those files.
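To illustrate the scraping half of that pipeline, the sketch below pulls a product name and price out of a downloaded HTML page with Python's `html.parser`. The class names `product-name` and `price` and the sample HTML are hypothetical; every real site needs its own selectors, and this is not a description of ScrapeHero's tooling.

```python
from html.parser import HTMLParser
from typing import Optional


class ProductScraper(HTMLParser):
    """Extracts text from elements with class "product-name" or "price".

    These class names are hypothetical; adapt them to the target site's markup.
    """

    FIELDS = {"product-name": "name", "price": "price"}

    def __init__(self) -> None:
        super().__init__()
        self.product: dict[str, str] = {}
        self._current: Optional[str] = None  # field whose text comes next

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for cls in classes:
            if cls in self.FIELDS:
                self._current = self.FIELDS[cls]

    def handle_data(self, data):
        text = data.strip()
        if self._current and text:
            self.product[self._current] = text
            self._current = None


# HTML as a crawler might have downloaded it.
html = '<h1 class="product-name">Blue Mug</h1><span class="price">$12.50</span>'
scraper = ProductScraper()
scraper.feed(html)
print(scraper.product)  # {'name': 'Blue Mug', 'price': '$12.50'}
```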

As a comprehensive web scraping service provider, ScrapeHero offers both robust web crawling and scraping services.

With us, you can expect fast data delivery and exceptional accuracy, giving your business the data it needs.

Frequently Asked Questions

Search engines use web crawlers to browse the web, discover new content, and update the index of web pages.

Crawlers visit the known URLs first and then follow links on these pages. From each page, they collect data and send it back to the search engine for indexing and ranking.

A robots.txt file specifies which pages web crawlers can or cannot crawl on a website. This allows website owners to control which parts of their site crawlers access.

To improve your website’s crawlability, ensure it has a clear internal linking structure, optimize page load times, submit an XML sitemap, and use a properly configured robots.txt file.

The legality of web crawling depends on factors such as its purpose, whether it respects website rules, and data privacy laws.

It is generally legal if you follow the website's terms of service and restrictions, but bypassing those restrictions or crawling without authorization can lead to legal issues.