From tracking competitor prices to analyzing customer sentiment, web scraping large datasets is an essential practice that provides raw data for analysis.

But web scraping large datasets comes with its own set of challenges. IP blocking, for instance, is one of the most common problems scrapers run into at scale.

By following some best practices for web scraping, you can ensure that you gather datasets efficiently and ethically. Read on to learn more.

What Are Large Datasets?

Large datasets consist of vast amounts of structured or unstructured data that traditional data processing systems find difficult to manage.

In most cases, they require specialized tools and infrastructure as they can include millions of web pages, customer reviews, or other content.

A real-life example of a large dataset is a comprehensive list of McDonald’s restaurant locations in the USA, which includes geocoded addresses, phone numbers, and opening hours.

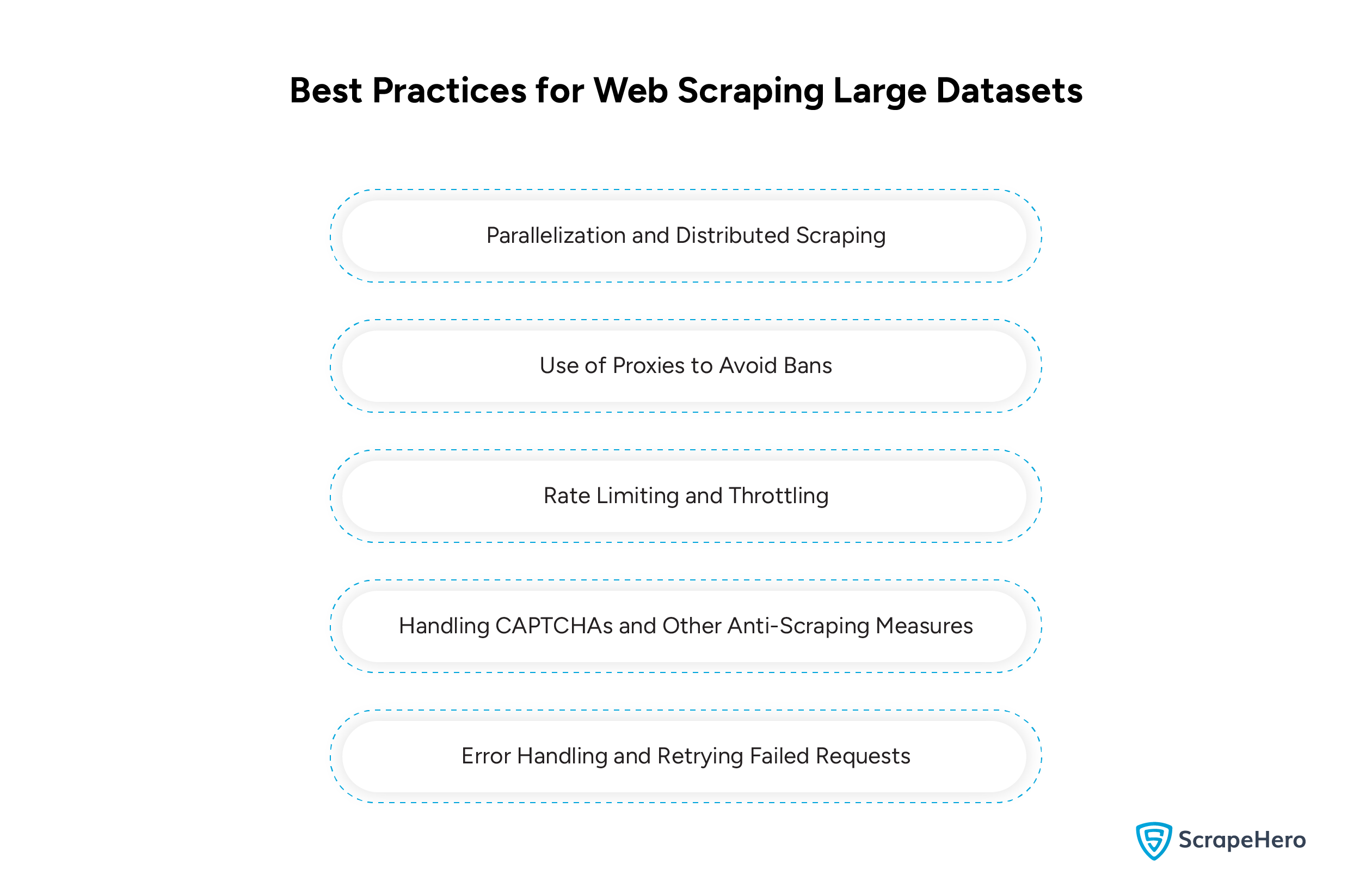

Best Practices for Web Scraping Large Datasets

Web scraping large datasets often presents challenges such as IP blocking, CAPTCHAs, slow scraping speeds, and unstructured data formats.

To ensure efficiency, compliance, and data quality, follow these best practices for large-scale web scraping:

- Parallelization and Distributed Scraping

- Use of Proxies to Avoid Bans

- Rate Limiting and Throttling

- Handling CAPTCHAs and Other Anti-Scraping Measures

- Error Handling and Retrying Failed Requests

1. Parallelization and Distributed Scraping

Parallelization and distributed scraping break a large scraping task into smaller tasks that run concurrently. This significantly increases the speed of data extraction.

Use a scraping framework that supports asynchronous requests. Asynchronous scraping sends multiple requests concurrently instead of waiting for each response before starting the next one, which reduces idle time.
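As a rough illustration of concurrent requests, the sketch below uses Python's standard `asyncio` module with a semaphore that caps the number of requests in flight. The names `fetch_sync` and `scrape_all` and the `max_concurrency` default are assumptions made for this example. It moves a blocking standard-library call into worker threads, whereas a production scraper would more likely use a scraping framework or an async HTTP client.

```python
import asyncio
import urllib.request


def fetch_sync(url, timeout=10.0):
    """Blocking download of one page using only the standard library."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


async def scrape_all(urls, fetch=fetch_sync, max_concurrency=5):
    """Fetch all URLs concurrently, with at most max_concurrency in flight.

    Returns a list of (url, result) pairs in the same order as urls.
    result is the page body, or the exception raised for that URL.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(url):
        async with semaphore:
            try:
                # asyncio.to_thread (Python 3.9+) runs the blocking call
                # in a thread so other requests can proceed meanwhile.
                return url, await asyncio.to_thread(fetch, url)
            except Exception as exc:
                # One failed page should not cancel the whole batch.
                return url, exc

    return await asyncio.gather(*(worker(u) for u in urls))
```

A call such as `asyncio.run(scrape_all(list_of_urls))` would then download the whole batch. Keeping `max_concurrency` small is a deliberate choice here, since concurrency without a cap can overload the target site.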

You can also distribute tasks across multiple machines or servers to avoid overloading a single machine. For example, serverless platforms such as Google Cloud Functions and AWS Lambda can run scraping tasks in parallel.

To spread requests evenly across those servers, implement load balancing. It reduces the risk of downtime on your side and of throttling by the website being scraped.
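One simple way to split work across machines is to assign every URL to a worker by hashing it. The sketch below shows this hash-based partitioning, which is a lightweight stand-in for a real load balancer or task queue rather than a replacement for one. The function names `assign_worker` and `partition` are hypothetical.

```python
import hashlib


def assign_worker(url, num_workers):
    """Map a URL to a worker index in a stable, repeatable way."""
    digest = hashlib.sha256(url.encode("utf-8")).digest()
    # Use the first 8 bytes of the hash as an integer, then wrap it
    # into the range [0, num_workers).
    return int.from_bytes(digest[:8], "big") % num_workers


def partition(urls, num_workers):
    """Split urls into num_workers lists, one per worker."""
    shards = [[] for _ in range(num_workers)]
    for url in urls:
        shards[assign_worker(url, num_workers)].append(url)
    return shards
```

Because the assignment depends only on the URL and the worker count, every machine can compute its own shard without coordination. The shards are roughly, not exactly, equal in size.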

2. Use of Proxies to Avoid Bans

When a single IP address makes too many requests within a short period, websites tend to block it. Rotating proxies helps you avoid such bans.

Use a proxy pool, a collection of IP addresses that makes it difficult for websites to block the scraper. You can also depend on third-party proxy management solutions to automatically rotate IPs based on your needs.

Residential proxies tend to be more effective, especially for large-scale scraping, because their IP addresses belong to real consumer connections and the traffic resembles the browsing activity of a legitimate user.

To scrape websites that serve region-specific content, you can use geo-targeted proxies, which assign IPs from a specific geographic area.
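The proxy rotation described above can be sketched with the standard library alone. In this example the proxy addresses are placeholders, and `ProxyPool`, `opener_for`, and `fetch_via_pool` are hypothetical names chosen for illustration. A managed proxy service would normally handle rotation, health checks, and authentication for you.

```python
import itertools
import urllib.request

# Placeholder endpoints; replace with addresses from your own provider.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


class ProxyPool:
    """Hands out proxies in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy list must not be empty")
        self._cycle = itertools.cycle(list(proxies))

    def next_proxy(self):
        return next(self._cycle)


def opener_for(proxy_url):
    """Build an opener that sends HTTP and HTTPS traffic via proxy_url."""
    handler = urllib.request.ProxyHandler(
        {"http": proxy_url, "https": proxy_url}
    )
    return urllib.request.build_opener(handler)


def fetch_via_pool(url, pool, timeout=10.0):
    """Fetch url through the next proxy in the pool."""
    proxy = pool.next_proxy()
    with opener_for(proxy).open(url, timeout=timeout) as resp:
        return resp.read()
```

Round-robin is the simplest rotation policy. Picking proxies at random or retiring ones that get blocked are common refinements.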

3. Rate Limiting and Throttling

Rate limiting and throttling is the practice of controlling the rate at which requests are made to a website. This is done to avoid triggering anti-bot mechanisms or overloading the website’s server.

Rate limiting and throttling mimic human-like behavior, reducing the chances of getting blocked.

Add random delays between requests, and avoid predictable patterns such as fixed intervals, as they can be easily identified as bot activity.

If the website starts responding more slowly or returns errors such as 503 Service Unavailable, reduce the frequency of your requests before you continue scraping.
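A minimal sketch of these two ideas, random delays plus slowing down when the server struggles, might look like the following. The `Throttle` class, its default values, and the choice of status codes 429 and 503 as overload signals are assumptions for illustration and should be tuned per website.

```python
import random
import time


class Throttle:
    """Random delay between requests, plus a penalty when the site struggles."""

    def __init__(self, min_delay=1.0, max_delay=3.0,
                 slow_threshold=5.0, max_penalty=60.0):
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.slow_threshold = slow_threshold  # seconds
        self.max_penalty = max_penalty        # seconds
        self.penalty = 0.0

    def next_delay(self):
        """Random base delay (no fixed pattern) plus the current penalty."""
        return random.uniform(self.min_delay, self.max_delay) + self.penalty

    def record(self, status_code, elapsed_seconds):
        """Adjust the penalty after each response."""
        overloaded = (status_code in (429, 503)
                      or elapsed_seconds > self.slow_threshold)
        if overloaded:
            # Start at 1 second, then double, up to max_penalty.
            self.penalty = min(self.max_penalty, max(1.0, self.penalty * 2))
        else:
            # Ease back toward normal speed after healthy responses.
            self.penalty /= 2

    def wait(self):
        time.sleep(self.next_delay())
```

In a scraping loop you would call `wait()` before each request and `record()` after it, passing the response status and how long the request took.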

4. Handling CAPTCHAs and Other Anti-Scraping Measures

Many websites now use CAPTCHAs and anti-bot measures such as browser fingerprinting to block automated scrapers.

To overcome barriers like CAPTCHAs, you can use CAPTCHA-solving services such as 2Captcha or AntiCaptcha.

To deal with browser fingerprinting, use browser automation tools like Puppeteer or Selenium, which drive a real browser (optionally in headless mode) to simulate actual browsing activity.

Rotating user-agents with each request can also help avoid detection, as many websites inspect the User-Agent header to detect bots.
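User-agent rotation can be sketched in a few lines with the standard library. The strings in `USER_AGENTS` are illustrative samples in the usual browser format, and `build_request` is a hypothetical helper. In practice you would maintain a larger, up-to-date list.

```python
import random
import urllib.request

# Sample User-Agent strings for illustration only; keep your own list current.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0",
]


def build_request(url):
    """Create a request that carries a randomly chosen User-Agent header."""
    headers = {"User-Agent": random.choice(USER_AGENTS)}
    return urllib.request.Request(url, headers=headers)
```

The returned object can be passed to `urllib.request.urlopen`. Note that a changing User-Agent alone does not defeat fingerprinting, which looks at many other signals.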

5. Error Handling and Retrying Failed Requests

When scraping large datasets, a robust error-handling mechanism is crucial. This preparation ensures that you can deal with errors effectively, making your web scraping process more reliable.

If a request fails due to a temporary issue, such as server overload or network failure, you can implement retry logic.

A request can also fail because of issues that a retry will not resolve, such as changes in the website's structure. In such cases, it is better to log the error and move on to the next task than to crash the entire scraper.

You should also set appropriate timeout limits for requests so that your scraper doesn’t get stuck waiting indefinitely for a response.
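The retry, logging, and timeout practices above could be combined roughly as follows. This is a sketch under stated assumptions: `fetch_with_retry`, the set of retryable status codes, and the exponential backoff schedule are choices made for this example, not a prescribed policy.

```python
import logging
import time
import urllib.error
import urllib.request

logger = logging.getLogger("scraper")

# Status codes treated as temporary in this sketch (an assumption).
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def fetch_with_retry(url, max_attempts=3, timeout=10.0, base_delay=1.0,
                     opener=urllib.request.urlopen, sleep=time.sleep):
    """Return the page body, or None if the request ultimately fails."""
    for attempt in range(1, max_attempts + 1):
        try:
            # The timeout keeps the scraper from waiting indefinitely.
            with opener(url, timeout=timeout) as resp:
                return resp.read()
        except urllib.error.HTTPError as exc:
            if exc.code not in RETRYABLE_STATUS:
                # Retrying will not help (for example 404): log and move on.
                logger.error("Permanent error %s for %s", exc.code, url)
                return None
            logger.warning("HTTP %s for %s (attempt %d)",
                           exc.code, url, attempt)
        except OSError as exc:
            # Covers URLError, connection failures, and socket timeouts.
            logger.warning("Network error for %s (attempt %d): %s",
                           url, attempt, exc)
        if attempt < max_attempts:
            # Exponential backoff: base_delay, then 2x, then 4x, ...
            sleep(base_delay * 2 ** (attempt - 1))
    logger.error("Giving up on %s after %d attempts", url, max_attempts)
    return None
```

Returning `None` instead of raising lets the calling loop skip a failed page and carry on with the next task, while the log keeps a record of what went wrong.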

Why Should You Consult ScrapeHero for Web Scraping Large Datasets?

Web scraping large datasets requires expertise in managing complex web structures, dynamic content, and anti-scraping mechanisms.

It will be difficult for enterprises that do not prioritize data as a core aspect of their business to handle technical hurdles, legal issues, and data quality concerns.

A full-service web scraping provider like ScrapeHero can help you with this and efficiently handle increasing amounts of data.

Our robust web crawling capabilities can extract millions of pages, overcoming anti-scraping measures.

With over a decade of experience in web scraping, we have a wide range of customers worldwide, from Global FMCG Conglomerates to Global Retailers.

We can help you with your data scraping needs by handling the complete data pipeline, from data extraction to custom robotic process automation.

Frequently Asked Questions

Large-scale web scraping is the process of automatically collecting vast amounts of data from multiple sources using distributed scrapers and robust infrastructure.

Some best practices for web scraping large datasets include using distributed scraping, rotating proxies, limiting request rates, and handling anti-scraping measures.

You can use BeautifulSoup for web scraping, as it is a flexible parsing library that handles both HTML and XML. It only parses content, so pair it with an HTTP client to download the pages.

To speed up scraping, you can employ asynchronous requests, reduce unnecessary HTTP requests, and optimize data parsing techniques.
