Google Sheets’ primary function is to create and edit spreadsheets directly in the web browser, but it can also be used for web scraping. Google Sheets uses special formulas to extract data and then import it directly into the spreadsheet.

This blog focuses on the three main functions, IMPORTHTML, IMPORTXML, and IMPORTDATA, used to get data from websites into Google Sheets. So, let’s get started!

Functions Used To Scrape Data From Websites to Google Sheets

Similar to web scraping with Excel, web scraping using Google Sheets also does not require coding and provides various ways to analyze the data that is extracted. Google Sheets has mainly three built-in functions for importing data from web pages. They are:

- IMPORTHTML

- IMPORTXML

- IMPORTDATA

Web Scraping With Google Sheets Using IMPORTHTML

With Google Sheets’ built-in function IMPORTHTML, you can fetch data from a table or a list on a web page. If there are multiple tables, you need to give the specific number of the table that you want to scrape.

Syntax of the IMPORTHTML Formula:

IMPORTHTML(url, query, index)Here,

- url – URL of the HTML web page to scrape

- query – a list or a table from which you scrape data

- index – Number of tables or lists that you need to fetch

How To Scrape Data From Websites to Google Sheets Using IMPORTHTML

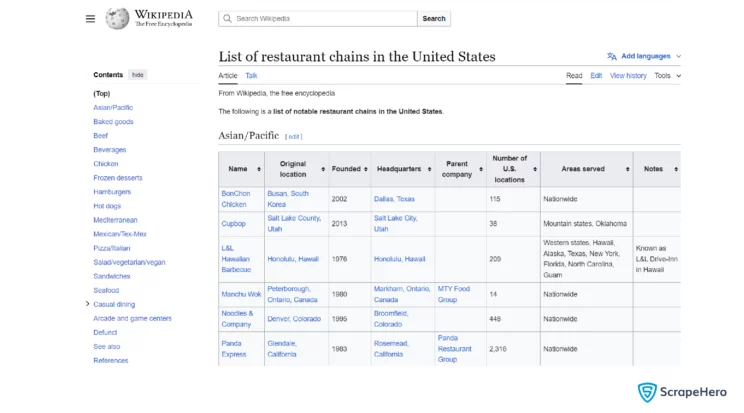

Let’s take a look at how you can get data from websites to Google Sheets with the IMPORTHTML function. Here, let’s consider scraping the tables from the web page “List of restaurant chains in the United States”.

Steps:

1. Open Google Sheets

2. In an empty cell, type =IMPORTHTML(url, query, index)

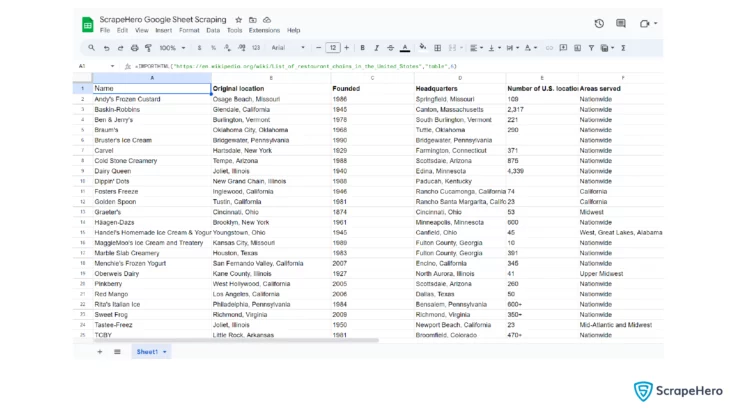

Here, replace the url with the website’s URL, the query with “table”, and the index with the number of the table you need to scrape. Let’s scrape table 6 of the sample website that was considered.So the formula becomes:

=IMPORTHTML("https://en.wikipedia.org/wiki/List_of_restaurant_chains_in_the_United_States","table",6)3. The final result produced is:

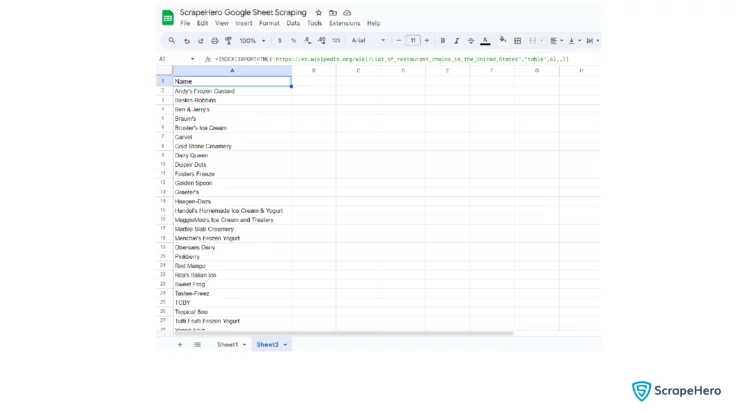

4. Suppose you need to scrape only a specific column from the table, then you can specify the index as:

=INDEX(IMPORTHTML("https://en.wikipedia.org/wiki/List_of_restaurant_chains_in_the_United_States","table",6),,1)

Web Scraping With Google Sheets Using IMPORTXML

Google Sheets’ IMPORTXML function helps you fetch data from structured data types like XML, CSV/TSV, HTML, and RSS/ATOM XML feeds. This function can be used to scrape web page titles or specific sections.

Syntax of the IMPORTXML Formula:

IMPORTXML(url, xpath_query)Here,

- url – URL of the web page to scrape the data

- xpath_query – It is the XPath expression that is extracted from the XML or HTML source

How To Scrape Data From Websites to Google Sheets Using IMPORTXML

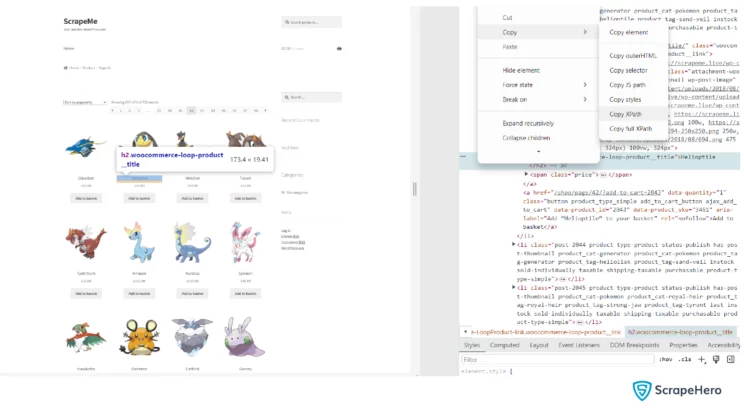

To understand how web scraping using Google Sheets is carried out with the function IMPORTXML, let’s consider an example. Let’s extract titles from the web page ScrapeMe.

Steps:

1. Open Google Sheets

2. In an empty cell, type =IMPORTXML(url,xpath_query)

Here, you must replace the url with the URL of the website from which you need to scrape data. Also, replace xpath_query with the XPath expression that you get from the HTML source.

To obtain the XPath expression, right-click on the title of the product, say Helioptile in this case. Then click on inspect, and the inspect element window opens. The corresponding HTML element gets highlighted:

<h2 class="woocommerce-loop-product__title">Helioptile</h2>

Right-click on the highlighted h2 tag. Later, click on the Copy button. Finally, select the Copy XPath button. So you’ll get:

//*[@id="main"]/ul/li[2]/a[1]/h2

So the formula becomes:

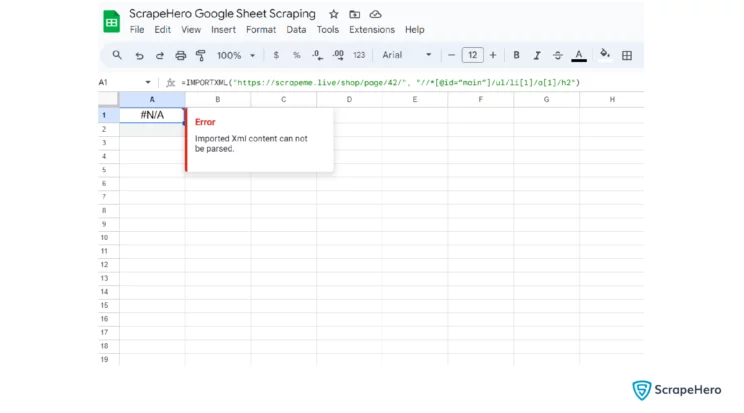

=IMPORTXML("https://scrapeme.live/shop/page/42/", "//*[@id='main']/ul/li[1]/a[1]/h2")Remember to replace the double quotes in the xpath_query with single quotes, or else you’ll get an error message.

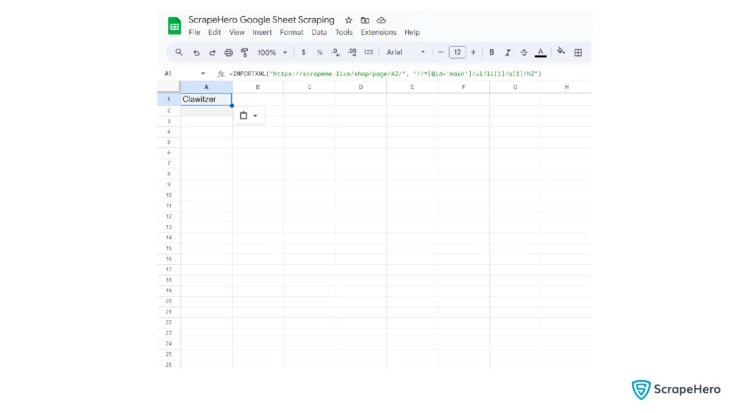

3. The data that is scraped is:

4. To pull all the Pokemon names you have to use the XPath query that selects all the names at once.

It is clear that the data is pulled from the li element with an index 1. So remove this index to select all the name tags.

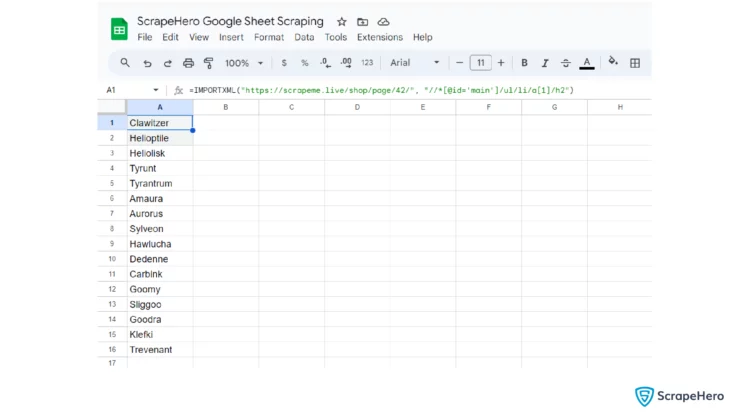

The formula becomes:

=IMPORTXML("https://scrapeme.live/shop/page/42/", "//*[@id='main']/ul/li/a[1]/h2")

Web Scraping With Google Sheets Using IMPORTDATA

The IMPORTDATA function is used by Google Sheets to work with .csv (comma-separated value) or .tsv (tab-separated value) file on the internet.

The values are either separated with commas or tabs, allowing Google Sheets to understand how the data is to be imported into cells.

Syntax of IMPORTDATA Formula:

IMPORTDATA(url)Here,

url – URL of the CSV or TSV file on the internet you want to extract data from

How To Scrape Data From Websites to Google Sheets Using IMPORTDATA

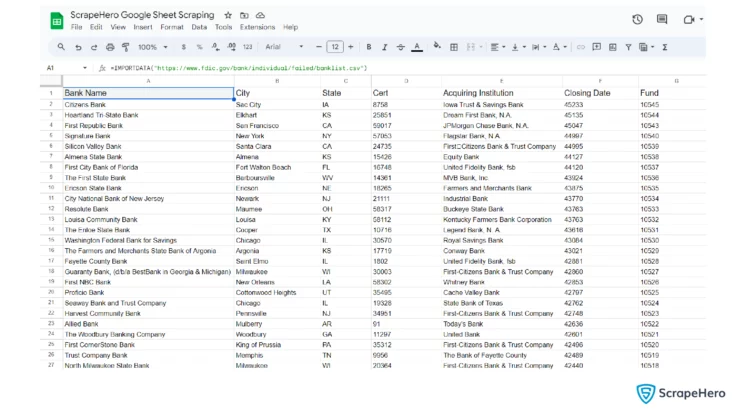

Let’s scrape all the available details from the CSV file ‘FDIC Failed Bank List’ on the web page DATA CATALOG. All the details are imported from this CSV file into Google Sheets in no time.

Steps:

1. Open Google Sheets

2. In an empty cell, type =IMPORTDATA(url)

Replace the url with the URL of the CSV file from which you need to scrape data.

So the formula becomes:

=IMPORTDATA("https://www.fdic.gov/bank/individual/failed/banklist.csv")3. The final data extracted to the Google Sheet is:

Wrapping Up

As mentioned earlier, Google Sheets’s primary objective is not scraping websites. So there are many limitations when compared to other web scraping methods. Google Sheets cannot scrape hundreds of data points at once.

So when it comes to complex web scraping or dealing with proxies or browser fingerprints, it is advisable to go with an enterprise-grade web scraping service provider like ScrapeHero.

You can also make use of ScrapeHero Cloud, which offers pre-built crawlers and APIs if you have specific web scraping needs, like scraping Google reviews. It is affordable, fast, and reliable, offering a no-code approach to users without extensive technical knowledge.

If your scraping needs are much larger, then you can use ScrapeHero web scraping services, which are bespoke, custom-made, advanced, and cater to all industries globally.

Frequently Asked Questions

Yes, web scraping with Google Sheets is possible using some of its built-in functions.

There are different methods to automatically pull data from a website to Google Sheets. You can use the built-in functions such as IMPORTHTML, or you can use Google Apps Script, or else you can make use of third-party services and add-ons like IMPORTFROMWEB.

Scraping JavaScript-loaded websites is challenging. For this use, use Google Apps Script with either Puppeteer or any third-party APIs. If the data needs are smaller, it is better to go for manual tools like web scraping browser extensions.

Scraping data from Google Sheets is easier than scraping websites. You can use the Google Sheets API directly. You can also scrape from Google Sheets using the Google Apps Script.

We can help with your data or automation needs

Turn the Internet into meaningful, structured and usable data