Ruby, JavaScript, R, and Go (Golang) are some programming languages used for web crawling, along with Python.

But why does Python dominate the field, and why is it preferred over other languages? To find out, read this article on the benefits of using Python for crawling.

A Brief Overview of Web Crawling

Web crawling is an automated process of systematically indexing and retrieving web content by a web crawler program.

You can read our article, web crawling with Python, to better understand web crawling, web crawlers, and how web crawling differs from web scraping.

Advantages of Python in Web Crawling

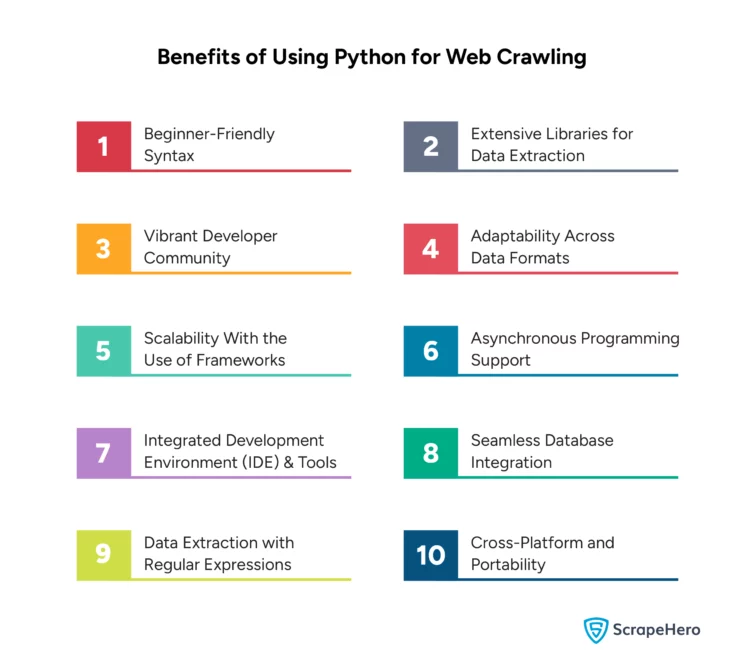

Why is Python the best for web crawling? Python is not just popular; it’s the best choice for developers and businesses that need data extraction. Its practical benefits include:

- Beginner-Friendly Syntax

- Extensive Libraries for Data Extraction

- Vibrant Developer Community

- Adaptability Across Data Formats

- Scalability With the Use of Frameworks

- Asynchronous Programming Support

- Integrated Development Environment (IDE) and Tools

- Seamless Database Integration

- Data Extraction with Regular Expressions

- Cross-Platform and Portability

1. Beginner-Friendly Syntax

Python is simple for beginners to learn and use because of its clean syntax and accessible code.

As a result, its learning curve is gentler than that of many other languages, allowing developers to quickly create and deploy web crawlers.

2. Extensive Libraries for Data Extraction

Python has robust libraries designed explicitly for web crawling and scraping, including BeautifulSoup and Selenium.

These libraries can handle everything from simple HTML parsing to complex browser-based data extraction.

3. Vibrant Developer Community

Python has broad support from a large community of developers, who maintain extensive resources, including tutorials, forums, and third-party modules.

Community support is essential for solving problems and improving scripts for efficient web crawling.

4. Adaptability Across Data Formats

Python can handle different data sources and formats like HTML, XML, JSON, and PDFs.

Due to this flexibility, it is suitable for web crawling tasks across different websites and applications.
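As a rough illustration of this flexibility, the sketch below pulls the same product name out of JSON, XML, and HTML using only the standard library. The sample payloads and the helper names (`product_from_json`, `product_from_xml`, `product_from_html`, `TitleParser`) are made up for this example. PDFs are left out because they typically need a third-party package.

```python
import json
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

# Hypothetical sample payloads standing in for real crawled responses.
JSON_DOC = '{"product": "Widget", "price": 9.99}'
XML_DOC = "<item><product>Widget</product><price>9.99</price></item>"
HTML_DOC = "<html><head><title>Widget</title></head><body><p>9.99</p></body></html>"


class TitleParser(HTMLParser):
    """Collects the text inside the <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def product_from_json(text):
    # json.loads turns the JSON text into a plain dict.
    return json.loads(text)["product"]


def product_from_xml(text):
    # findtext returns the text of the first matching child element.
    return ET.fromstring(text).findtext("product")


def product_from_html(text):
    parser = TitleParser()
    parser.feed(text)
    return parser.title.strip()


print(product_from_json(JSON_DOC))
print(product_from_xml(XML_DOC))
print(product_from_html(HTML_DOC))
```

Each format gets its own small parser, but the rest of a crawler can treat the extracted values the same way.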

5. Scalability With the Use of Frameworks

Python scales well: frameworks such as Crawl Frontier can be integrated with other crawling frameworks to manage large-scale crawls.

These frameworks help coordinate extensive crawls across multiple domains while keeping them efficient.
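To give a feel for what a crawl frontier does, here is a toy version built on the standard library. It is not the Crawl Frontier API; the `Frontier` class, its method names, and the priority scheme are assumptions made for this sketch. The idea is simply a queue that decides which URL to crawl next and never hands out the same URL twice.

```python
import heapq
from urllib.parse import urldefrag


class Frontier:
    """A toy crawl frontier: a priority queue of URLs with de-duplication."""

    def __init__(self):
        self._heap = []     # entries are (priority, insertion_order, url)
        self._seen = set()  # every URL ever enqueued
        self._counter = 0   # tie-breaker so equal priorities stay first-in, first-out

    def add(self, url, priority=0):
        """Queue a URL once; lower priority numbers are crawled first."""
        url, _fragment = urldefrag(url)  # page#a and page#b are the same page
        if url in self._seen:
            return False
        self._seen.add(url)
        heapq.heappush(self._heap, (priority, self._counter, url))
        self._counter += 1
        return True

    def next_url(self):
        """Return the next URL to crawl, or None when the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


frontier = Frontier()
frontier.add("https://example.com/deep/page", priority=2)
frontier.add("https://example.com/", priority=0)
frontier.add("https://example.com/#top", priority=0)  # same page as above, ignored

order = []
while len(frontier):
    order.append(frontier.next_url())
print(order)
```

A production frontier would usually also persist its state and spread requests across domains, which is the kind of work dedicated frameworks take on.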

6. Asynchronous Programming Support

Python 3.5 and later versions support asynchronous programming through libraries such as `asyncio` and `aiohttp`.

Asynchronous programming support is crucial for efficient web crawling. It allows you to handle a large number of requests and responses without blocking the main execution thread.
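The sketch below shows the general pattern with `asyncio` alone. The `fetch` coroutine is a stand-in that sleeps instead of making a real request, and the `crawl` helper and `max_concurrency` parameter are names chosen for this example. In a real crawler, the sleep would be replaced by an HTTP call made with a library such as `aiohttp`.

```python
import asyncio


async def fetch(url, delay=0.05):
    """Stand-in for a network request; sleeps instead of doing real I/O."""
    await asyncio.sleep(delay)
    return f"<html>content of {url}</html>"


async def crawl(urls, max_concurrency=5):
    """Fetch all URLs concurrently, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url):
        # The semaphore caps how many fetches run at the same time.
        async with semaphore:
            return url, await fetch(url)

    pairs = await asyncio.gather(*(bounded_fetch(u) for u in urls))
    return dict(pairs)


urls = [f"https://example.com/page/{i}" for i in range(10)]
pages = asyncio.run(crawl(urls))
print(len(pages), "pages fetched")
```

Because the waits overlap, the ten simulated requests finish in roughly the time of two sequential ones rather than ten. The semaphore is there so that a crawler does not flood a site with simultaneous requests.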

7. Integrated Development Environment (IDE) and Tools

Several Integrated Development Environments (IDEs) and coding tools, such as PyCharm, Jupyter, and Visual Studio Code, support Python.

These IDEs and tools provide excellent environments for coding, testing, and deploying web crawlers.

8. Seamless Database Integration

Python supports databases and storage solutions, including SQL (MySQL and PostgreSQL) and NoSQL (MongoDB and Redis).

This seamless connection between Python and databases makes it easy for developers to store crawled data efficiently.
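As a small, hedged example, the snippet below stores crawled pages in SQLite through the standard-library `sqlite3` module. SQLite is used here only as a stand-in so the example runs without a database server; the `pages` table and the `save_page` helper are hypothetical. Drivers for MySQL, PostgreSQL, MongoDB, or Redis are separate packages with their own connection setup.

```python
import sqlite3

# An in-memory database keeps the sketch self-contained;
# pass a file path instead to keep the data on disk.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE pages (
        url        TEXT PRIMARY KEY,
        title      TEXT,
        fetched_at TEXT DEFAULT CURRENT_TIMESTAMP
    )
    """
)


def save_page(conn, url, title):
    """Store a crawled page, replacing any earlier row for the same URL."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO pages (url, title) VALUES (?, ?)",
            (url, title),
        )


save_page(conn, "https://example.com/", "Example Domain")
save_page(conn, "https://example.com/about", "About")
save_page(conn, "https://example.com/", "Example Domain (updated)")  # re-crawl

rows = conn.execute("SELECT url, title FROM pages ORDER BY url").fetchall()
for url, title in rows:
    print(url, "->", title)
```

Using the URL as the primary key means a re-crawled page overwrites its old row instead of creating a duplicate.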

9. Data Extraction with Regular Expressions

Python has built-in support for regular expressions (RegEx) through its standard `re` module, which is useful for extracting data from web pages.

With regular expressions, a crawler can pull specific patterns, such as prices or email addresses, out of large volumes of text.
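Here is a minimal sketch of pattern-based extraction with the `re` module. The HTML fragment is invented for the example, and both patterns are deliberately simple assumptions rather than complete validators.

```python
import re

# Hypothetical HTML fragment standing in for a crawled product page.
html = """
<div class="product"><span class="name">Widget</span> <span class="price">$19.99</span></div>
<div class="product"><span class="name">Gadget</span> <span class="price">$5.00</span></div>
<p>Contact: sales@example.com or support@example.com</p>
"""

# A dollar sign followed by digits, a dot, and exactly two decimals.
PRICE_RE = re.compile(r"\$(\d+\.\d{2})")
# A deliberately simple email pattern; real addresses can be more varied.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

# findall returns the captured group for PRICE_RE and the full match for EMAIL_RE.
prices = [float(p) for p in PRICE_RE.findall(html)]
emails = EMAIL_RE.findall(html)

print(prices)
print(emails)
```

Regular expressions work well for flat patterns like these. For data that depends on the nesting of HTML tags, an HTML parser is usually the more reliable choice.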

10. Cross-Platform and Portability

Python web crawlers run on Windows, macOS, and Linux.

This portability means that crawlers can be developed and tested on one platform and then deployed on another with little or no change.

ScrapeHero Python Pre-built Web Crawlers for Effortless Crawling

Dealing with web crawlers often involves numerous challenges, including handling JavaScript-heavy websites, overcoming IP blocks and rate limits, and ensuring the accuracy of data extraction.

By utilizing the advanced crawlers and APIs from ScrapeHero Cloud, you can effectively overcome the challenges of web crawling. This not only simplifies the process but also ensures data extraction accuracy, making it a superior solution.

ScrapeHero Crawlers are ready-made web crawlers specifically designed to extract data from websites and download it in various formats, including Excel, CSV, and JSON.

These prebuilt crawlers let you configure what you need and fetch the data without a dedicated team or hosting infrastructure, saving you time and money.

Here’s how you use ScrapeHero Crawlers:

- Go to ScrapeHero Cloud and create an account.

- Search for any ScrapeHero crawler of your choice.

- Add the crawler to your crawler list.

- Add the search URLs or keywords to the input.

- Click Gather Data.

For a start, try our Amazon product reviews crawler. It extracts customer reviews from any product on Amazon and provides details like product name, brand, reviews, and ratings.

You can read the article on how to scrape Amazon reviews to learn the process in detail.

ScrapeHero Python Web Crawling Services for Enterprises

Setting up servers and web crawling tools or software is another significant challenge that enterprises can encounter with data extraction.

We recommend outsourcing this work to ScrapeHero web crawling services so that your enterprise can focus on its business.

ScrapeHero web crawling services are tailored to enterprises that need custom crawlers and want to stay compliant with website policies and legal requirements.

As a full-service provider and a leader in web crawling services globally, we deliver unparalleled data quality and consistency, enhanced by custom solutions.

Our global infrastructure makes large-scale data extraction easy and painless by handling complex JavaScript/Ajax sites, CAPTCHA, and IP blacklisting transparently.

Businesses can rely on us to meet their large-scale web crawling needs effectively and efficiently.

Wrapping Up

Python is considered the best language for web crawling due to its vast ecosystem of libraries, supportive community, and versatility in handling diverse web technologies.

As a web crawling service provider, ScrapeHero understands the benefits of using Python for crawling and focuses on building solutions in Python for its customers.

Contact our expert team to get high-quality data services without compromising on time or cost.

Frequently Asked Questions

1. Give an example of a Python web crawler.

A basic example of a Python web crawler is the Walmart product details and pricing crawler from ScrapeHero Cloud.

It is used to gather product details such as pricing, rating, number of reviews, product images, and other data points from the Walmart website.

You can create a web crawler in Python to scrape data from a website using the BeautifulSoup and Requests libraries.
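As a rough sketch of what such a crawler looks like, the example below does a breadth-first crawl that stays on one domain. To keep it runnable without installing anything, it swaps in the standard library (`urllib` and `html.parser`) for Requests and BeautifulSoup. The names `LinkParser`, `fetch_html`, `crawl`, and the in-memory `SITE` are all made up for this illustration.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def fetch_html(url):
    """Download a page over the network using the standard library."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url, fetch=fetch_html, max_pages=20):
    """Breadth-first crawl that stays on the start URL's domain."""
    domain = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited = []

    while queue and len(visited) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except Exception:
            continue  # skip pages that fail to load
        visited.append(url)

        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links
            if urlparse(link).netloc == domain and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical three-page site served from a dict, so the sketch runs offline.
SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="https://other.example/">ext</a>',
    "https://example.com/b": '<a href="/">home</a>',
}

visited = crawl("https://example.com/", fetch=SITE.__getitem__)
print(visited)
```

To crawl a live site, call `crawl` with only a start URL so that it uses `fetch_html`. Before doing so, check the site's terms and `robots.txt`, and add a delay between requests.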
